You say you want a revolution?

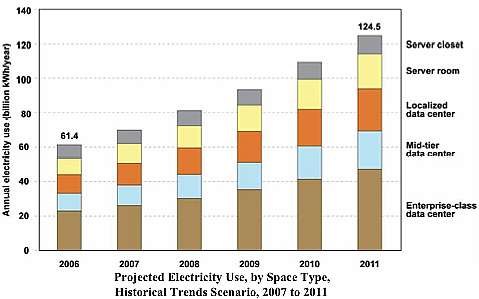

Energy-efficient data centers are in the news again, with the EPA reporting that data centers use 1.5% of US electricity – almost 6 million homes’ worth – and that usage is on track to double in five years.

The numbers don’t include the “custom server” power usage of Google, which I estimate will be about 250 MW next year. If Google keeps selling ads, and people keep using Google, I expect that number will grow by ~50 MW a year for the next several years.
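To make that estimate concrete, here is a minimal sketch of the projection. It assumes simple linear growth, as the paragraph above does; the function name is hypothetical and the figures are the post's own rough guesses, not measured data.

```python
def projected_power_mw(base_mw, growth_mw_per_year, years_out):
    """Linear projection: a base load plus a fixed yearly increment."""
    return base_mw + growth_mw_per_year * years_out


# Figures from the estimate above: ~250 MW next year, growing ~50 MW a year.
for years_out in range(4):
    mw = projected_power_mw(250, 50, years_out)
    print(f"next year + {years_out}: ~{mw} MW")
```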

Measuring & comparing power use

Forget about global warming: that is a lot of power. Expensive power. Can we cut the power requirement? We could, if we had a way to reliably benchmark power consumption across architectures. That is what JouleSort: A Balanced Energy-Efficiency Benchmark (PDF) by Suzanne Rivoire, Mehul A. Shah, Parthasarathy Ranganathan and Christos Kozyrakis tries to do. Thanks to alert reader Wes Felter for bringing it to my attention.

As noted in Powering a warehouse-sized computer, system power requirements are overstated. Power requirements also vary with workload. So how to compare?

The benchmark of the future

The authors chose a sort algorithm:

We choose sort as the workload for the same basic reason that the Terabyte Sort, MinuteSort, PennySort, and Performance-price Sort benchmarks do: it is simple to state and balances system component use. Sort stresses all core components of a system: memory, CPU, and I/O. Sort also exercises the OS and filesystem. Sort is a portable workload; it is applicable to a variety of systems from mobile devices to large server configurations. Another natural reason for choosing sort is that it represents sequential I/O tasks in data management workloads.

JouleSort is an I/O-centric benchmark that measures the energy efficiency of systems at peak use. Like previous sort benchmarks, one of its goals is to gauge the end-to-end effectiveness of improvements in system components. To do so, JouleSort allows us to compare the energy efficiencies of a variety of disparate system configurations. Because of the simplicity and portability of sort, previous sort benchmarks have been technology trend bellwethers, for example, foreshadowing the transition from supercomputers to clusters. Similarly, an important purpose of JouleSort is to chart past trends and gain insight into future trends in energy efficiency.
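A minimal sketch of how such a score could be computed, assuming the metric is records sorted per joule and that energy is average wall power multiplied by elapsed time. The function name and the sample numbers are hypothetical illustrations, not results from the paper.

```python
def records_per_joule(records_sorted, avg_power_watts, elapsed_seconds):
    """Energy efficiency of one sort run: records sorted per joule consumed."""
    # One watt sustained for one second is one joule.
    energy_joules = avg_power_watts * elapsed_seconds
    return records_sorted / energy_joules


# Illustrative only: 100 million records sorted in 100 s at an average 100 W.
score = records_per_joule(100_000_000, 100.0, 100.0)
print(f"{score:.0f} records/joule")
```

Because the score divides work by energy, a slower machine can still win if it draws proportionally less power.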

The authors focused on 2 things at odds with the Google power paper. They chose a benchmark that exercised all components of the system, while the Googlers concluded that CPU utilization was the key variable. They also focused on peak workload power consumption, rather than on Google’s strategy of throttling back at peak loads while reducing idle-load consumption.

The differences may be a question of the problems each examined. Google has well-defined workloads with strong time-of-day dependencies. The Stanford/HP Labs team defined a database sequential access workload. My guess is that both approaches are valid for understanding parts of the energy problem and that neither is sufficient for a complete picture.

Update: Another major difference is that the JouleSort team looked at individual servers, while Google’s paper focused on the efficiency of racks, PDU groups and multi-thousand node clusters.

Prototyping an energy efficient server

Any benchmark is a compromise. Much of the paper presents the authors’ rationale for their choices, which I trust will be hashed out by people competent to debate them. I could see how some different choices might change the results, but the authors made reasonable choices.

They used the benchmark to evaluate several systems: some “unbalanced” systems, such as a laptop they had in the lab, and some “balanced” systems configured to meet the needs of the benchmark most efficiently.

They found that CPU utilization on the unbalanced systems was quite low, ranging from 1% to 26%. As a result, those systems didn’t accomplish much work for the power they consumed.

Since the CPU is usually the highest power component, these results suggest that building a system with more I/O to complement the available processing capacity should provide better energy efficiencies.

Ah, the irony! 40 years after the minicomputer we are back to a batch mainframe I/O-centric architecture. All things old are new again.

Design for efficiency

Storistas will discern that disks and bandwidth are critical to efficiency in this benchmark. To keep the CPU busy requires lots of bandwidth and I/O. At 15 W each, it doesn’t take many enterprise disks to overtake the CPU as the major power sink. The balanced system required 2 trays of 6 disks each to keep a dual-core CPU busy.
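A quick sketch of that disk arithmetic, using the 15 W per enterprise disk and the 2 trays of 6 disks mentioned above. The 65 W CPU figure is a hypothetical placeholder, since the post does not give the CPU's power draw, and the function names are illustrative.

```python
DISK_WATTS = 15  # per enterprise disk, from the text above


def total_disk_power(trays, disks_per_tray, disk_watts=DISK_WATTS):
    """Total power drawn by all disks in the configuration."""
    return trays * disks_per_tray * disk_watts


def disks_to_overtake(cpu_watts, disk_watts=DISK_WATTS):
    """Smallest number of disks whose combined draw exceeds the CPU's."""
    return int(cpu_watts // disk_watts) + 1


print(total_disk_power(2, 6), "W for the balanced system's 12 disks")
# Assumed 65 W CPU (not from the post): how many disks to exceed it?
print(disks_to_overtake(65), "disks draw more than a 65 W CPU")
```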

Here’s the configuration of a balanced server; note the disk components.

A really efficient server

The team then built a server optimized for the benchmark. The configuration:

Note the consumer CPU and the notebook disk drives. The controller types are more an artifact of the limited choices in motherboards that support mobile chips and lots of disks. Power may indeed be the factor that tips the industry to 2.5″ drives. The power savings are immense over the fast 3.5″ drives.

File systems, RAM and power supplies

The authors looked at some other issues as well.

The benchmark is a sequential sort. The authors found that file systems with higher sequential access rates were more efficient. Developers, the time may not be too far away when your code is measured on power efficiency.

They also found that reducing the RAM footprint to the needed capacity raised efficiency as well.

They also found that the winning system could use a much smaller power supply and that at loading below 68% the original and replacement power supplies were about equally efficient. The bigger issue is the cost of over-provisioning for data center power. The authors suggest that power-factor corrected power supplies are required to make energy efficient servers economic as well.

The StorageMojo take

As the breadth of the paper suggests, power efficiency requires a holistic understanding of compute, I/O, software, power factors and configuration trade-offs. Some of the supercomputer folks can do this, but the average data center is years away from this level of workload understanding.

Instead, research should point to a few things that increase efficiency and reduce consumption across a wide range of workloads and configurations. Mobile CPUs and notebook disks are 2 likely candidates. Software effects will prove significant as well because widely used software affects so many systems.

We should also remember other areas of power waste. I’m an astronomy buff and the amount of energy used across the US to illuminate empty parking lots and untraveled streets is immense and light-polluting. There are many ways we can become more power efficient. Data centers are just one important component in our connected world.

Comments welcome, as always. No more blogging about blogging either: my traffic dropped by almost 20% last week. For StorageMojo, quality content beats controversy hands down. I couldn’t be more pleased.

One near trivial note I wouldn’t bring up except that Robin flamed about it so well in:

http://storagemojo.com/2007/05/22/intels-best-and-worst/

and that it’s not inconsequential in a “holistic understanding” ^_^.

Note what I assume to be the FB-DIMM penalty in the first “balanced server” system; I’ve just finished building three different systems (replacements for old ones for my father and myself) for which I did peak power budgets, so I have the numbers still in my head.

I just bought some Kingston (w/Micron DRAM) 2GB unbuffered ECC PC2-5300 sticks, and the data sheet says they weigh in at 2.2 Watts each.

A little bit of Wikipedia and Googling indicates the CPU almost certainly uses FB-DIMMs, and the chart says their 2GB sticks weigh in at 7.5 Watts each. About 5 Watts more each!

For someone like Don MacAskill, for whom DRAM in his database servers is the single most important thing in selecting a motherboard, that’s going to add up to a bit of an ouch if he chooses to drink the FB-DIMM Kool-Aid. Only so much: 40 or 80W for 16 or 32GB (but that brackets this CPU’s TDP). As I remember, he uses 15K disks by choice and would use faster ones if available, but it all adds up.

– Harold
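Harold's arithmetic can be sketched as follows, using the per-stick figures he quotes (2.2 W for a 2GB unbuffered ECC stick, 7.5 W for a 2GB FB-DIMM). The function name is hypothetical, and the sketch assumes memory is populated entirely with 2GB sticks.

```python
UNBUFFERED_WATTS = 2.2  # per 2GB unbuffered ECC stick, quoted above
FBDIMM_WATTS = 7.5      # per 2GB FB-DIMM stick, quoted above
STICK_GB = 2


def fbdimm_penalty_watts(total_gb):
    """Extra power from using FB-DIMMs instead of unbuffered DIMMs."""
    sticks = total_gb // STICK_GB  # assumes all-2GB sticks
    return sticks * (FBDIMM_WATTS - UNBUFFERED_WATTS)


for total_gb in (16, 32):
    extra = fbdimm_penalty_watts(total_gb)
    print(f"{total_gb}GB: about {extra:.1f} W extra")
```

This lands close to the 40 and 80 W figures given in the comment for 16 and 32GB.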

Robin,

Definitely a good article. As someone who’s done a lot of work with low power platforms I found it refreshing to see that there’s now a benchmarking tool available for efficiency, etc. One thing I’d like to point out is that the “Ideal System” makes use of a mobile CPU, not a consumer CPU. This does skew the envelope somewhat since the design of those systems is not something easily entered into by Joe IT User. There’s a couple of players, additionally, in that efficiency space that have more mature designs that, while not focusing on massive I/O for storage, easily enter into power efficient computation space.

The first contender is ALWAYS VIA. Their Eden platform (in mini-ITX and pico-ITX formats) is the class leader when it comes to power efficiency and thermals. Not only are they exceptionally cool and quiet, their onboard AES and Padlock encryption units make them ideal for security appliances and the like. Companies like Ainkaboot (http://www.ainkaboot.co.uk) have also developed 4U clusters using these processors and low power storage devices.

The second contender, while not currently leading the pack, is AMD. AMD actually has better power management (scalable clock multipliers, etc.) that allow a deeper idle state than Intel. This will only increase in coming months with separate power planes for the onboard memory controller and processor cores. Coupled with Hypertransport’s ability (ver. 3.x) to ungang and dynamically scale width and power requirements, this platform becomes very competitive. On the mobile front, this isn’t currently the case but, it should be noted for comparison purposes especially at the consumer level.

Anyhow, those are just some of the thoughts floating through the ether at this time.

I’d love to hear your thoughts.

cheers,

Dave

The Stanford paper is excellent, in particular the list of references includes a lot of very useful connections into low power benchmarking and research.

I have been taking this general approach to the extreme with some work that I have called “Millicomputing” – I didn’t just look at mobile CPUs with low power disks, I have been exploring how to build useful (for some classes of application) enterprise servers out of CPUs designed for battery powered devices using flash based storage. These have an overall power per node of less than one watt, hence the name millicomputer, since they are measured in milliwatts.

2.5″ drives are dense and low power, but 1/3 the capacity and 3X the $/GB vs. 3.5″ may be a lot to pay for energy efficiency.

Excellent article and terrific comments.

Robin, I believe your images are broken (they appear to be using page-relative rather than root-relative URLs), leaving out some significant content for those viewing this not on the front page.

Good comments all.

Harold,

An Intel person confirmed to me that the FB-DIMM controller consumes five watts. That is why the FB-DIMMs need the extra “heat spreaders”. The original idea was to use standard high-volume DIMMs, presumably to save money, but the addition of the controller and heat spreaders has eliminated that advantage. Adding the 5 W penalty per stick just adds insult to injury.

Dave,

I don’t follow AMD, but all of Intel’s post-Netburst CPUs are based on the mobile architecture that Intel Israel came up with. In a very real sense, we are all using mobile architectures now.

Also, I wouldn’t expect an IT guy to piece together an energy efficient server. Vendors should. I suspect Intel has the jump on HP, IBM and Supermicro due to the Google contract for – among other features – energy efficient servers. Whether they can figure out how to communicate the benefits is an open question.

Thanks for the pointer to VIA. A couple of folks mentioned them and now I’m curious.

Adrian,

Good to hear from you. Are you still with Sun?

I love the milli-computing concept. Gordon Bell’s concept of a new generation of computing every 10 years is bumping into human-scale limits. Rather than make ever-smaller computers, at some point we reach a reasonable size and price point and build as much as we can into it. A cluster in the palm of your hand!

Wes,

I *think* I’m seeing 2.5″ drives beginning to close the gap with 3.5″ drives. Like all prior drive migrations, the SFF drives don’t need to match the $/GB to win, just get close enough so that their other advantages close the deal. As I noted last year, flash drives are a very power-efficient alternative. Drive vendors see the writing on the wall: the 3.5″ drive won’t live forever.

Marc, I couldn’t agree more!

Jonathan, thanks for flagging that. I think I’ve fixed it. Let me know if there is still a problem.

Robin

Hi Robin, I left Sun in 2004, went to eBay Research Labs for a while, and I’m currently working at Netflix, managing a team that works on website personalization algorithms. I’m doing the millicomputing stuff in my spare time, to keep current on hardware. I was inspired by what I found when helping design a homebrew mobile phone – see myPhone at http://www.hbmobile.com

I’m presenting a paper on millicomputing at the HPTS workshop in October http://www.hpts.ws and at CMG07 in San Diego in December.

Cheers Adrian