In our last episode we reviewed the paper’s supply and demand drivers. Now we look at the investigators’ top 10 obstacles and opportunities for cloud computing.

Adoption, growth and business obstacles

The paper identifies 10 obstacles and their associated opportunities. The first 3 are adoption obstacles, the next 5 are growth obstacles and the last 2 are policy and business obstacles to adoption. Each obstacle is named first, followed by its opportunities.

- Availability of Service. Use Multiple Cloud Providers to provide Business Continuity; Use Elasticity to Defend Against DDOS attacks

- Data Lock-In. Standardize APIs; Make compatible software available to enable Surge Computing

- Data Confidentiality and Auditability. Deploy Encryption, VLANs, and Firewalls; Accommodate National Laws via Geographical Data Storage

- Data Transfer Bottlenecks. FedExing Disks; Data Backup/Archival; Lower WAN Router Costs; Higher Bandwidth LAN Switches

- Performance Unpredictability. Improved Virtual Machine Support; Flash Memory; Gang Scheduling VMs for HPC apps

- Scalable Storage. Invent Scalable Store

- Bugs in Large-Scale Distributed Systems. Invent Debugger that relies on Distributed VMs

- Scaling Quickly. Invent Auto-Scaler that relies on Machine Learning; Snapshots to encourage Cloud Computing Conservationism

- Reputation Fate Sharing. Offer reputation-guarding services like those for email

- Software Licensing. Pay-for-use licenses; Bulk use sales

Service availability and performance unpredictability are the deal killers. If there aren’t acceptable answers – or acceptable applications – cloud computing is DOA.

Availability

Here’s a table from the paper:

Recent cloud services outages

The authors argue that users expect Google Search levels of availability and that the obvious answer is using multiple cloud providers. They also offer an interesting argument on the economics of Distributed Denial of Service (DDoS) attacks:

Criminals threaten to cut off the incomes of SaaS providers by making their service unavailable, extorting $10,000 to $50,000 payments to prevent the launch of a DDoS attack. Such attacks typically use large “botnets” that rent bots on the black market for $0.03 per bot (simulated bogus user) per week. . . . Suppose an EC2 instance can handle 500 bots, and an attack is launched that generates an extra 1 GB/second of bogus network bandwidth and 500,000 bots. At $0.03 per bot, such an attack would cost the attacker $15,000 invested up front. At AWS’s current prices, the attack would cost the victim an extra $360 per hour in network bandwidth and an extra $100 per hour (1,000 instances) of computation. The attack would therefore have to last 32 hours in order to cost the potential victim more than it would the blackmailer. . . . As with elasticity, Cloud Computing shifts the attack target from the SaaS provider to the Utility Computing provider, who . . . [is] likely to have already DDoS protection as a core competency.

Depending on the level of Internet criminality going forward, that last point will prove decisive for some customers.
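The paper’s DDoS arithmetic is easy to reproduce. A minimal sketch: the bot count, bots per instance, bogus bandwidth and bot rental price come from the quoted passage, while the two unit prices ($0.10 per GB transferred and $0.10 per instance-hour) are assumptions back-calculated from the quoted $360 and $100 hourly totals, not taken from an AWS price list.

```python
# Back-of-envelope DDoS economics from the quoted passage.
# The two unit prices are assumptions back-calculated from the paper's hourly totals.

BOTS = 500_000                  # bots in the attacking botnet
BOT_PRICE_PER_WEEK = 0.03       # dollars per bot per week (black-market rent)
BOTS_PER_INSTANCE = 500         # bots one EC2 instance can absorb
BOGUS_GB_PER_SEC = 1.0          # extra traffic the attack generates
PRICE_PER_GB = 0.10             # assumed: $360/hour divided by 3,600 GB/hour
PRICE_PER_INSTANCE_HOUR = 0.10  # assumed: $100/hour divided by 1,000 instances


def attacker_cost() -> float:
    """Up-front botnet rental; one week's rent covers attacks up to a week long."""
    return BOTS * BOT_PRICE_PER_WEEK


def victim_cost_per_hour() -> float:
    """Extra bandwidth plus extra instances the victim pays for each hour."""
    bandwidth = BOGUS_GB_PER_SEC * 3600 * PRICE_PER_GB
    instances = BOTS / BOTS_PER_INSTANCE
    return bandwidth + instances * PRICE_PER_INSTANCE_HOUR


def break_even_hours() -> float:
    """Attack duration at which the victim has spent as much as the attacker."""
    return attacker_cost() / victim_cost_per_hour()


if __name__ == "__main__":
    print(f"attacker: ${attacker_cost():,.0f}")
    print(f"victim:   ${victim_cost_per_hour():,.0f} per hour")
    print(f"break-even after {break_even_hours():.1f} hours")
```

This prints a $15,000 attacker cost, a $460 hourly victim cost and a break-even of about 32.6 hours, which the paper rounds to 32.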

Unpredictable performance

As always, I/O is the issue:

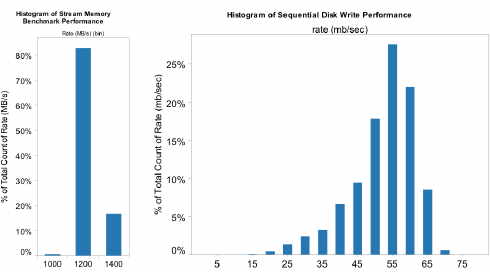

Our experience is that multiple Virtual Machines can share CPUs and main memory surprisingly well in Cloud Computing, but that I/O sharing is more problematic. Figure 3(a) shows the average memory bandwidth for 75 EC2 instances running the STREAM memory benchmark [32]. The mean bandwidth is 1355 MBytes per second, with a standard deviation of just 52 MBytes/sec, less than 4% of the mean. Figure 3(b) shows the average disk bandwidth for 75 EC2 instances each writing 1 GB files to local disk. The mean disk write bandwidth is nearly 55 MBytes per second with a standard deviation of a little over 9 MBytes/sec, more than 16% of the mean. This demonstrates the problem of I/O interference between virtual machines.

Virtual machine I/O problem
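The percentages in the quote are coefficients of variation: standard deviation divided by the mean. A minimal sketch that recomputes them from the paper’s rounded figures; since the disk mean is “nearly 55” and the deviation “a little over 9”, the true disk ratio is slightly higher than this estimate.

```python
# Relative variability of the EC2 benchmark results quoted from the paper.
# Inputs are the paper's rounded figures in MB/s, so results are approximate.


def coefficient_of_variation(mean: float, std_dev: float) -> float:
    """Standard deviation expressed as a fraction of the mean."""
    return std_dev / mean


memory_cv = coefficient_of_variation(mean=1355, std_dev=52)  # STREAM runs
disk_cv = coefficient_of_variation(mean=55, std_dev=9)       # 1 GB file writes

print(f"memory bandwidth CV: {memory_cv:.1%}")  # 3.8%
print(f"disk bandwidth CV:   {disk_cv:.1%}")    # 16.4%
print(f"disk is {disk_cv / memory_cv:.1f}x more variable")
```

Normalizing by the mean is what makes the two benchmarks comparable: disk throughput is roughly four times as variable as memory throughput, even though its absolute deviation is much smaller.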

The opportunity, then, is to improve VM I/O handling. They note that IBM solved this problem back in the 80’s, so it is doable. The key is getting people to pay for the fix.

What about the other 8?

They aren’t deal killers. Yes, it will take a while to figure out data confidentiality and auditability, but there is nothing intrinsic that says it can’t be done.

The StorageMojo take

Is cloud computing viable? Of course. AWS claims 400,000 users; even if only 80,000 are active, per the 80/20 rule, commercial viability is a given.

Some think viability is an issue, especially for storage, reasoning from the Storage Networks and Enron debacles of the early 2000s. All that proved is that buying enterprise kit means enterprise costs: you can’t undersell the data center using their gear.

But Google and Amazon have shown that commodity-based, multi-thousand node scale-out clusters are capable of enterprise class availability and performance – at costs that “name brand” servers and storage can’t match. How much more proof do people need?

The issue is cognitive: the implicit assumption that cloud computing must compete with enterprise metrics. The funny thing is most enterprise systems and storage don’t need enterprise availability.

In the early 90’s Novell PC networks averaged around 70% availability – pathetic even in those days. Yet they spread like wildfire outside the glass house over IT opposition.

The lesson: if the reward is big enough the LOB will accept performance and availability far less than what they expect from IT. If they can get useful work done for 1/5th the cost they’ll accept some flakiness. Uptime is a means to an end – not an end in itself.
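To put the 70% Novell figure in perspective, availability translates directly into expected downtime. A minimal sketch, assuming a 365-day year and treating availability as the fraction of time the service is up:

```python
# Convert an availability fraction into expected downtime.
# Assumes a 365-day year; leap years and scheduled maintenance are ignored.

HOURS_PER_DAY = 24
HOURS_PER_YEAR = HOURS_PER_DAY * 365


def downtime_hours(availability: float, period_hours: float) -> float:
    """Expected hours of downtime over a period at the given availability."""
    if not 0.0 <= availability <= 1.0:
        raise ValueError("availability must be between 0 and 1")
    return (1.0 - availability) * period_hours


for availability in (0.70, 0.99, 0.999):
    per_day = downtime_hours(availability, HOURS_PER_DAY)
    per_year = downtime_hours(availability, HOURS_PER_YEAR)
    print(f"{availability:.1%}: {per_day:.2f} h/day, {per_year:,.0f} h/year")
```

At 70%, a network is down about 7.2 hours a day on average, which is why the figure was pathetic even then.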

In the near term, existing apps aren’t going to move to the cloud; the ones that move will be new apps that aren’t feasible with today’s enterprise cost structures. Longer term, 10+ years out, we’ll be surprised, just as the mainframe guys were in the 90’s.

The current economic crisis – the impoverishment of the industrialized world – means brutal cost pressures on IT for the next 5 years or more. Successful IT pros will help the LOB use cloud computing, even if it doesn’t provide “enterprise” availability and response times.

Courteous comments welcome, of course. One gem from the paper is a wake-up call for Cisco:

One estimate is that two-thirds of the cost of WAN bandwidth is the cost of the high-end routers, whereas only one-third is the fiber cost. Researchers are exploring simpler routers built from commodity components with centralized control as a low-cost alternative to the high-end distributed routers. If such technology were deployed by WAN providers, we could see WAN costs dropping more quickly than they have historically.

With respect to DDOS attacks, it is important to differentiate between two different classes of relationships between cloud service providers and their customers.

When a cloud service provider has a relationship with a small number of larger customers, it is economical to deploy technologies such as VPNs and specialized networking equipment, which can mitigate or eliminate the threat of DDOS attacks.

However, when a cloud service provider has a relationship with a large number of smaller customers, the cost of preventing DDOS attacks from significantly affecting their operations is very high.

Let’s look at a simple example. Say a storage service provider has several hundred large enterprises as customers. Because these are large accounts, the provider can use enterprise-grade VPN connections and install networking equipment that discards all traffic except that which originates from legitimate customers. Thus DDOS attacks can be stopped at the front door, at the network level.

Compare this to Amazon S3, where anyone on the Internet can be a customer. A DDOS that ties up CPU resources by performing bogus TLS authentication attempts could prevent legitimate customers from being able to perform transactions, and because there is little way to distinguish between a legitimate user and a DDOS botnet client, there is no easy way to stop the traffic before it consumes significant resources within the cloud authentication layer.

And when customer systems hosted on clouds are opened to the general public, as is the case with many EC2 hosted web applications, DDOS attacks against these customer systems are in many cases indistinguishable from the actions of regular users.

Having said this, it is important to emphasize that these issues are in no way specific to cloud-based deployments. The risks of DDOS are the same with in-house (non-cloud) infrastructure. But with usage-based charges for dynamic scaling, instead of just maxing out your in-house infrastructure, a cloud-based deployment might end up surprising you with a really large bill at the end of the month unless you put upper bounds on the elasticity of your account.
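One way to put upper bounds on elasticity, as the comment suggests, is to clamp the autoscaler’s target between a floor and a ceiling, where the ceiling comes from both a hard instance limit and an hourly budget. A minimal sketch: `ScalingPolicy` and its fields are hypothetical, not any cloud provider’s API, and the $0.10 instance-hour price is an assumption.

```python
import math
from dataclasses import dataclass


@dataclass
class ScalingPolicy:
    """Hypothetical autoscaling limits; not any cloud provider's actual API."""

    requests_per_instance: float    # sustained load one instance can serve
    min_instances: int              # floor kept running for normal traffic
    max_instances: int              # hard ceiling on elasticity
    hourly_budget: float            # most we are willing to spend per hour
    price_per_instance_hour: float  # assumed flat on-demand price

    def ceiling(self) -> int:
        """Largest fleet allowed by both the instance cap and the budget.

        If the budget cannot even cover min_instances, the floor wins.
        """
        affordable = math.floor(self.hourly_budget / self.price_per_instance_hour)
        return max(self.min_instances, min(self.max_instances, affordable))

    def target_instances(self, requests_per_sec: float) -> int:
        """Instances to run for the observed load, clamped to [floor, ceiling]."""
        wanted = math.ceil(requests_per_sec / self.requests_per_instance)
        return max(self.min_instances, min(wanted, self.ceiling()))

    def worst_case_hourly_bill(self) -> float:
        """Upper bound on hourly compute spend, whatever the traffic does."""
        return self.ceiling() * self.price_per_instance_hour


if __name__ == "__main__":
    policy = ScalingPolicy(
        requests_per_instance=500,
        min_instances=2,
        max_instances=200,
        hourly_budget=50.0,
        price_per_instance_hour=0.10,
    )
    print(policy.target_instances(10_000))   # 20
    print(policy.target_instances(500_000))  # 200, not the 1,000 the load asks for
```

Clamping trades availability for cost: once the ceiling is reached, excess traffic, legitimate or hostile, is served slowly or dropped rather than billed.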

Every 6 months or so there’s a rumour that Google is building its own high-end router, for example this one:

http://news.cnet.com/8301-1001_3-10137682-92.html

I can see it happening, but given that Cisco can cut their prices significantly if the situation demands it, Google can probably save more money by threatening Cisco rather than actually switching.

I suspect the most likely outcome is that someone builds this new low-cost router, Cisco buy the company, and start to produce a version themselves at a slightly lower cost than they currently build their high-end routers.

It would be interesting to see the user reaction to a 2-3 day outage of one of the AWS data centres. It’s obviously unlikely, but it will never be impossible. I’m sure Amazon could switch people over to another site, but it might take a long time, and I think it would be a big dent in cloud computing if it happened soon. In 5-10 years the outage would still be significant, but I don’t think it would provoke the same reaction, simply because by then people will consider clouds the norm rather than the risky new thing.

Robin,

I think you’ve hit the nail on the head – new applications which don’t require multiple 9’s of availability, or sub-millisecond latency, will use cloud services.

Just like less-reliable minicomputers took lots of market share from mainframes, and even-less-reliable PCs and commodity servers took lots of market share from minis and mainframes, cloud computing could take lots of market share from earlier technologies.

Also, I expect cloud computing providers and third parties to find ways to improve availability, and address the other problems. Remember what RAID did for commodity hard drives. A middleware provider could sell a way to host the same applications or storage on multiple cloud providers despite their base incompatibilities. Like JungleDisk, for example.
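The RAID analogy can be made concrete. A minimal sketch, assuming each provider fails independently of the others: a service replicated across providers is down only when all of them are down, so its unavailability is the product of each provider’s unavailability. As with RAID mirroring, correlated failures erode the gain.

```python
from typing import Iterable


def combined_availability(availabilities: Iterable[float]) -> float:
    """Probability that at least one replica is up.

    Assumes failures are independent, which is optimistic: providers can share
    outage causes such as software bugs, DNS problems or a common upstream network.
    """
    all_down = 1.0
    for a in availabilities:
        all_down *= 1.0 - a  # chance this provider is down too
    return 1.0 - all_down


if __name__ == "__main__":
    single = 0.99
    print(f"one provider:  {single:.4%}")
    print(f"two providers: {combined_availability([single, single]):.4%}")
```

Under the independence assumption, two 99% providers together approach 99.99%, which is the kind of improvement a multi-provider middleware layer would be selling.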

I’ve been pushing “dumb branch offices” instead of Cisco routers for several years now. In one case, buying “Ethernet extenders” with spares, cost less than one year’s Cisco router maintenance (aka “the Cisco tax”), with far less sys admin time. No need to wait for Google’s rumored high-end Cisco killer to save lots of money – you can keep the big Cisco routers/switches at the core of the network, and save big bucks on the branch offices.