Why do we focus on I/O? Because our architectures are all about moving data to the CPU. But why is that the model? Because of Turing and von Neumann?

Universal Turing Machines (UTM) have a fixed read/write head and a movable tape that stores data, instructions and results. Turing's work formed the mathematical basis for John von Neumann's First Draft of a Report on the EDVAC, which described the work of Eckert and Mauchly at Penn.

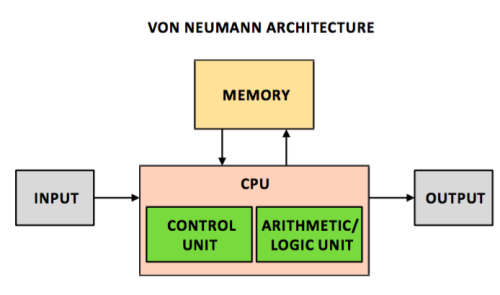

The von Neumann architecture looks like this:

Note the number of data paths in the von Neumann architecture: every instruction and every operand has to travel between memory and the CPU. In an era of Big Data – which is only going to get Bigger – I/O will continue to be a problem.

But is that the only way to build computers? No. Analog computers are older. Quantum computers are showing promise. But there’s another up and coming non-von Neumann architecture in the very early stages of development.

The paper Universal Memcomputing Machines, by Fabio L. Traversa and Massimiliano Di Ventra – physicists at UC San Diego – shows how memcomputers could be built, developing a model of computing based on memdevices.

What are memdevices?

For some time HP Labs has been promoting memristor storage as a solid-state alternative to flash. But memristors are just one member of the memdevice family, which includes memcapacitors, memristors and meminductors.

UC Berkeley's Leon Chua proposed the memristor back in 1971, but one wasn't built until 2008, by HP's xxxxxxx. Now Traversa and Di Ventra are looking at building a computer from memdevices.

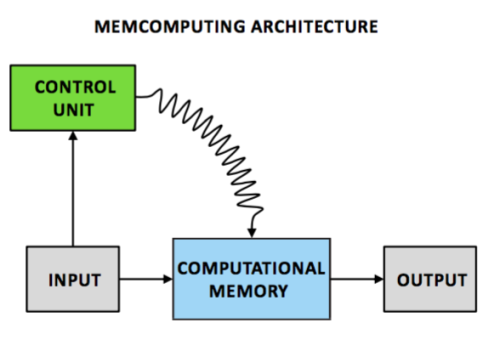

As the prefix mem suggests, memdevices have memory. So the data a processor is working on can be held in the device itself, rather than shuttled off to a cache or RAM.
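To make "memdevices have memory" concrete, here is a toy simulation of a single memristor using the linear ion-drift model that HP Labs researchers published for their device. It is a sketch, not a device model: the parameter values are illustrative assumptions, and the class `LinearDriftMemristor` and helper `run` are hypothetical names. What it shows is that resistance depends on the history of current through the device and stays put once the current stops.

```python
from dataclasses import dataclass


@dataclass
class LinearDriftMemristor:
    """Toy memristor, linear ion-drift model. Parameter values are illustrative assumptions."""
    r_on: float = 100.0      # ohms when fully doped (assumed)
    r_off: float = 16_000.0  # ohms when undoped (assumed)
    d: float = 10e-9         # device thickness in metres (assumed)
    mu_v: float = 1e-14      # dopant mobility in m^2/(V*s) (assumed)
    w: float = 1e-9          # doped-region width: the state the device "remembers"

    def resistance(self) -> float:
        # Two resistances in series, weighted by how much of the device is doped.
        x = self.w / self.d
        return self.r_on * x + self.r_off * (1.0 - x)

    def step(self, current: float, dt: float) -> float:
        """Advance the state by dt seconds at a given current; return the voltage across the device."""
        v = self.resistance() * current
        # Dopant boundary drifts in proportion to the current (linear drift).
        self.w += self.mu_v * self.r_on / self.d * current * dt
        self.w = min(max(self.w, 0.0), self.d)  # keep the boundary inside the device
        return v


def run(m: LinearDriftMemristor, current: float, seconds: float, dt: float = 1e-3) -> float:
    """Drive the device with a constant current (Euler steps); return the final resistance."""
    for _ in range(int(round(seconds / dt))):
        m.step(current, dt)
    return m.resistance()


if __name__ == "__main__":
    m = LinearDriftMemristor()
    print("initial resistance:", m.resistance())
    print("after 0.5 s at 100 uA:", run(m, 1e-4, 0.5))
    print("after 0.5 s at 0 A:", run(m, 0.0, 0.5))  # unchanged: the state persists
```

The last two lines are the point: a current pulse lowers the resistance, and with no current the new value simply stays, which is the stored state a memprocessor would compute with.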

Here’s their block diagram of the memprocessor:

Memprocessors exhibit some powerful features. They are inherently power efficient, since the data is co-resident with the processor. Other key features include:

- Intrinsic parallelism: they operate concurrently and collectively during computation, reducing the number of steps required for some problems.

- Functional polymorphism: different functions can be computed without changing the machine’s network topology.

- Information overhead: an interacting memprocessor network can store more information than the same number of non-interacting memprocessors.

Of the latter, the authors say they

. . . show how a linear number of interconnected memprocessors can store and compress an amount of data that grows even exponentially.
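The counting behind that claim can be illustrated on an ordinary computer with subset sums, the basis of a standard NP-complete problem: n stored numbers collectively define 2^n subset sums. The sketch below (the function `all_subset_sums` is a hypothetical name) has to enumerate those sums one by one, so its output grows exponentially with n. The memcomputing claim is that an interacting network of n memprocessors holds this kind of collective information without that blowup; this code does not model a memprocessor.

```python
from itertools import combinations


def all_subset_sums(values):
    """Return the sum of every subset of `values`, including the empty subset (2**n entries)."""
    return [sum(combo)
            for k in range(len(values) + 1)
            for combo in combinations(values, k)]


if __name__ == "__main__":
    # n inputs, but 2**n collective values to write down explicitly.
    for n in (4, 8, 16):
        values = list(range(1, n + 1))
        print(n, "inputs ->", len(all_subset_sums(values)), "subset sums")
```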

Furthermore, they say this interconnectedness has important similarities to how our brain's neurons operate, which is a major difference between UTMs and memcomputers. The authors define a Universal Memcomputing Machine (UMM) and show that

. . . in view of its intrinsic parallelism, functional polymorphism and information overhead, we prove that a UMM is able to solve Non-deterministic Polynomial (NP) problems in polynomial (P) time.

Since the UMM isn't a Turing or von Neumann machine, this finding doesn't settle the P=NP problem, which is posed in terms of Turing machines. But it does point to a powerful advantage for memcomputing.
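For contrast, here is roughly what an NP problem costs on a conventional machine. The brute-force subset-sum search below (the function `subset_sum_brute_force` is a hypothetical name) tries subsets one at a time and, in the worst case, checks all 2^n of them. It illustrates the exponential search the UMM result claims to avoid; it says nothing about how a memcomputer would actually do it.

```python
from itertools import combinations


def subset_sum_brute_force(values, target):
    """Return (subset, subsets_checked); subset is None if no subset sums to target."""
    checked = 0
    # Try subsets in order of size; the worst case visits all 2**n of them.
    for k in range(len(values) + 1):
        for combo in combinations(values, k):
            checked += 1
            if sum(combo) == target:
                return list(combo), checked
    return None, checked


if __name__ == "__main__":
    values = [3, 34, 4, 12, 5, 2]
    print(subset_sum_brute_force(values, 9))     # found after a few subsets
    print(subset_sum_brute_force(values, 1000))  # no answer: every subset checked
```

Adding one more number to `values` doubles the worst-case work, which is why data growth and NP problems are such a poor fit for step-by-step machines.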

The StorageMojo take

Don't expect memcomputers to hit the market any time soon. But they raise the question of whether, and how, current processors will be able to deal with massively expanding data sets.

Moving data is expensive. Memcomputing may change that in some very useful ways.

Courteous comments welcome, of course.

Eckert & Mauchly complained bitterly of credit-hogging by von Neumann, but they themselves “borrowed” liberally from Atanasoff.

This sounds a lot like a coprocessor that has been built into Air Traffic Control systems for a really long time, see…

https://www.computer.org/csdl/proceedings/afips/1971/5077/00/50770049.pdf

Memcomputing has the potential to be revolutionary and disruptive. In many ways it's similar to how neurons in our brain store and process information, much more efficiently than our computers and devices do today.

https://www.linkedin.com/pulse/4th-industrial-revolution-raj-gupta