OK, it is still a pyramid

Predictions of the storage array’s death struck some commenters as premature. Commenters raised a host of issues:

- Cost. Low-end storage arrays are cheaper than clusters.

- Complexity. The complexity of clustered hardware – all those cables and boxes – increases management costs.

- Functionality. “Unless the cluster storage also provides the same reliability, scalability, and supportability as the larger monolithic arrays. . . ” it won’t supplant traditional arrays.

- Cost pt. II: Lower-cost modular arrays, combined with a software layer that knits them into a seamless whole, could provide a full-service storage infrastructure complementing today’s virtual servers.

History repeats itself

The issues are similar to the mainframe vs everybody arguments of the last 40 years. Within living memory mainframes from IBM and the 7 dwarfs – Burroughs, Sperry Rand, NCR, RCA, Honeywell, CDC and GE – went through the same process monolithic storage arrays will.

Mainframes faced the same negatives: costly; complex management; inflexible; limited applications; and optimized for batch computing in an interactive world. Proponents argued the positives: reliability; scalability; efficiency; security; and control.

Reinventing the wheel – without end

Mainframes were expensive because they a) were low-volume products and b) had high (60%+) gross margins. Each mainframe architecture had its own processors, peripheral interconnects, networks, OS, application software and sales and support groups.

Every mainframe company had to solve all the problems every other mainframe company did – at enormous cost.

Mainframes today

Mainframes are far from dead, but they are very different today. There are fewer vendors; they use commodity processors, networks and interconnects; run open source software such as Linux; and adjusted for inflation they are much cheaper.

That is the future of big monolithic arrays.

Monolithic arrays tomorrow

That we still have so many large arrays and vendors is because the vendors have already gone far down the mainframe path. Commodity server motherboards, Linux, SATA drives and Xyratex enclosures are all common in high-end arrays, helping cut costs.

But at a fast approaching point, cutting costs isn’t enough. Vendors have to give customers good reasons to keep buying the big iron. The traditional mantra of availability, performance, scalability and supportability won’t hold customers forever.

Why?

Moore’s Law keeps moving the tiers

The industry has been pushing tiered storage in multiple guises for decades: HSM; ILM; and now, cloud storage. But customers embrace tiers out of necessity, not love.

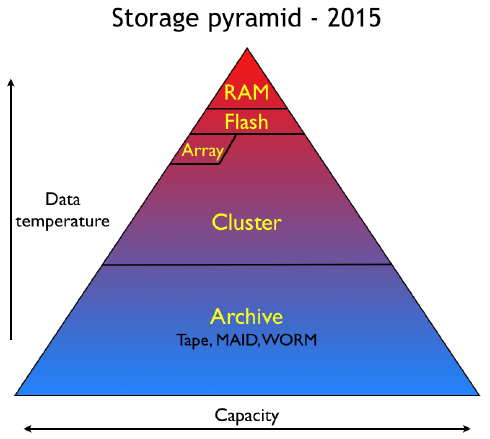

The powerful visual picture of the layer-cake storage pyramid is deceptive. The x and y axes are cost and capacity, but they are only proxies for the application requirements of the layer above.

Array vendors want to believe that there will always be an “array layer” in the storage pyramid. But why should there be?

As Moore’s Law keeps moving commodity server performance up, the performance envelope of commodity-based storage systems will enlarge. With the commoditization of 10GigE, flash, 6 and 12 Gbit SAS and a 10x increase in areal density, the bandwidth to exploit higher CPU performance will push today’s “archive” cluster storage into monolithic array territory. At a lower price, too.

The software that ties commodity hardware together will improve, weakening the availability argument. If performance is bandwidth driven, pNFS will close the deal for clusters. Scalability goes to clusters today and will only improve with time. Supportability isn’t owned by hardware companies – plenty of software-only companies have cracked the code.

Here’s the future storage pyramid

Won’t arrays disappear?

No, but they will change. For example, they’ll look a lot more like cluster storage under the sheetmetal and GUI. Flash will be an integral part of the architecture – and not as a disk drive. There will be less add-on software, because more will be built in.

Arrays will continue to support legacy interconnects, such as FC and FCoE – remember, this is the future we’re talking about – and legacy OSes that commodity-based storage won’t. Storage is a conservative part of IT and arrays won’t disappear.

The StorageMojo take

I was at DEC when the company was growing fat selling VAXen. Many predicted that PCs would be the death of the minicomputer companies, but it took 8 years to hit DEC.

There is life after arrays. Minicomputers still exist – and are selling more than ever – but the business model is totally different. The loss of 30 gross margin points forced the issue.

Storage requirements will keep growing. But the days of 60%+ gross margins are drawing to a close. Survivors will follow classic military strategy: concentration of force; short supply chains; and clear objectives.

Courteous comments welcome, of course.

I can’t help but think some of the differentiation between storage clusters and arrays as presented here is a little bit artificial. Many organisations would be extremely happy if they were just presented with a “black box” which provided certain standardised storage services with known and predictable service levels. It doesn’t greatly matter to them how it is organised internally, but for sure they will want it to be very easy to set up, manage, expand and so on. If it turns out that what underlies this is commodity hardware, servers, operating systems and software then fine – but I still think that’s a storage array. Perhaps we ought to adopt the term “storage appliance” to avoid religious wars over what constitutes an array. Then it just becomes a matter of how this “storage appliance” is built internally, which may well be using what we think of as “storage clusters” – which I would just define as a storage architecture that is largely defined by a software layer unifying the storage functionality over a number of discrete nodes.

I still see a need for some hardware packaging which will make this rather different to just putting a few discrete servers into a rack and calling it an appliance. However, it’s very easy to see that there are certain hardware packaging techniques, such as the rumoured new ways of using future blade enclosures (for example, building very large scale SMPs with cross-blade NUMA memory), which could mean a storage array could be built out of many of the same building blocks as compute farms. Of course some of these techniques aren’t completely new – but they have been hamstrung by the lack of sufficiently high-speed, standardised, cheap data interfaces.

For the true low-end – no doubt the software and processing will be similar, but there’s still a lot of value in optimised hardware design. After all, it’s perfectly possible to run Linux on a PC as a home router, but how much more convenient to buy a small, low-powered dedicated box, optimised for the purpose, with a tenth of the size and power consumption. That it runs the same basic software as the full sized Linux router hardly matters to the user – it is the packaging and the user interface that matter.

So arrays won’t go away, and neither do I believe that they will lose huge market share to “storage clusters” unless we redefine an array as a storage cluster because of the way it is built (in which case, why isn’t a NetApp a storage cluster?). They will just adapt and commoditise – the major storage vendors (at least if they have their wits about them) will adapt. DEC failed as they got fat and lazy on over-priced and slow VAX. IBM almost went the same way on mainframes.

I agree with Steve.

There’s a bit of an apples & oranges confusion in your pyramid. A clearer story would show 2 pyramids: one focused on media – RAM, flash, disk drive (2 levels?) – and the other focused on black box solutions.

The problem with Big Storage is not the internals, but the vendors’ insistence on selling at high margins. Take any commodity-based storage (or for that matter compute) system, and any competent designer will probably find several areas where judicious application of some more specialized hardware will make it just a bit better than competitors – even if it’s just power and cooling to support higher density. Look under the covers of any big system and you’ll probably find something that’s about 90% familiar already, which is *not* like the mainframes of old which were almost entirely custom. In other words, storage has already gone through most of the transformation you’re predicting.

“Availability, performance, scalability and supportability” aren’t just gravy. They’re the main course. It actually does take expertise to put together commodity parts to meet these goals. That’s expertise a few customers have, but most don’t, and in any case IT-staff time has value. If knowledge about how to make and maintain a scalable system is represented in the design of a product, then a rational customer would pay up to the equivalent value of IT-staff time to get it even if the physical components are all available off the shelf. Arrays won’t go away until every customer is an expert on optimizing performance and availability for a system with multiple tiers, dozens of ports and hundreds of spindles. Google and LLNL can do better by hiring their own experts, but they’re not typical customers.

One thing missing from this analysis is just what the future role of flash will be. As with most new technologies, flash is being used to re-implement existing technologies: The first industrial electric motors simply replaced the waterwheel that drove the shafts and belts that provided power throughout a factory. In fact, the actual characteristics of flash memory make it a pretty poor replacement for a disk. You have to play all kinds of games to make flash memory look like a disk. Then you implement complex protocols designed to make talking to a disk efficient. Then the OS and the application play all sorts of games in an attempt to make efficient use of a disk.

Of course, all this makes perfect sense because we really only have three well-defined, widely implemented, storage interfaces today: Main memory, disks, and tapes. Flash is closest to disk, so of course we initially pay the costs of using it as disk. But is this what will make sense in the long run? Certainly not. It will take some time, but we’ll eventually figure out the right abstractions for presenting flash to programs. It will almost certainly look much more like main memory than disks do – but it will have all kinds of special characteristics of its own.

Once that happens, the pyramid changes. Flash is physically small, doesn’t use all that much power, and will be very fast. It’s likely to be physically interconnected to CPUs using technologies much more like today’s memory busses than today’s disk interfaces. One possible future: Flash moves into the box with the CPUs. Arrays today allow sharing, but most sharing is by only a few programs. With virtualization, if the flash is only directly accessible to a fraction of the CPUs, it may make sense to move the programs to the data, reshuffling the data to other clusters of CPU and flash as required. Disks remain outside of the CPU/flash combo, in big arrays/cluster storage boxes (the distinction fades away), which now concentrate on bulk storage, not on speed – or, more to the point, on speed for big transfers, since all “small” stuff takes place in flash.

Exactly what systems like this will look like isn’t clear, but if they’re at all like this, one can make some obvious predictions. For example, the market for 10K and 15K RPM disk drives disappears – their huge extra cost becomes unjustifiable when flash takes over speed-critical roles. The old vision of RAID – using inexpensive disks and using redundancy for reliability – becomes more interesting again. (Of course, the cluster approach of geographic redundancy just takes this an extra step further.)

Robin,

What I think is missing in your picture is backup/restore. The storage part has a clean interface – anybody can do read and write. Where it gets messy is snapshot, replication and other data security features. They bind to OSes and applications in very specific ways, to the extent that a change of array implies redesigning, rescripting and retesting these pesky but unavoidable issues.

Since a true and open standard to solve these integration issues is not even in the works, the alternative is to solve all data security issues at the OS and application level. Microsoft is doing just that, with recent additions in Windows, SQL Server, Exchange and Data Protection Manager. With such tools, storage becomes just a pool of r/w blocks, organised in volumes. Commodity pure and simple.