Imagine cloud storage that didn’t cost much more than bare drives. High density storage with RAID 6 protection, reasonable bandwidth and web-friendly HTTPS access.

And really, really cheap.

Raw disk cost is only 5-10% of a RAID system’s cost. The rest goes for corporate jets, sales commissions, 3 martini lunches, tradeshows, sheetmetal, 2 Intel x86 mobos, obscene profits and a few pale and blinking engineers in a windowless lab who make the whole thing work.

Storage for ascetics

But let’s say you didn’t want the 3 martini lunch or the barely-clad booth babes. All you want is really cheap, reasonably reliable storage.

You aren’t running the global financial system – what’s left of it anyway – and you don’t have a 2500 person call center hammering on a few dozen Oracle databases 7 x 24. No, you’re thinking a quiet cloud storage business for SMB’s, maybe backup and some light file sharing, that will give you a nifty little revenue stream with annual renewals so you can see trouble coming 12 months in advance.

Enough redundancy so when something breaks you can wait until morning to fix it instead of an 0300 pajama run to the data center. Easy connectivity so you aren’t blowing the savings on Cisco switches.

Bliss

Well, you aren’t the only one. Backblaze, a new online backup provider, designed the Storage Pod for their own use and are sharing it with everyone. They aren’t in the hardware business and I think they figured sharing it would be a nice little attention-getting device.

It worked for me. Here’s the box – which they are using in production.

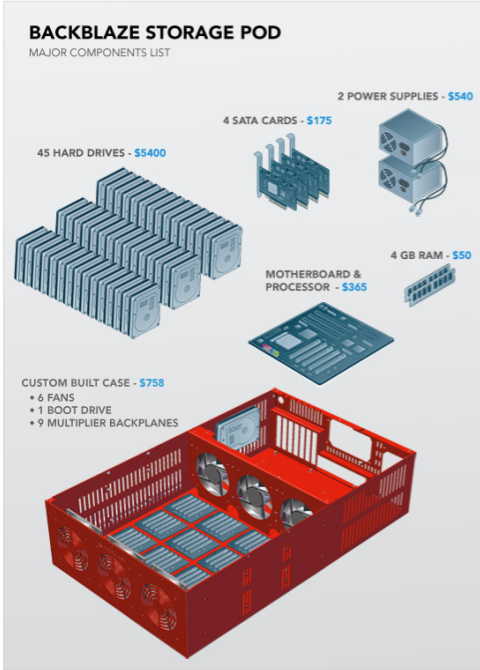

Here’s an exploded diagram with a simplified BOM:

And then there’s the (free) software. 64-bit Debian Linux, IBM’s open source JFS file system and HTTPS access. Put a stateless webserving front end on it and you’re good to go. Scale out the webserver and add Storage Pods to grow the system.

The StorageMojo take

This isn’t general purpose or high-performance storage. Nor is it backed by a global network of 7 x 24 service professionals. But there are a lot of applications out there that just need a big bit bucket. This is it.

No one is manufacturing this for you either – which is a good thing. If you don’t know what you’re doing you can get in a lot of trouble with a lot of data real fast. Want to be the Bernie Madoff of cloud storage?

But the density is good, the performance is reasonable, the availability is decent and the price is right. This is a DC-3, not a 747. It is all you need for the right application.

Courteous comments welcome, of course. See the plans and get the box model in the paper Backblaze put together.

Oh man. I look at that box and I think “hot”. How does it cool all of those hard drives? It looks like there’s a small rail that might go between the drives, so maybe that’s how, but it seems…light, you know?

Otherwise, it’s an impressive amount of storage in just a few rack units of space.

Even a DC-3 can fly with only one engine: if one of the PSUs on this puppy goes you’re SOL. You’d have to ensure that any data is accessible on (at least) a second unit to be safe. I think that’s the main ‘deficiency’ of this design from a hardware perspective.

I’d also be curious to know if anyone sells generic rackable enclosures that could fit this. Appendix A says it was a custom design.

Lastly, anyone want to try ZFS on this? 🙂

The price is great, but I disagree with your “enough redundancy” claim. With only one motherboard, one boot drive and non-redundant power supplies, all of them are single points of complete failure. Also, failure of any of the SATA cards takes out at least 10 drives, so with 3 RAID6 strings that means at least one of them will be unavailable. The only real redundant component you have is drives.

Sleeping through the night isn’t a sure bet.

David, Charlie,

Well, if you must, mirror 2 pods. Then it costs $200/TB. Or triple mirror for $300/TB. You can afford a lot of redundancy at $100/TB.

In any massive array drive failures are the #1 issue. Power supplies are probably #2. Once the electronics burn in they are usually good for 5-10 years.

This is about as robust as what Google uses. Not for everyone, but usable for the right apps.

Robin

Like Matt Simmons, we expected heat to be one of our biggest challenges but that has not been the case. 120mm fans move a lot of air and the enclosure keeps the drives within specs with just 1 out of 6 fans running….. Here are the drive temps from our SMART monitor on each drive in a heavily loaded server: /dev/hda: 37°C, /dev/sda: 29°C, /dev/sdb: 31°C, /dev/sdc: 30°C, /dev/sdd: 30°C, /dev/sde: 30°C, /dev/sdf: 32°C, /dev/sdg: 35°C, /dev/sdh: 35°C, /dev/sdi: 34°C, /dev/sdj: 32°C, /dev/sdk: 36°C, /dev/sdl: 38°C, /dev/sdm: 38°C, /dev/sdn: 38°C, /dev/sdo: 34°C, /dev/sdp: 30°C, /dev/sdq: 31°C, /dev/sdr: 33°C, /dev/sds: 34°C, /dev/sdt: 33°C, /dev/sdu: 34°C, /dev/sdv: 35°C, /dev/sdw: 36°C, /dev/sdx: 37°C, /dev/sdy: 36°C, /dev/sdz: 34°C, /dev/sdaa: 34°C, /dev/sdab: 34°C, /dev/sdac: 34°C, /dev/sdad: 33°C, /dev/sdae: 36°C, /dev/sdaf: 38°C, /dev/sdag: 38°C, /dev/sdah: 38°C, /dev/sdai: 35°C, /dev/sdaj: 35°C, /dev/sdak: 34°C, /dev/sdal: 35°C, /dev/sdam: 36°C, /dev/sdan: 35°C, /dev/sdao: 35°C, /dev/sdap: 37°C, /dev/sdaq: 38°C, /dev/sdar: 37°C, /dev/sdas: 37°C.
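For anyone who wants to watch readings like these on their own box, here is a minimal sketch of summarizing text in the `/dev/sdX: NN°C` form quoted above. The function name and the four-reading sample are illustrative only; this is not Backblaze's monitoring code.

```python
import re

def parse_drive_temps(text):
    """Return {device: temperature in °C} from text like '/dev/sda: 29°C'."""
    pattern = re.compile(r"(/dev/\w+):\s*(\d+)\s*°C")
    return {dev: int(temp) for dev, temp in pattern.findall(text)}

# First four readings copied from the comment above.
sample = "/dev/hda: 37°C, /dev/sda: 29°C, /dev/sdb: 31°C, /dev/sdc: 30°C"

temps = parse_drive_temps(sample)
hottest = max(temps, key=temps.get)
print(f"{len(temps)} drives, hottest is {hottest} at {temps[hottest]}°C")
```

Feeding it the full list instead of the sample would give the spread across all 46 devices.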

With regard to David Magda’s comment on redundant power, it’s easy to swap out the 2 PSUs in the current design with a 3+1 redundant unit. This adds a couple hundred dollars to the cost and since we built redundancy into our software layer we don’t need it. Our goal was dumb hardware, smart software.

And finally, no one sells an enclosure like this…. At least not that we could find. That’s why we designed our own and included a link to download the full 3D SolidWorks files on the blog post! Companies like Protocase will make you a case based on these drawings in quantities as small as one so you really can build one of these servers if you want 🙂

For comparison, if you want to purchase something pre-assembled with a 5 year warranty… we just got a 48 bay server (dual 2.8 xeon, 24 GB of RAM, Hardware RAID6) from Aberdeen Inc. for about $12,000. We’re populating it with WD RE4-GP 2 TB enterprise drives ($300) for a total raw capacity of 96 TB. The cost per gigabyte comes to about $0.28 (roughly $275 per terabyte), almost three times as much as the Backblaze solution, but still way cheaper than our NetApp.
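A quick check of that arithmetic, as a sketch. The helper name is made up for illustration, the inputs are the figures quoted in the comment, and 1 TB is taken as 1000 GB.

```python
def cost_per_tb(chassis_usd, drive_usd, drive_count, drive_tb):
    """Raw cost per terabyte for a chassis fully populated with identical drives."""
    total_usd = chassis_usd + drive_usd * drive_count
    raw_tb = drive_count * drive_tb
    return total_usd / raw_tb

# Figures from the comment: ~$12,000 server, 48 bays, 2 TB drives at $300 each.
aberdeen = cost_per_tb(12_000, 300, 48, 2)
print(f"${aberdeen:.0f}/TB raw, or ${aberdeen / 1000:.3f}/GB")
```

This covers raw capacity only; RAID 6 parity and file system overhead would push the usable cost per terabyte higher.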

One major difference is density. The server we have is 8U. We don’t have space constraints in our server room so this isn’t an issue for us. Replacing disks is easier as the bays are front mounted. No need to slide the chassis out.

The pinch-point is the case. Where can you source one of these? I’d very much like to try building one, to have as secondary storage for backup purposes.

When you say reasonable bandwidth, I am just guessing here but you’re going through probably a 1Gb ethernet port on the motherboard?

Also, I think someone using a similar solution in Los Angeles recently made headlines:

http://www.cbc.ca/world/story/2009/09/01/los-angeles-fire-california958.html

Robin, I realize that you’re being sarcastic, but this talk about “3-martini lunches” is an insult to storage engineers everywhere. Firmware is expensive and not everyone can use Linux/Solaris.

It would be interesting to apply the same whitebox (er, redbox?) approach to HA general-purpose primary storage and see where the cost comes out.

Wes, actually, I’m not being sarcastic. For vendor hardware arrays, R&D is typically on the order of 10-15% of total product cost. SG&A – commissions, 3 martini lunches, corporate brass, etc. – is typically 2-3x R&D. Check this EMC 10Q report.

2 points.

– I have a lot of sympathy for engineers. They do a lot of painstaking work, put years of their lives into a project and all too often it tanks because the marketing sucks.

– I do not begrudge the high-performance/availability arrays their margins or their prices. What does burn me is when vendors sell highly capable & costly storage into apps that don’t require it just because they can. Some apps require EMC’s or IBM’s finest storage. Great!

But the secular trend to cooler data means new storage models are needed for storing massive amounts of cool data. Encouraging and analyzing that trend is one of the reasons I founded StorageMojo.

Robin

This class of solution has its place. The main place for it is data centers where, by design, failing at this scale is not an availability concern. For example, supposing as Robin said that you replicated your data on systems like this, and had some kind of software glue that counted the minimum required number of replicates, triggering off some software to make more replicates for files that go below a certain threshold. This software glue is in-house stuff for quite a number of the largest of the world’s web shops. You are now beginning to see that glue start to be sold as “cloud storage”.
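The replica-counting glue described above can be sketched in a few lines. This is a toy model under stated assumptions, not any vendor's implementation: the function name, the pod and file names, and the in-memory map are all hypothetical, and a real system would also have to handle placement policy, throttling and verification.

```python
def plan_repairs(replica_map, healthy_pods, min_replicas=2):
    """Decide which files need new copies after pod failures.

    replica_map:  {file name: set of pods holding a copy}
    healthy_pods: set of pods currently reachable
    Returns (repairs, lost): repairs maps each under-replicated file to the
    pods that should receive a new copy; lost lists files with no live copy.
    """
    repairs, lost = {}, []
    for name, pods in sorted(replica_map.items()):
        live = pods & healthy_pods
        missing = min_replicas - len(live)
        if missing <= 0:
            continue  # still at or above the threshold
        if not live:
            lost.append(name)  # nothing left to copy from
            continue
        # Pick targets deterministically from healthy pods without a copy.
        candidates = sorted(healthy_pods - live)
        repairs[name] = candidates[:missing]
    return repairs, lost

# Hypothetical cluster: pod2 has just died.
replica_map = {
    "a.dat": {"pod1", "pod2"},
    "b.dat": {"pod2", "pod3"},
    "c.dat": {"pod2"},
}
repairs, lost = plan_repairs(replica_map, healthy_pods={"pod1", "pod3", "pod4"})
print(repairs)
print(lost)
```

The point of the design is that a dead pod triggers copying in software rather than a trip to the data center; the failed hardware can wait for the next scheduled maintenance visit.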

Architectures built to tolerate failures at this level have significant advantages. The most obvious of the advantages is the maintenance approach–one schedules maintenance on the data center quarterly, instead of running around and hot fixing things that have failed, like power supplies, or disks in RAID sets (indeed, usually one is avoiding RAID at all).

Enterprise versions of the software that do roughly what I described, and might leverage this sort of hardware include EMC Maui, ByCast StorageGrid, Caringo CAStor, and Parascale. EMC’s Atmos platform is a full turnkey version of the same, with its own (not quite so dense) hardware, having the advantage of being appliance-like, and with one throat to choke.

Joe.

Upfront cost isn’t the whole story. Profit margins are tight in the online world and the total cost of ownership of infrastructure is a big chunk of costs. I would be interested to know the power and cooling costs per Petabyte (mirrored of course because of the single points of failure).

Disclosure. I work for a storage company with extremely low power and cooling costs per PB

I stand corrected; thanks for the data. Given all that fat, one wonders why it persists. Why hasn’t EqualLogic taken over the world?

Yes, you could run (stable) ZFS on such an enclosure via NexentaStor, improving data integrity and adding management capabilities. More likely you would run it and our API on a gateway and use this as a JBOD. Actually the price of our partners’ hardware is similar, and the support and quality are likely higher.

Actually you could buy a solution at similar economics without having to build your own storage software and hardware.

0. Ewan: Or just put an OpenSolaris 2009.06 on it …

1. I think this baby cries out for ZFS on these devices to protect the data against all the bad things that can and will happen in such a large population of disks. JFS has no checksums for data.

2. On the hardware: A nice poor man’s Thumper 😉 Do you have any problems with vibration? It doesn’t look like you use a drive bracket, and each disk seems to be held at only half its height?

Well, if you are going to use a free OS why not use the Linux user land of http://www.nexenta.org which also has the ZFS file system? OpenSolaris is powerful however unstable, costly to manage, and is ‘just’ an OS, not a storage management solution.

For not that much more than the hardware listed, you can buy a complete unified storage solution from Nexenta partners.

For example, we just had a partner sell an active / active 96TB system for ~$45,000 and that includes fiber, NFS, CIFS, iSCSI out the front, a useful UI and console, an industry leading module for the management of storage for virtualized environments, synchronous (non ZFS) plus async replication (ZFS based and hardened with transport and fault management), and optional Windows backup clients that integrate with VSS. You can try NexentaStor for free at http://www.nexenta.com/freetrial

Oh … unstable … I haven’t had such problems so far … and most of my systems are running OpenSolaris 😉 Costly to manage? What’s costly about using some simple commands in bash? Sorry … I hope you know whom you are talking to at the moment, and I’m sure you will understand why I have another opinion, although I think Nexenta is a nice piece of tech. By the way … please contact me by mail, it’s on my blog … I have a strong opinion about some of your sales tactics that I won’t discuss in public.

You can use ParaScale to glue the boxes together. ParaScale is a software only solution (free 4TB license available at http://www.parascale.com) that can be installed on individual Storage Pods. The software will aggregate all the storage within the Storage Pods and present it to you as a single pool.

Several advantages of doing that –

* It takes care of the “enough redundancy” problem. With a 2 or more replication policy for data, even if one entire box goes down due to say a power supply failure, your data is still accessible.

* You build a scalable cloud storage solution that can be accessed using standard access protocols. The software will take care of load and capacity balancing among Storage Pods.

* It takes care of the performance problem. If one box gives reasonable performance, aggregating several boxes together will only improve performance as the software does not bottleneck data.

* You can mix and match hardware, and so your solution can consist of some Storage Pods with some older Dells/Supermicro etc. A true heterogeneous cloud. And with better designs of Storage Pods coming in the future, you can add them and retire older hardware, doing all this while keeping your data intact.

>>> Robin, I realize that you’re being sarcastic, but this talk about “3-martini lunches” is an insult to storage engineers everywhere. Firmware is expensive and not everyone can use Linux/Solaris.

“3-martini lunches” is standard practice in some shops.

When I was in software I saw cases where sales were made by fast-talking high flyers on all-you-can-eat credit cards in strip clubs, with promises of deadlines and performance coming out the wazoo, while the honest small software shop with realistic promises and no big entertainment budget came in 3 years later to clean up the high flyer’s mess.

You’d think the software buyers of the world would get a clue, but my contacts in SW say the same stuff is still going on.

More relevant to this topic, and I hope this is not a totally clueless newbie question: why so many fans in the individual units?

If you have the whole rack to play with, why not perforate the tops & bottoms and have one HUGE AC fan at the bottom?

You might even fit in one more port multiplier per case without the 6 fans

“Chimney cooling” tends to bake the stuff at the top of the rack, since the air just gets hotter and hotter as it rises.

Robin/backblaze:

The number 1 failed component in storage arrays is hard drives. Unless I’m missing something, the drives in these setups are nothing remotely close to resembling hot swappable. It looks like the servers themselves sit on shelves rather than using rail-kits that would allow them to be pulled from the rack. The drives appear to have absolutely no caddy, making removal a chore at best.

@Evan:

Unless Nexenta has GREATLY enhanced driver support recently, you don’t even support port multipliers, just like opensolaris hasn’t (until the end of last month). So no, a “nexenta CD” wouldn’t work on the backblaze setup.

Either prove me wrong, or learn your own product… If I’m right, it’s pretty sad the CEO and VP of marketing doesn’t even know what he’s trying to pimp.

>> Unless I’m missing something, the drives in these setups are nothing remotely close to resembling hot swappable.

SATA drives under Linux can be easily swapped out of a running system. I’ve done it on standard desktop systems with zero advertised hot swap capability.

Under XP I’ve heard horror stories, and had XP crash when I tried it but SATA drives definitely do show up under “safely remove hardware”

>>> It looks like the servers themselves sit on shelves rather than using rail-kits

The pictures I saw had rails

>>> “Chimney cooling” tends to bake the stuff at the top of the rack

I figured this is one rationale …

Except that a big AC fan will move FAR more air, and develop more than enough head to push it through 20 sets of perforated sheets than 6 tiny 8″ DC fans.

Heck, put a 2nd big AC at the rack’s top exit

“Unless I’m missing something, the drives in these setups are nothing remotely close to resembling hot swappable.”

You are missing something, Tim. There are enterprises that are allergic to “swapping” anything, because the act of needing to swap (say, when a drive fails in a raid set) requires staff be present to attend to the matter on a timely basis. This is just not acceptable to these sorts of enterprises, because they can by no means afford the staff to do this. Their data centers are entirely LIGHTS OUT. Service occurs only once a quarter or so. Attending to RAID rebuilds just is not part of the business model.

So what they are doing is JBOD only. If a disk fails, they let it STAY failed. Replication attends to the problem instead of RAID. If a large enough number of drives fail in a single unit, they just throw out the whole thing. They may or may not even scavenge the working drives out of the system at that point, depending on their recap strategy.

Anyway, since these enterprises are allergic to swapping stuff, you might see how they’d be allergic to paying for hot swappable components. In many cases, they don’t want to pay to be notified that a drive has failed, either. I know of at least one enterprise that’s so tightwad about components they don’t want, they’ve refused to pay for the power switch (and its 1W of circuitry) in their custom designed drive assembly. And so on and so forth.

The thing to keep in mind here is that these systems are purpose built for a scale that beggars one’s imagination.

Joe Kraska

San Diego CA

USA

So many things could go wrong with this model. Excess heat in the back of the unit and rotational vibrations come to mind right off the bat.

My favorite part of systems like these, do you have any idea how much this thing will weigh with 45 drives in it? Both Nexsan and Dell|Equallogic recommend lower rack installations, and floor secured racks.

How have you been?

Nice. It highlights that server storage has not commoditised the way servers did 15 years ago.

This solution however is for the very technically competent D.I.Y enthusiast. Anything D.I.Y usually leaves a hole behind when the person that built it, loved it, happens to leave the building (very flippant look at DIY storage here http://www.matrixstore.net/2009/05/21/diy-good-or-bad-short-video/). Saying that I am all for this sort of solution when used in the right context.

One of our customers asked us if we can run MatrixStore on a cluster of these things. Food for thought ….

I wonder what your IO speeds are. With a shared bus and only 6 true sata connectors, and then placing HTTP on top of this shared bus it has to be slow. Yes, probably perfect for your storage needs but many need more and faster raw disk access.

I wonder, on the backblaze blog you mentioned several prices. Why didn’t you price out HP’s LeftHand Networks product?

“One of our customers asked us if we can run MatrixStore on a cluster of these things. Food for thought ….”

A tour of the MatrixStore website produced:

“A MatrixStore license cost $1000 for every Terabyte of assets you need to store.”

OUCH!!!! That’s $1,000,000 per PB, man! One can buy turnkey, fully assembled storage appliances for less than that!

I suggest you look into some of the new cloud storage providers, like Parascale or Caringo. If one is looking at large scale deployments, I suggest EMC Atmos or ByCast Storage Grid.

Joe Kraska

San Diego CA

USA

“Excess heat in the back of the unit and rotational vibrations come to mind right off the bat.”

I recall long conversations with COPAN, who has storage systems about as dense as they get. They were very concerned in the initial stages of their design about vibration. What they discovered as they built their prototypes, however, is something that sort of seems obvious in retrospect: drives are very heavy. Vibration was by no means a problem, and I’m doubting it would be a problem with this system, either.

Joe Kraska

San Diego CA

USA

Um, I see my comments on NexentaStor I guess sounded like an attack. I’m not a pimping person 🙂

For the record – yes try open source hardware, try it all, the future of storage is openness just like in other domains of IT.

I have no idea whether NexentaStor would run on this particular piece of hardware. However yes you can combine interfaces and multi-path and can get excellent performance on industry standard hardware in general AND get the full legacy storage experience such as call home so that when your drives fail a replacement drive can appear magically. My point is that you don’t have to build your own hardware and your own software to have similar benefits in terms of openness and savings. But some users WILL absolutely want to build their own and that’s great as well.

Regarding Parascale — I guess I see a proprietary distributed file system as not a fully open approach (although it is hardware independent). pNFS is coming (as we’ve all been saying for years — but this time it really is) and an open approach will trump the closed eventually. On the other hand, I see value in what Parascale is doing around the file system so perhaps they could use pNFS when it emerges?

Hi Joe,

Either it was indeed a quick tour, or our website needs to better reflect what you are getting for your money. The $1000 is for the Mac OS version, which is for organisations who wish to re-use the Xserve RAIDs they are rolling out from their SANs.

The $1000 per TB includes:

– 2 copies of data (RAID5 mirrored)

– Object based storage providing single namespace (not CAS) – Less management

– scalable in seconds (less Management)

– searchable (integrated metadata support, metadata living in the archive with the content)

– replication

– self healing, self-managing (less Management)

– fully audited

– regulation compliance

– multiple-tenancy support

– migration in place to new platforms (less Management)

More than just disk.

We also have a Linux-based appliance which has better economics. We are shortly to release our nodes using 2TB disks, giving pricing from $729/TB (single copy) to $1460/TB (dual copy). That includes all hardware and the software. All this on a non-proprietary platform, OS, filesystem and file formats. Your data WILL outlive your vendor.

Please do let me know of any other solutions with that feature set for the same price as we are always keen to learn more about any competition.

Cloud argument is for another day.

Thanks

Nick

“Please do let me know of any other solutions with that feature set for the same price as we are always keen to learn more about any competition.”

That’s interesting, Nick. It’s probably a mistake to simply post pricing without naming a volume point, as at the petascale there are in fact competitors that will grossly underprice you. I cannot tell you who they are, as every petascale negotiation I have ever participated in has been NDA’d. However, I can tell you with certainty that I have to even once, in the last year, price out systems for less than $1/GB usable at the petascale from any vendor, and the pricing pressure has definitely been downwards since.

Auditing and regulatory compliance are, of course, important niche features. But specifically on that subject, we know of at least one vendor doing what you are doing (and more than what you have named above) pricing their SW at $0.25/GB when the procurements are on the order of 1PB/2 mos.

Do keep in mind that people looking at systems like the one named in this article are likely interested in unprecedented scales of deployment, generally also associated with unprecedented cost sensitivity.

Joe Kraska

San Diego CA

USA

Thanks for the feedback Joe.

Some points.

1. Our pricing is public list pricing. Not a mistake. A starting point. Open. We can and do, of course, scale our pricing based on scale. I could, like most, show you a private price list under NDA that is considerably higher and then “dramatically” reduce the pricing based on a volume play.

2. The appliance product is priced for hardware and software. There is of course more that MatrixStore has to offer than the above list but that is not for here. My first comment on this post was a genuine request from a client. I am happy to go offline for the full sales pitch.

Like I said, the Backblaze approach is good in the right hands, for a team that has the skills to build/support PB scale. Simply not for everyone.

Ping me offline if you like. Be great to chat.

N

Joe:

Two points. First, they aren’t JBODs. They specifically state they are doing RAID-6 with LVM.

Second, they have 3 RAID-6 sets with 15 disks each. They have 45 total 1.5 TB drives. I.e., no hot spares.

There’s absolutely NO WAY they’re going entire quarters or more without swapping drives in that setup.
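For reference, the capacity implied by that layout can be worked out directly. This is a back-of-the-envelope sketch with a hypothetical helper name; it assumes each RAID 6 set gives up two disks' worth of space to parity and ignores file system overhead and TB/TiB differences.

```python
def raid6_usable_tb(sets, disks_per_set, disk_tb):
    """Usable capacity when every RAID 6 set spends two disks' worth on parity."""
    if disks_per_set < 4:
        raise ValueError("RAID 6 needs at least 4 disks per set")
    return sets * (disks_per_set - 2) * disk_tb

# Layout from the comment: 3 sets of 15 drives, 1.5 TB each, no hot spares.
raw_tb = 3 * 15 * 1.5
usable_tb = raid6_usable_tb(sets=3, disks_per_set=15, disk_tb=1.5)
print(f"raw {raw_tb} TB, usable {usable_tb} TB")
```

Each set survives two drive failures; with no hot spares, a third failure in the same set before a replacement and rebuild loses that set.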

“There’s absolutely NO WAY they’re going entire quarters or more without swapping drives in that setup.”

Well. It’s a do-it-yourselfer. One could simply use a more basic SAS card, or set the controller to JBOD.

But as for the never swapping drives in a RAID set notion. Could one leave it unserviced? One could. If one is willing to lose an entire RAID set and leave it alone forever if lost, then one could go until one wanted to FRU or recap the whole unit. I.e., if you want and need a virtual LUN the size of 15 hard drives, one can make the decision to also have the 15 hard drive size LUN be a unit of failure. Personally, I would only be motivated to do this if I had a specific single stream speed requirement. I.e., if your performance requirement drives you to RAID, you RAID.

Various “cloud storage” technologies can all accommodate what I just said. One just needs to learn to think creatively about the storage problem. To wit: there is nothing about a RAID set that intrinsically requires service, once one has the appropriate replication glueware in place.

Joe.

“However, I can tell you with certainty that I have to even once, in the last year, price out systems for less than $1/GB usable at the petascale from any vendor, and the pricing pressure has definitely been downwards since.”

Hmmm, if I am reading this right, then you haven’t seen Peta-scale systems from any vendor less than $1/GB.

No offense intended, but this suggests to me that you are not talking to the right vendors. We have done numerous designs and quotes over the last 9 months for large scale PB+ systems coming in *well under* that target, with the parallel file system and management stack included.

Relative to vibration, while these drives are heavy, you still have to worry about higher frequency (non-bulk) oscillations, being transmitted through any support. Given 45 drives with a 120Hz signal (7200 RPM) arranged the way they are, it is left as an exercise for the student to calculate the frequency and normal mode power spectrum for this unit. We have seen units in racks situated near a “jumpy” section of a datacenter floor have higher failure rates than identical units situated elsewhere. Moving the rack or the device to a less “bouncy” section did eliminate the issues. Vibrations/impulses can/do impact raids. There is a famous Sun video where the engineer shouted at his drives, and caused some issues. You have to worry about that normal mode power spectrum. Happily, it should be easy to work around by doing some material alterations, and other things that are relatively low cost changes.
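The 120 Hz figure is just the spindle speed expressed in revolutions per second. A one-function sketch, with an illustrative helper name; it says nothing about the chassis's normal modes, which would need measurements of the actual enclosure.

```python
def spindle_hz(rpm):
    """Fundamental rotational frequency of a drive spindle, in hertz."""
    return rpm / 60.0

print(spindle_hz(7200))  # 7200 revolutions per minute is 120 per second
```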

The design of these boxes is interesting. We had thought of something similar for our DeltaV line, but elected to go with a more standard approach, and made some radically different design decisions on the internal guts. They are minimizing cost of build above all other design decisions. We minimized cost of build while making sure if a bus hits our company (so to speak) our customers can still service and support the machines they bought from us, and that they will still perform well even in the face of low cost design decisions.

“Hmmm, if I am reading this right, then you haven’t seen Peta-scale systems from any vendor less than $1/GB.”

I’m sorry, Joe, it was early in the morning. What I meant was that they are ALL less than $1/GB now.

Joe.

Here is an article showing that this solution will not work. It is quite technical, but it points out several flaws in this design.

http://www.c0t0d0s0.org/archives/5899-Some-perspective-to-this-DIY-storage-server-mentioned-at-Storagemojo.html

@crimson,

The article you posted doesn’t say the “solution will not work”. The author is a Sun Employee and was merely saying:

“No, it isn’t a system comparable to an X4540 … I see several problems, but i think it fits their need, so it’s an optimal design for them…the nice thing about custom-build is the fact, that you can build a system exactly for your needs. And the Backblaze system is a system reduced to the minimum.”

This is ideal for nearline storage and D2D backup, which is exactly the business Backblaze built it for. Their solution solves a key problem that no one in the industry has solved — namely this:

http://blog.backblaze.com/wp-content/uploads/2009/08/cost-of-a-petabyte-chart.jpg

we recently launched an open-source hardware project to discuss / improve the backblaze design:

http://openstoragepod.org

Like everything else, you get what you pay for. A professionally designed and tested fault tolerant system is going to cost you $100 per gigabyte when everything is said and done.

Carrott – I’d say poorly designed. I don’t think even EMC would claim that – and they aren’t known for being shy around pricing.

Robin

PS- Any EMC’ers want to weigh in? My guess is that dual-Symms, SRDF and interconnects would top out at about $50/GB. Last I heard, EMC does test their stuff.