“One infrastructure to rule them all” discussed the emerging enterprise need for a single, scalable file storage infrastructure. But what infrastructure?

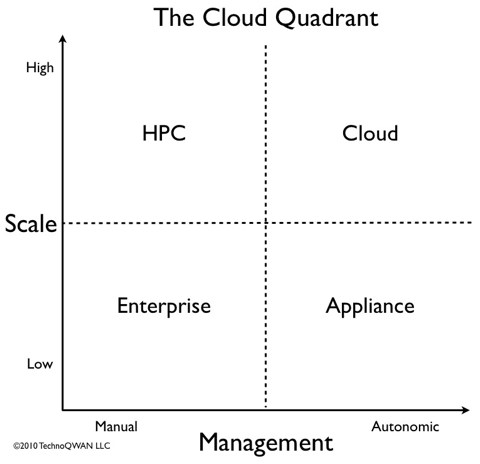

For background, see last year’s “Cloud Quadrant” and this year’s “Why private clouds are part of the future.”

Block and file

For decades, direct-attached block-based storage was the only option. The ’80s introduced file-based storage. Much of the growth in storage systems over the last 15 years has been in file servers.

New systems, be they video, sensor or social, are producing massive collections of files at an accelerating rate. The rapid development of lower-cost mobile computing devices – smartphones, iPads, netbooks and Android tablets – means that content consumption and production will be a major source of file growth. The long tail of content demand means that the variety of online content will grow – especially as the cost of storage declines.

Private cloud

The larger issue is the need to keep this fast-growing information online for years, despite rapid change in the underlying storage, network and computing infrastructures. File data must become independent of our storage and server choices.

As stores grow, data migration becomes less feasible. Rip ’n’ replace gives way to in-place upgrades.

Achieving that means moving to an object storage paradigm. How do we know this will happen? Because it already has.

Object stores at Google and Amazon Web Services are already among the largest storage infrastructures in the world. AWS alone stores over 100 billion objects today. Hundreds of millions of people use object storage every day – and don’t even know it.

What is object storage?

Object storage instantiations vary in detail and supported features. However, all object storage has two key characteristics:

– Individual objects are accessed by a global handle. The handle may be, for example, a hash, a key or something like a URL.

– Extended metadata. The extended metadata content goes beyond that of traditional file systems and may include additional security and content validation as well as presentation, decompression or other information relating to the content, production or value of the enclosed file.
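To make the two characteristics concrete, here is a minimal in-memory sketch. It assumes a SHA-256 content hash as the global handle (one of the options above) and records a checksum and size in each object’s extended metadata for content validation. `FlatObjectStore` and its methods are hypothetical, not any product’s API.

```python
import hashlib
from typing import Dict, Optional, Tuple


class FlatObjectStore:
    """Hypothetical flat object store: one level, no names, only handles."""

    def __init__(self) -> None:
        # handle -> (data, extended metadata); there is no hierarchy.
        self._objects: Dict[str, Tuple[bytes, dict]] = {}

    def put(self, data: bytes, metadata: Optional[dict] = None) -> str:
        # Assumption: the global handle is the SHA-256 hash of the content.
        handle = hashlib.sha256(data).hexdigest()
        meta = dict(metadata or {})
        # Extended metadata: content-validation fields plus anything
        # the application supplies (type, presentation hints, etc.).
        meta["sha256"] = handle
        meta["size"] = len(data)
        self._objects[handle] = (data, meta)
        return handle

    def get(self, handle: str) -> bytes:
        data, meta = self._objects[handle]
        # Validate the content against the checksum kept in metadata.
        if hashlib.sha256(data).hexdigest() != meta["sha256"]:
            raise ValueError("object failed content validation")
        return data

    def metadata(self, handle: str) -> dict:
        return dict(self._objects[handle][1])


store = FlatObjectStore()
handle = store.put(b"quarterly report", {"content-type": "text/plain"})
print(store.get(handle), store.metadata(handle)["size"])  # b'quarterly report' 16
```

Because the handle is derived from the content, storing the same bytes twice yields the same handle, which is one reason some stores use hashes rather than arbitrary keys.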

Like files, objects contain data. But they lack key features that would make them files. They don’t have:

– Hierarchy. Not only are all objects created equal, they all remain at the same level. You can’t put one object inside another.

– Names. At least, not human-type names like Claudia_Schiffer or 2006_Taxes.

A user-facing component provides those missing elements. You decide which files belong in which folders. You give the files names. You decide which users have access to which files and what those users can do with those files.

Those choices are embedded in the object metadata so they can be presented as you have organized them. But if you have the object’s handle you can access it directly.
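The user-facing layer can be sketched the same way. Folder, name and reader choices are written into each object’s metadata, and a folder listing is just a filter over that metadata. The handle still gives direct access. The metadata keys (`folder`, `name`, `readers`) and all function names are hypothetical.

```python
import hashlib
from typing import Dict, List, Tuple

# Flat store: handle -> (data, metadata). No folders, no names.
objects: Dict[str, Tuple[bytes, dict]] = {}


def put(data: bytes, metadata: dict) -> str:
    handle = hashlib.sha256(data).hexdigest()
    objects[handle] = (data, dict(metadata))
    return handle


def save_file(data: bytes, folder: str, name: str, readers: List[str]) -> str:
    # The user's organization is embedded in the object's metadata.
    return put(data, {"folder": folder, "name": name, "readers": readers})


def list_folder(folder: str, user: str) -> List[str]:
    # Present objects "as you have organized them": filter on the folder
    # and on the access list recorded in each object's metadata.
    return sorted(
        meta["name"]
        for _, meta in objects.values()
        if meta["folder"] == folder and user in meta["readers"]
    )


def get_by_handle(handle: str) -> bytes:
    # Holding the handle bypasses the naming layer entirely.
    return objects[handle][0]


h = save_file(b"1040 form", "/taxes", "2006_Taxes", readers=["claudia"])
save_file(b"photo bytes", "/pictures", "Claudia_Schiffer", readers=["claudia"])
print(list_folder("/taxes", "claudia"))  # ['2006_Taxes']
print(get_by_handle(h))                  # b'1040 form'
```

A real system would also need to decide how access rules apply to direct handle reads. This sketch only shows the separation between naming and storage.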

All objects look alike. Some are bigger and some are smaller, but until we get them dressed and named, they aren’t files. Yet they are a lot closer to files than blocks are. Which means that if you choose to manage objects you no longer have to worry about blocks.

Essentially, then, objects are files with an address – instead of a pathname – and extra metadata. This differs from distributed file systems, where the metadata is stored in a metadata server that keeps track of the location of the data on the storage servers.

Some file storage systems are built on object storage repositories. Legacy APIs make file access a requirement for many applications, but URL-style access through HTTP is more flexible in the long run.

Crossing the implementation chasm

While the economics of objects are obvious at scale, they are less compelling at the beginning of a typical enterprise project. It is easier to buy another file server than to worry about long-term architecture.

Here’s a rough diagram of the relative scalability of storage options:

When under-12-month paybacks are expected, who will buy an object storage infrastructure? The simple answer is that as object stores become better known and startup costs are reduced, more companies will buy them. Archives will be the first market. The longer answer is that as public cloud projects are brought inside, object stores will receive them.

The StorageMojo take

As organizations amass large file collections, the economies of scale and management for object storage will become apparent. Savvy architects will add commodity-based scale-out object storage to their tool kit.

HDS, NetApp and HP have recently added modern object stores to their product lines. And rumor has it EMC will too, either by getting Atmos to work or by buying Isilon.

Courteous comments welcome, of course. Still don’t like the name object, but I’ll get over it.

“And rumor has it EMC will too, either by getting Atmos to work or by buying Isilon.”

Didn’t know Isilon had object storage in their repertoire (at least their website doesn’t give any indication). How about Panasas? They seem to actually have an object storage implementation that has been shipping for years.

EMC to buy Isilon?! Do you have any more information on this?

[disclaimer: I work for an object-based storage software company]

Cloud-computing-style object stores like Amazon’s S3 or Azure’s Blob service all share a common trait that is not obvious at first sight.

Their approach to storage is to use a single protocol for both data and metadata management. They are completely API-driven and leverage the most ubiquitous data and communication protocol: HTTP.

Both traits have huge implications for the IT landscape as a whole. By leveraging HTTP, there are virtually no limitations on the devices or communication technologies used to access your storage [now compare this with iSCSI or SANs].

The API abstraction enables applications of all kinds to directly store _and_ manage data. ISVs can start to integrate vertically from the application level all the way down to the storage level.

Users of such cloud-storage-enabled applications no longer have to go to the IT department to have a new storage resource added to the DMS; instead, non-IT staff can (almost) completely manage storage without calling the IT department.

IMHO the nature of direct access and control of object-based cloud-storage is the true game changer.
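The single-protocol idea can be illustrated without a network. One dispatcher handles data (PUT, GET, DELETE) and metadata (HEAD) through the same HTTP verbs, and user metadata travels in prefixed headers. This is a hypothetical sketch. The `x-meta-` prefix is an assumption loosely modeled on S3’s `x-amz-meta-` convention, and the status codes are illustrative.

```python
from typing import Dict, Optional, Tuple

META_PREFIX = "x-meta-"  # hypothetical; S3 uses "x-amz-meta-"
Response = Tuple[int, Dict[str, str], bytes]

# (bucket, key) -> (body, user metadata)
store: Dict[Tuple[str, str], Tuple[bytes, Dict[str, str]]] = {}


def dispatch(method: str, path: str,
             headers: Optional[Dict[str, str]] = None,
             body: bytes = b"") -> Response:
    """Handle one HTTP-style request; returns (status, headers, body)."""
    if method not in ("PUT", "GET", "HEAD", "DELETE"):
        return 405, {}, b""
    bucket, _, key = path.lstrip("/").partition("/")
    if not bucket or not key:
        return 400, {}, b""
    if method == "PUT":
        # Data and metadata arrive in the same request.
        meta = {k.lower(): v for k, v in (headers or {}).items()
                if k.lower().startswith(META_PREFIX)}
        store[(bucket, key)] = (body, meta)
        return 201, {}, b""
    if (bucket, key) not in store:
        return 404, {}, b""
    data, meta = store[(bucket, key)]
    if method == "GET":
        return 200, dict(meta), data
    if method == "HEAD":
        # Metadata only, over the same protocol as the data.
        return 200, dict(meta), b""
    del store[(bucket, key)]  # DELETE
    return 204, {}, b""


dispatch("PUT", "/docs/contract.pdf", {"X-Meta-Owner": "legal"}, b"%PDF-1.4")
print(dispatch("HEAD", "/docs/contract.pdf"))
# (200, {'x-meta-owner': 'legal'}, b'')
```

Because every operation is an ordinary HTTP request, any client that can speak HTTP can both store and manage objects, which is the point made above.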

Let’s not forget that EMC more or less invented object-based storage with Centera.

Sachin, Isilon is essentially an object store with a NAS front end. They don’t talk about it much because it is irrelevant to their target markets.

Damir, EMC buying Isilon is currently a hot rumor, along with Oracle buying EMC. I don’t see how either EMC or NetApp will manage to remain independent given that both Oracle and Cisco could use a healthy storage business.

Mike, EMC bought the product that became Centera back in the ’90s.

Robin

Hey Robin,

Nice article. There is a clear need in the industry to clarify what is and is not object storage. A lot of the confusion probably stems from the use of ‘object’. So thanks for starting the clarification process.

Also, I think the first link is incorrect. It links to this same article, and I think you meant to link to http://storagemojo.com/2010/09/10/one-infrastructure-to-rule-them-all/

my 2 cents

-Marc

Robin,

Just wondering if you’re going to publish an updated version of the Cloud Quadrant with the company names on it (as per your previous one http://storagemojo.com/2009/09/28/the-cloud-quadrant/)?

Nice piece Robin. You did forget to mention Dell with their DX platform which is 100% object based.

@ Mike EMC did push the object storage market with Centerra but bought the technology from a Belgian company called Filepool.

Object storage just makes a lot of sense for many large-scale, and even medium-scale, systems.

A significant advantage of object storage is that it adds a degree of freedom to the redundancy model – objects can be parity-striped across multiple nodes. Isilon and ZFS are two examples of this, albeit both with block-level interfaces wrapped on the front. Without this ability, you must mirror everything across multiple nodes, or entrust expensive storage nodes with “redundant everything”.
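The parity idea can be sketched with single XOR parity, the simplest scheme. Real systems may use Reed-Solomon or other erasure codes to survive more than one failure. An object is cut into k data chunks plus one parity chunk, one chunk per node, and any single lost chunk is the XOR of the survivors. With k = 4, this stores 1.25 times the data, versus 2 times for two-way mirroring. The function names are hypothetical.

```python
from functools import reduce
from typing import List, Optional


def xor_bytes(a: bytes, b: bytes) -> bytes:
    return bytes(x ^ y for x, y in zip(a, b))


def stripe(obj: bytes, k: int) -> List[bytes]:
    """Split an object into k equal data chunks plus one XOR parity chunk."""
    chunk = -(-len(obj) // k)                 # ceiling division
    padded = obj.ljust(chunk * k, b"\0")      # pad so chunks are equal size
    data = [padded[i * chunk:(i + 1) * chunk] for i in range(k)]
    parity = reduce(xor_bytes, data)
    return data + [parity]                    # k + 1 chunks, one per node


def rebuild(chunks: List[Optional[bytes]]) -> List[bytes]:
    """Recover a single missing chunk (None) by XOR-ing the survivors."""
    missing = [i for i, c in enumerate(chunks) if c is None]
    if len(missing) > 1:
        raise ValueError("single parity tolerates only one lost node")
    chunks = list(chunks)
    if missing:
        survivors = [c for c in chunks if c is not None]
        chunks[missing[0]] = reduce(xor_bytes, survivors)
    return chunks


obj = b"object storage stripes data"
nodes = stripe(obj, 4)      # 4 data chunks + 1 parity chunk
nodes[2] = None             # one node fails
restored = rebuild(nodes)
# A real system would keep the original length in the object's metadata.
print(b"".join(restored[:4])[:len(obj)] == obj)  # True
```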

I can see lots of advantages to object stores, and some major obstacles for smaller shops that don’t want headaches:

– No interoperable standards for accessing object stores, so you get vendor lock-in from day one. Yes, HTTP is important. So is Ethernet for iSCSI, FCoE, and AoE, and that’s only three standards to choose from for block access. We need HTTP API standards.

– Metadata is not metadata is not metadata, and one app’s data is another app’s metadata. No vendor has even scratched the surface on providing comprehensive, extensible metadata for object stores, and we’re even farther from metadata access standards than we are from basic object access standards.

– For the near future, object stores need to be wrapped with filesystem and/or block semantics, so our existing apps will continue working. Here’s our standard access + metadata layer with a limited number of widely used standards – CIFS, NFS, iSCSI. Great market opportunities for one product to span multiple object store back ends and possibly multiple access front ends. A few vendors are pursuing this, but not getting much traction yet. And some of them will get bought and buried by cloud or hardware vendors to preserve lock-in.

– For the near future, there’s another great market opportunity for filesystem access products that span internal object stores, private cloud object stores, and multiple public cloud object stores, with rules to move and copy objects automagically. This is how I avoid vendor lock-in at multiple levels, and optimize storage speed, cost, and reliability by application. Absolutely must have high quality, client-controlled data and selected metadata encryption.
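The rule-driven movement in the last point might look like the following sketch. A catalog records where each object currently lives, ordered rules map an object to the stores that should hold it, and the difference becomes a list of copy and delete actions. The store names, the rules and the `ObjectInfo` fields are all hypothetical, and encryption is left aside.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple


@dataclass
class ObjectInfo:
    handle: str
    app: str
    age_days: int


# Hypothetical rules, checked in order: (predicate, stores that should
# each hold a copy). The first matching rule wins.
Rule = Tuple[Callable[[ObjectInfo], bool], List[str]]
RULES: List[Rule] = [
    (lambda o: o.app == "payroll", ["internal", "private_cloud"]),
    (lambda o: o.age_days > 365, ["public_cloud_a", "public_cloud_b"]),
    (lambda o: True, ["internal"]),  # default placement
]


def place(obj: ObjectInfo, rules: List[Rule] = RULES) -> List[str]:
    """Return the stores that policy says should hold this object."""
    for predicate, targets in rules:
        if predicate(obj):
            return targets
    return []


def plan_moves(catalog: Dict[str, List[str]],
               objs: List[ObjectInfo]) -> List[Tuple[str, str, str]]:
    """Diff current locations against policy into (action, handle, store)."""
    actions: List[Tuple[str, str, str]] = []
    for o in objs:
        current = set(catalog.get(o.handle, []))
        wanted = set(place(o))
        # Copies are listed before deletes, so executing the plan in order
        # adds new locations before old ones are removed.
        actions += [("copy", o.handle, s) for s in sorted(wanted - current)]
        actions += [("delete", o.handle, s) for s in sorted(current - wanted)]
    return actions


catalog = {"h1": ["internal"], "h2": ["internal"]}
objs = [ObjectInfo("h1", "email", 400), ObjectInfo("h2", "payroll", 30)]
for action in plan_moves(catalog, objs):
    print(action)
```

Keeping the policy separate from the catalog means a store can be added or dropped by editing rules, without applications knowing where their objects live.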

There is a small problem with the object storage concept: the storage itself is not able to maintain its own structure.

The information about hash keys or URLs needs to be saved somewhere, and guess where? It is saved on external block-level storage. A small one, indeed, but it becomes a single point of failure.

Lose your object storage DB and, if you have millions of objects, you’ll spend years scanning your object storage metadata to rebuild the DB (that is, if your storage even lets you do so).

There has got to be some intelligent way to tackle the obstacle of the external DB and reduce the points of failure.

I like the concept of object storage (and I don’t like the name either) for large masses of files with long retention and easy put/get interfaces, but I currently fail to see how to overcome the problem the storage pushes back at ya with the DB.

@ RAN

Object Stores are not all created with an external metadata DB approach. MatrixStore (like others I am sure) maintains redundancy of content, metadata and management information within the solution and thus does not rely on a ‘head’ node.

Ran, there may be object-based storage systems that work like this, but the ones I am aware of support object enumeration/listing. There is no need to store any information elsewhere.
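As the replies point out, a store that keeps metadata with each object and supports enumeration can regenerate any index by scanning. Here is a minimal sketch. The node layout, handles and the `name` metadata key are hypothetical. The scan is still linear in the number of objects, which is the cost Ran worries about at large scale; what it removes is the external DB as a single point of failure.

```python
from typing import Dict, Iterator, Tuple

# Hypothetical node contents: each object carries its own metadata,
# so no external database is needed to know what it is.
NODES: Dict[str, Dict[str, Tuple[bytes, dict]]] = {
    "node1": {"a1f3": (b"...", {"name": "/mail/inbox.mbox"})},
    "node2": {"9c07": (b"...", {"name": "/scans/invoice.tif"}),
              "a1f3": (b"...", {"name": "/mail/inbox.mbox"})},  # replica
}


def enumerate_objects(nodes) -> Iterator[Tuple[str, str, dict]]:
    """Walk every node and yield (node, handle, metadata)."""
    for node, objs in nodes.items():
        for handle, (_, meta) in objs.items():
            yield node, handle, meta


def rebuild_index(nodes) -> Dict[str, dict]:
    """Reconstruct name -> {handle, replicas} purely from object metadata."""
    index: Dict[str, dict] = {}
    for node, handle, meta in enumerate_objects(nodes):
        entry = index.setdefault(meta["name"], {"handle": handle, "replicas": []})
        entry["replicas"].append(node)
    return index


print(rebuild_index(NODES)["/mail/inbox.mbox"])
# {'handle': 'a1f3', 'replicas': ['node1', 'node2']}
```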

Robin,

Nice article. I enjoyed our talk. It’s nice to see someone who understands the area.

Bob

@Rocky, I agree interoperability in object storage is a real concern today. S3 became a de facto standard but leaves a big void as to how applications interact with meta-data. It’s not uncommon to upload data to S3 using, for example, a backup tool and then be unable to retrieve your data without using the exact same application in the future. All because of a mix of meta-data incompatibilities and reinventing the wheel in each app…

We’ve created an open source project called Droplet, which is a C client library for cloud storage services whose goal is to be cloud agnostic (talks S3 today but we are adding cloudfiles & SNIA CDMI support) and to solve the most common issues associated with cloud storage, namely caching, compression, encryption and meta-data handling. Any application written with Droplet should be able to open files written by another Droplet app and in particular transparently decompress or decrypt data by using compatible meta-data formats. (GPL Source code at http://github.com/scality/Droplet).
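The interoperability idea is that one application can read what another wrote by honoring shared metadata. It can be sketched with compression alone. The `x-compression` key and its values are hypothetical conventions for illustration, not Droplet’s actual metadata format. The same pattern would apply to recording how data was encrypted.

```python
import zlib
from typing import Dict, Tuple

# Hypothetical shared convention: any app that honors this metadata key
# can read what any other app wrote.
COMPRESSION_KEY = "x-compression"


def encode(data: bytes, compress: bool = True) -> Tuple[bytes, Dict[str, str]]:
    """Prepare an object body plus metadata describing how it was stored."""
    if compress:
        return zlib.compress(data), {COMPRESSION_KEY: "zlib"}
    return data, {COMPRESSION_KEY: "none"}


def decode(body: bytes, meta: Dict[str, str]) -> bytes:
    """Transparently undo whatever the writer did, as recorded in metadata."""
    method = meta.get(COMPRESSION_KEY, "none")
    if method == "zlib":
        return zlib.decompress(body)
    if method == "none":
        return body
    raise ValueError(f"unknown compression: {method}")


body, meta = encode(b"backup set 42" * 100)
print(meta, decode(body, meta) == b"backup set 42" * 100)
# {'x-compression': 'zlib'} True
```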

It’s not solving all meta-data issues but I think it’s going in the right direction.

@Jaden D

Interesting that you mention those two platforms. There is a connection. I co-founded both Belgium-based FilePool, who indeed provided the software for EMC’s Centera (just one ‘r’, incidentally – it’s Celerra that is spelled with two – go figure), and Austin-based Caringo, who delivers the rebranded CAStor software at the heart of the DX6000. If there is one lesson we learned from the privilege of being able to do it all over again from scratch: simplicity rules!

When did Isilon become an “Object Store”?

http://www.cs.cmu.edu/~garth/papers/welch-fast08.pdf

http://en.wikipedia.org/wiki/Object_storage_device

I was under the impression (which was reinforced after reading Isilon’s patents) that Isilon is nothing but an NFS store that replicates files across nodes. Is Isilon reinventing itself through market-speak?

More… (Robin, please add to previous post)

http://www.pdsi-scidac.org/docs/sc06/IsilonNFSpanel.pdf

KD,

No, that is my interpretation of their technology. But I may have a broader definition of object than some.

I’ve asked Isilon to comment, but I understand things are pretty busy at their Seattle HQ.

Robin

The security implications of moving storage administration and access to HTTP are scary. HTTP provides a well-known attack vector with well-known exploit classes. It’s a protocol that almost always needs to traverse firewalls. Standardizing on an API lowers an already low barrier to entry. Ask storage vendors and their engineers about security and they talk obscurity: it’s a segregated FC fabric that few attackers have access to, an attacker can’t just download our special management software, no attacker knows our protocols, etc. But HTTP is so common that it’s easy to proxy HTTP into a company’s depths (likely some employee has already set it up for you in a misconfigured Apache), and it’s more difficult to notice additional HTTP traffic offsite. There are ways to mitigate the security issues, but increasing ease of use works against those precautions. Storage vendors must put security at the forefront, much more than they have with previous products.

Great article.

Franisco, HTTP exploits that work with website-related web services like Drupal don’t translate to other web services. Yes, object stores will have security vulnerabilities as they are presented via public-facing interfaces, but securing HTTP interfaces is pretty easy for any network admin. Most financial transactions today are done through HTTP interfaces over public networks, so there is already a ton of good art to copy.
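One piece of that existing art is request signing. The client signs the request with a shared secret, and the server rejects requests that are stale or have been altered. Here is a simplified sketch using HMAC-SHA256. It is loosely in the spirit of S3’s signed requests but is not AWS Signature Version 4. The secret, the signed fields and the 300-second skew window are assumptions, and it would still need to run over TLS.

```python
import hashlib
import hmac
import time
from typing import Optional

# Hypothetical per-client shared secret; it is never sent on the wire.
SECRET = b"example-secret-key"


def sign(method: str, path: str, timestamp: int, key: bytes = SECRET) -> str:
    """Client side: sign the request fields that must not be altered."""
    message = f"{method}\n{path}\n{timestamp}".encode()
    return hmac.new(key, message, hashlib.sha256).hexdigest()


def verify(method: str, path: str, timestamp: int, signature: str,
           key: bytes = SECRET, max_skew: int = 300,
           now: Optional[int] = None) -> bool:
    """Server side: reject stale or tampered requests."""
    now = int(time.time()) if now is None else now
    if abs(now - timestamp) > max_skew:  # limits replay of captured requests
        return False
    expected = sign(method, path, timestamp, key)
    return hmac.compare_digest(expected, signature)  # constant-time compare


ts = int(time.time())
sig = sign("GET", "/docs/contract.pdf", ts)
print(verify("GET", "/docs/contract.pdf", ts, sig))     # True
print(verify("DELETE", "/docs/contract.pdf", ts, sig))  # False
```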

Robin, sounds like this is a two-step journey. First will be to move binaries into object repositories, and the second will be the rise of middleware vendors that support a heterogeneous environment. That way an organization can move data between the cloud and local storage without systems caring about where the object resides… Hmmm, I think I need to have lunch with my angel funder…