I don’t plan this stuff, it just happens

Guess this is turning into ZFS performance week at StorageMojo. Now we get to see how HW RAID 10 compares to software ZFS RAID 10.

This comes from a fine report on Robert Milkowski’s blog, milek’s blog. It is part of a continuing series.

Comparing hardware and software RAID

This time Robert compares ZFS to a mid-range EMC Clariion CX3-40, both running on a 16 GB Sun x4100 M2 server with two dual-core Opterons. The CX3-40, which EMC positions as “High performance and capacity for high-bandwidth applications, heavy OLTP or e-mail workloads. . . ,” is connected to the x4100 by dual 4Gb FC links and sports 40 4Gb FC 15k 73 gig drives.

That’s a rig with some scoot.

He also throws in a Sun x4500 (Thumper) as a local disk comparison.

What’s the layout?

Robert configured four test stands on the hardware.

- Hardware RAID: the CX3-40 is set up with four 10-drive RAID 10 LUNs, two on each controller. ZFS used the four LUNs in its storage pool.

- Software RAID: the Clariion presents 40 individual disks, 20 on each controller.

- Software RAID/Q: same as above with “pci-max-read-request=2048 set in qlc.conf,” which must mean something. Did I mention the tests are running on Solaris 10?

- x4500 SW RAID: a ZFS RAID 10 pool set up across 44 7200 rpm, 500 GB drives.
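
For readers who haven’t built one, here is a rough sketch of what a “ZFS RAID 10” pool looks like: a stripe across two-way mirrors, created with repeated `mirror` groups on the `zpool create` command line. The Python below only builds that command; it doesn’t run it. The pool name `tank`, the device names, and the choice to pair one disk from each controller in every mirror are all assumptions for illustration, not details taken from Robert’s report.

```python
from typing import List


def zpool_mirror_command(pool: str, side_a: List[str], side_b: List[str]) -> List[str]:
    """Build a `zpool create` argument list that stripes across two-way mirrors."""
    if len(side_a) != len(side_b):
        raise ValueError("each mirror needs one device from each side")
    cmd = ["zpool", "create", pool]
    # Each "mirror a b" group is one mirrored pair; ZFS stripes across the groups.
    for a, b in zip(side_a, side_b):
        cmd += ["mirror", a, b]
    return cmd


# Hypothetical Solaris-style device names: 20 disks on each of two controllers,
# matching the 40-disk Software RAID layout described above.
controller_a = [f"c2t{i}d0" for i in range(20)]
controller_b = [f"c3t{i}d0" for i in range(20)]

command = zpool_mirror_command("tank", controller_a, controller_b)

# Show only the first two mirror groups; the full command has 20 of them.
print(" ".join(command[:9]), "...")
```

Pairing across controllers is one plausible design choice: losing a controller would then cost each mirror only one side. Whether Robert’s pools were laid out that way isn’t stated here.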

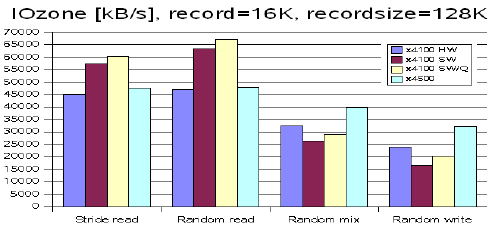

Note the random write performance? 44 slower spindles beat 40 fast ones. Hm-m-m.

If you care about ZFS you should read the whole thing

But here’s some eye-candy to whet your appetite:

An IT product that isn’t fully baked day one? How can that be?

Honest fellow that he is, Robert can’t help noting that while ZFS performance is excellent, there are some feature deficits that the ZFS team is hard at work on:

The real issue with ZFS right now is its hot spare support and disk failure recovery. Right now it’s barely working and it’s nothing like you are accustomed to in arrays. It’s being worked on right now by ZFS team so I expect it to quickly improve. But right now if you are afraid of disk failures and you can’t afford any downtimes due to disk failure you should go with HW RAID and possibly with ZFS as a file system.

The StorageMojo take

Back when RAID was young, dedicated hardware made sense because CPUs were too pathetic for words. Nor had anyone done a clean sheet design like ZFS. What Robert demonstrates is that a software RAID implementation is capable of delivering the performance of hardware RAID today. That is very good news for anyone on a budget.

RAID arrays may escape becoming commodities. They may simply become irrelevant.

Update: Robert posted a correction in the comments, which I incorporated above and which just strengthens the point.

Comments welcome, of course.

You have the obvious problem of I/O on desktop machines – comparisons of ZFS on uber hardware don’t make a bit of difference when talking about Joe Schmo’s latest onboard SATA controller.

One correction – on the x4500 I used 44 disks in RAID 10, plus 2 hot spare disks and 2 mirrored disks for the system, as I think this is a good production configuration for the x4500.

There ain’t no such thing any more as a “hardware” RAID controller, at the high end. Clariions run Intel CPUs on a well-known OS, really. The higher-end boxes also run general-purpose CPUs with proprietary software. Gone are the days when we had custom ASICs doing stuff.

Not so, Dimitris. Take a look at the Sun 6140 and 6540… Hardware-based RAID 5 on the ASIC. You can see the performance numbers at storageperformance.org

Best regards.

Our experience was that ZFS on Clariion didn’t work. We wanted to use large LUNs (e.g. 6 TB) for Veritas NetBackup disk staging and hoped ZFS would make that possible. All we got was ZFS errors, system crashes once we were able to create pools and file systems, and recommendations from EMC support that we should not use ZFS because it was not supported.