Everyone in the data storage industry knows about the gap between the I/Os per second that disk drives deliver and what processors require. But a similar problem faces DRAM’s ability to feed many-core chips.

William Wulf and Sally McKee named it “the memory wall” in their 1994 paper Hitting the Memory Wall: Implications of the Obvious, and described the problem this way:

We all know that the rate of improvement in microprocessor speed exceeds the rate of improvement in DRAM memory speed – each is improving exponentially, but the exponent for microprocessors is substantially larger than that for DRAMs. The difference between diverging exponentials also grows exponentially; so, although the disparity between processor and memory speed is already an issue, downstream someplace it will be a much bigger one.
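To see why a gap between two diverging exponentials itself grows exponentially, here is a small Python sketch that compounds two annual improvement rates. The 50% (processor) and 7% (DRAM) figures are illustrative assumptions, not numbers taken from the Wulf and McKee paper.

```python
def speed_gap(cpu_rate: float, dram_rate: float, years: int) -> float:
    """Ratio of processor speed to DRAM speed after `years`, both starting at 1.0.

    cpu_rate and dram_rate are annual fractional improvements (0.5 means 50%/year).
    """
    cpu = (1 + cpu_rate) ** years
    dram = (1 + dram_rate) ** years
    return cpu / dram

# Illustrative (assumed) rates: processors improve 50%/year, DRAM 7%/year.
for years in (0, 5, 10, 20):
    print(years, round(speed_gap(0.50, 0.07, years), 1))
```

Under these assumptions the ratio grows by a constant factor of 1.5/1.07, roughly 1.4, every year, which is exactly the "difference between diverging exponentials" the quote describes.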

According to an article in IEEE Spectrum, that time is almost upon us. Simulations at Sandia National Laboratories predict that once a chip has more than 8 cores, conventional memory architectures will slow application performance.

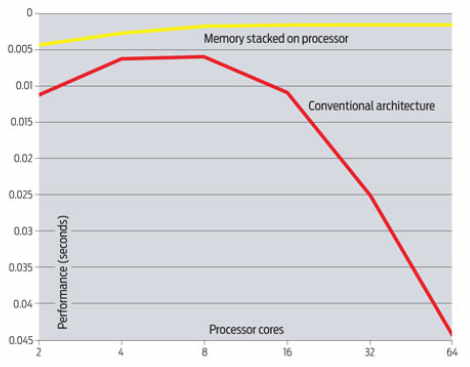

Update 1: Here’s the graph from Sandia. It took me quite a while to figure out what it was saying (thanks, commenter!), so I didn’t publish it in the original post. As I said on ZDNet this morning:

Performance roughly doubles from 2 cores to 4 (yay!), near flat to 8 (boo!) and then falls (hiss!).

End update 1.

James Peery of Sandia’s computation, computers, information and mathematics research group is quoted as saying “after about 8 cores, there is no improvement. At 16 cores, it looks like 2.” The memory wall’s impact is greatest on so-called informatics applications, which must process massive amounts of data, such as sifting through data to determine whether a nuclear proliferation failure has occurred.
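A minimal roofline-style sketch shows why adding cores stops helping once a shared memory system saturates. All parameter values are hypothetical, chosen so the curve flattens at 8 cores. The model only reproduces the flattening; it does not capture the decline past 8 cores in the Sandia results, which would require contention effects this sketch omits.

```python
def throughput(cores: int, per_core_ops: float, bytes_per_op: float,
               mem_bw: float) -> float:
    """Operations per second for `cores` cores sharing one memory system.

    Throughput is the lesser of what the cores can compute and what the
    memory system can feed them (mem_bw bytes/s divided by bytes per op).
    """
    compute_bound = cores * per_core_ops
    memory_bound = mem_bw / bytes_per_op
    return min(compute_bound, memory_bound)

# Hypothetical numbers: 1e9 ops/s per core, 8 bytes per op, 64 GB/s of memory bandwidth.
for n in (1, 2, 4, 8, 16, 32):
    print(n, throughput(n, 1e9, 8, 64e9) / 1e9, "Gops/s")
```

Past the point where `cores * per_core_ops` exceeds `mem_bw / bytes_per_op`, extra cores add nothing; only more memory bandwidth or fewer bytes per operation move the ceiling.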

John von Neumann made the same point in his 1945 First Draft of a Report on the EDVAC:

This result deserves to be noted. It shows in a most striking way where the real difficulty, the main bottleneck, of an automatic very high speed computing device lies: At the memory.

Gee, bandwidth is important. I thought it was all IOPS.

Help is on the way

The Spectrum article notes that Sandia is investigating stacked memory architectures, popular in cell phones for space reasons, to get more memory bandwidth. Professor McKee has also worked on the Impulse project to build a smarter memory controller for

. . . critical commercial and military applications such as database management, data mining, image processing, sparse matrix operations, simulations, and streams-oriented multimedia applications.

Update 2: Turns out Rambus has a 1 TB/sec initiative underway. Goals include:

- 1 TB/s memory bandwidth to a single system on a chip

- Suitable for low-cost, high-volume manufacturing

- Works for gaming, graphics and multi-core apps

The Terabyte Bandwidth Initiative is an initiative, not a product announcement. They’ll be rolling out some of the technologies in 2010 with next-gen memory specs. Courtesy of Rambus is this slide describing some of their issues:

Alas, it doesn’t look like Intel’s late-to-the-party integrated memory controller and QuickPath Interconnect in Nehalem will get us ahead of the problem. And with multi-core, multi-CPU system designs, how do you keep the system from looking like a NUMA architecture?

End update 2.

The StorageMojo take

Given Intel’s need to create a market for many-core chips, expect significant investment in this engineering problem. Because it isn’t clear how much this affects consumer apps, solutions that piggyback on existing consumer technologies, like stacked memory from cell phones, will be the economical way to slide this into consumer products such as high-end game machines.

Expect more turbulence at the peak of the storage pyramid, which will further encourage the rethinking of storage architectures. That is a good thing for everyone in the industry.

Courteous comments welcome, of course. If anyone wants to make the case that von Neumann was wrong, I’m all ears.

Stacked memory won’t solve the problem. Look again at the chart in the IEEE Spectrum article. Non-stacked (traditional) memory shows a dramatic decline in performance above 8 cores.

Stacked memory shows a leveling off: no performance drop, but no performance gain either. What’s the point of adding more cores? Back to the drawing board to solve the memory bandwidth problem.

Massively multi-core (MMC) systems have at least two major barriers to overcome: memory constraints and the difficulty of writing software that takes advantage of them.

Generations of PhD theses and commercial research have not yet cracked the software problem for supercomputing, so don’t expect major breakthroughs soon.

Thanks for the JvN pointer. It’s nice to see the terminology of the time.

That memory bandwidth and latency are essential is not news. That the problem is now hitting mainstream computing is. This is why Intel’s QPI switched interconnect in Nehalem processors is long overdue (AMD’s Opteron has had HyperTransport for years). The 8-core Mac Pro was benchmarked as no faster than the 4-core version for many workloads, so this is not a theoretical concern.

One important solution to the problem is seldom discussed: refactoring software to make it more efficient and to reduce the bloat from layer upon layer of abstraction piled on legacy code. Apple is adopting this approach with Snow Leopard, and other software vendors would do well to follow suit. Moore’s law stopped delivering single-thread speedups a number of years ago. The sloppy coding habits of many programmers have to go, replaced by a newfound emphasis on performance and its necessary precondition: a ruthless culling of unnecessary features that add bloat but little value (JVMs inside the database, anyone?).

Hello Robin,

Enjoyed your post.

Granted, those of us who have been around as customers, vendors, analysts, media or whatever are familiar with the decades-old gap between I/O performance, storage capacity and processor speed, but it’s far from known by everyone in the industry.

From what I continue to see and hear from IT customers, and even from vendors and others, particularly those who have been in the industry for less than a decade, there is still an amazing lack of awareness of the I/O performance gap. In fact, the industry trends white paper “Data center performance bottlenecks and the server storage I/O performance gap” continues to be a very popular download and a point of discussion during presentations, seminars and keynotes.

So just how long until we hit the wall? And is it a moving wall, like the superparamagnetic limit for disk drives that we were supposed to hit several years ago?

Granted, the disk drive wall has been pushed back for a while. Will the related memory wall likewise be pushed back before it arrives, delaying its impact? Is it an isolated problem for extreme corner-case environments, a major concern for commercial computing, or simply something fun to talk about?

Cheers

Gs

Robin

This trend was startlingly obvious to storage manufacturers because they spent more of their time flinging bits from disk to client than computing on those bits. One of the reasons I, among others, was thrilled with the original Opteron work from AMD was that it added more memory controllers: a four-socket Opteron system, with each chip having its own memory controller, is an impressive beast.

The massive-cache experiment that was the Itanium was also flawed, in that multiple cores not only raise raw memory bandwidth requirements to feed the execution pipelines but also add coherence traffic, which consumes bandwidth as well. (Coherence traffic was the death of 8-core Opteron systems, which, with a simple probe/response coherence protocol, quickly lost the extra bandwidth benefit.)

Dave Hitz commented that flash is the new disk and disk is the new tape; perhaps he got it wrong, and DRAM is the new disk. When you have 16 billion transistors in a 45nm process, why not just a couple of cores and 8GB of static RAM?

–Chuck

In one of the videos Sun originally released about its 7000 storage system (since pulled or replaced), they mention that the only thing limiting I/O is memory bandwidth. One of the available options is dual 10 GigE interfaces, and supposedly they can saturate both (streaming from the JBODs).

Given that the controller heads support up to 128 GB of RAM in some configurations, that’s a lot of cache.

Memory bandwidth has been an encroaching problem for years. It’s another of those issues that has come about because of mismatches in the characteristic periods of exponential growth. Some things (memory capacity, disk capacity, processing power, etc.) go up on an areal density basis, whilst others depend on a single dimension (typically interlink speeds, serial I/O speeds and so on).
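Taking the commenter’s framing at face value (an assumption, not a device-physics model), a quantity that scales with area grows with the square of the linear shrink factor, while one tied to a single dimension grows only with the shrink factor itself. A small Python sketch of that mismatch:

```python
def scaling_after_shrinks(shrink: float, generations: int) -> tuple[float, float]:
    """Growth of an area-scaled quantity vs. a linearly scaled one.

    Assumes each generation improves linear dimensions by `shrink`, so an
    area-based quantity grows by shrink**2 per generation while a
    single-dimension quantity grows by only `shrink`.
    """
    areal = (shrink ** 2) ** generations
    linear = shrink ** generations
    return areal, linear

# Assumed shrink of sqrt(2) per generation, so area-based quantities double each time.
for g in range(6):
    areal, linear = scaling_after_shrinks(2 ** 0.5, g)
    print(g, round(areal, 1), round(linear, 2), round(areal / linear, 2))
```

The last column, areal growth divided by linear growth, equals the linear growth itself, so under this assumption the mismatch widens with every generation.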

There are little tweaks (wider busses, bigger caches), but these all break down eventually and the bottlenecks become very real.

The introduction of hardware threading (really virtual CPUs) to use processing time otherwise lost to memory stalls ekes out a little more. Hyper-Threading, Sun’s Niagara processor: this is popping up all over the place.
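A simple, assumed model of why hardware threads help: each thread alternates between a burst of compute and a memory stall, and the core switches to another ready thread while one waits. This Python sketch ignores switch overhead and the extra memory bandwidth the additional threads themselves consume.

```python
def core_utilisation(threads: int, compute_cycles: float, stall_cycles: float) -> float:
    """Fraction of cycles a core's pipeline does useful work.

    Simple model (assumed): each hardware thread alternates between
    `compute_cycles` of work and a `stall_cycles` memory stall; while one
    thread stalls, the core runs another ready thread. With enough threads,
    stalls are fully hidden and utilisation reaches 1.0.
    """
    if threads < 1:
        raise ValueError("need at least one hardware thread")
    single = compute_cycles / (compute_cycles + stall_cycles)
    return min(1.0, threads * single)

# Hypothetical workload: 20 cycles of compute, then an 80-cycle memory stall.
for t in (1, 2, 4, 8):
    print(t, core_utilisation(t, 20, 80))
```

In this model, with four hardware threads the pipeline is idle 20% of the time, yet the OS would see four virtual CPUs that each look fully busy, which is the capacity-planning confusion the commenter goes on to describe.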

However, we’ve now arrived at processor architectures that have to be viewed as systems: the hardware threads on these machines are virtual resources presented to the operating system as individual CPUs. To understand the processor properly, you have to view it as a system, with memory access treated like I/O. It’s causing massive problems in interpreting capacity-planning figures, because reported CPU utilisation no longer reflects underlying processor resource usage with all these hardware threads. Only Sun’s Niagara has any real measurements of underlying core resources, and those are not the ones seen by the OS.

Basically, the unit of what counts as a computer has shrunk back to the chip. Once the hypervisor arrives there too, there will be a very different relationship with memory.

Chuck,

Yes, the idea of massive amounts of on-chip RAM is interesting, but remember that typical fast SRAM is 6T (6 transistors per bit), so your 16 billion transistors come to only ~300M bytes. I’ve heard of SRAM designs with fewer transistors, but don’t know if they have the same speed. Also, even on chip, it appears it’s hard to maintain the highest speed across a large memory array.
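The arithmetic behind that estimate, as a small Python sketch. It counts only the 6T storage cells, using decimal megabytes and gigabytes; real arrays also need sense amplifiers, decoders and wiring, so actual capacity would be lower.

```python
TRANSISTORS_PER_BIT = 6  # classic 6T SRAM cell; ignores peripheral circuitry

def sram_bytes(transistors: float) -> float:
    """Bytes of 6T SRAM a transistor budget could hold (storage cells only)."""
    return transistors / TRANSISTORS_PER_BIT / 8

def transistors_for(num_bytes: float) -> float:
    """Transistors needed for `num_bytes` of 6T SRAM (storage cells only)."""
    return num_bytes * 8 * TRANSISTORS_PER_BIT

print(f"{sram_bytes(16e9) / 1e6:.0f} MB from 16 billion transistors")  # 333 MB
print(f"{transistors_for(8e9) / 1e9:.0f} billion transistors for 8 GB")  # 384 billion
```

By this count, 8GB of 6T SRAM would need roughly 24 times the 16 billion transistor budget.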

We can only assume the graph is a lie since zero supporting evidence was presented.

Nobody used Rambus RAM except Sony, so their initiatives are irrelevant.

All servers are going to be NUMA anyway, so it’s a little late to worry about that.

Grumpy

The graph is generated from modelling, which is fine provided the assumptions are right (better than building something only to find it doesn’t work). The modelling was for a particular workload type and memory access pattern; it could be completely different for another workload.

As for all servers going NUMA, that might well be (and at least it means operating systems will be optimised for it). However, this isn’t about servers; it’s about multi-core chips. It’s possible to go NUMA with a multi-core chip and support multiple external memory buses with the appropriate high-speed interconnects in the chip’s memory management, but that still amounts to providing more memory bandwidth to the chip. Faster links will play a part, but they will hit clocking limits, so we will still end up with more paths, creating packaging and cost issues. The inter-chip NUMA interconnects would similarly have to be scaled up, adding even more to the packaging issues. Such a server architecture would also be NUMA at two levels, requiring more optimisation. It’s also possible to come up with workload types that don’t scale well on NUMA.

However, I’m more optimistic that major strides can be made in memory bandwidth than in hard disks, which are inherently constrained by mechanical and geometric issues. It’s also easier to deal with bandwidth issues than latency ones. The mismatch between increased processor speed and memory latency is one of the main factors behind the drive towards hardware multi-threaded cores.

Rex: I would guess, though the IEEE article does not say, that the graph shows performance with the *total* number of cores held constant while the number of cores per CPU goes up. For example, the “4 cores” example was a simulation of a 128-node cluster with four cores per node, and the “8 cores” example was a simulation of a 64-node cluster with eight cores per node.

So, for the stacked-memory case, things are just fine: there isn’t much performance gain to be had by going beyond 8 cores per CPU, BUT the density gain is linear, and the efficiency gain is likely to have a significant positive slope as well.
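The commenter’s fixed-total-cores reading can be made concrete with a short Python sketch. The 512-core total comes from the 128-node, quad-core example; that the simulation held this total constant is the commenter’s guess, not something the IEEE article states.

```python
TOTAL_CORES = 512  # 128 nodes x 4 cores, per the commenter's quad-core example

def cluster_layouts(total_cores: int, cores_per_node_options: list[int]) -> dict[int, int]:
    """Map cores-per-node to the number of nodes needed for a fixed total core count."""
    layouts = {}
    for per_node in cores_per_node_options:
        if total_cores % per_node:
            raise ValueError(f"{total_cores} cores do not divide evenly by {per_node}")
        layouts[per_node] = total_cores // per_node
    return layouts

print(cluster_layouts(TOTAL_CORES, [4, 8, 16]))  # {4: 128, 8: 64, 16: 32}
```

Each doubling of cores per node halves the node count, which is the linear density gain the comment describes even when per-core performance stays flat.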

Want to dive deeper on the problems with massively multi-core system design?

David Patterson, UC Berkeley, on “The Parallel Revolution Has Started: Are You Part of the Solution or Part of the Problem”, at a Google TechTalk a few days ago:

http://www.youtube.com/watch?v=A2H_SrpAPZU

He even touches on I/O issues and the future of flash. It’s worth watching to the end; his answers to audience questions are illuminating too.