Thinking about cloud

Amid the hype and glitz around cloud storage and computing, it can be difficult to separate the essential from the transitory. The word "scale" is abused; APIs are debated; business models are in flux; and new varieties of cloud infrastructure are being developed or marketed every month.

To get a handle on the concept, I’ve developed a descriptive model that may help move the conversation forward.

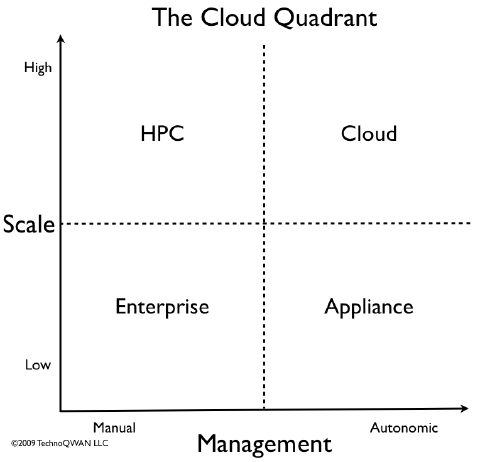

The essential elements of cloud are scale and management. Everything else is negotiable – including cost.

Putting scale on the vertical axis and management on the horizontal axis of a graph gives a simple visual picture of the scale and management environment.

Scale is reasonably self-explanatory: single namespace; single management domain; reasonable upward performance increment per incremental dollar investment.

Management is perhaps more complex. Researchers at IBM have long championed the idea of autonomic (self-managing) systems. At the highest level, cloud systems require an autonomic management paradigm: there’s just too much gear and too many failures for people to fix. The infrastructure must be self-healing, load-balancing, and fault- and disaster-tolerant.

These are qualitative, not quantitative, metrics. However, it is worth exploring why the other quadrants are named as they are.

- Appliance. This quadrant shares many of the cloud’s self-management capabilities, but at a smaller scale. Today these systems are popular for large-volume imaging applications in medicine and media production.

- Enterprise. Enterprises don’t have the luxury of a few primary application types. They need systems that adapt to a wide variety of legacy application requirements. In addition, they face considerable regulatory and legal constraints that make tuning the storage infrastructure imperative.

- High-Performance Computing. HPC systems need to be highly tunable to meet specialized application requirements. When an application takes days or weeks to run, even a few extra percentage points of performance translate into hours or days saved.
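The positions on the chart are qualitative judgments, not measurements. Purely to make the geometry of the model concrete, here is a minimal sketch that assumes each system has been given a hypothetical score between 0 and 1 on each axis, with an assumed midpoint of 0.5 separating "low" from "high". The `classify` function, the scores, and the threshold are illustrative inventions, not part of the model as published. The quadrant layout (cloud upper right, appliance lower right, HPC upper left, enterprise lower left) follows the descriptions above and the placements discussed in the comments.

```python
from enum import Enum


class Quadrant(Enum):
    CLOUD = "Cloud"
    APPLIANCE = "Appliance"
    ENTERPRISE = "Enterprise"
    HPC = "High-Performance Computing"


def classify(scale: float, autonomy: float, threshold: float = 0.5) -> Quadrant:
    """Place a system on the scale/management quadrant.

    scale:     hypothetical score in [0, 1] for the vertical (scale) axis.
    autonomy:  hypothetical score in [0, 1] for the horizontal (management)
               axis; 0 = highly tunable/manual, 1 = fully autonomic.
    threshold: assumed midpoint splitting each axis into low and high.
    """
    for name, value in (("scale", scale), ("autonomy", autonomy)):
        if not 0.0 <= value <= 1.0:
            raise ValueError(f"{name} must be in [0, 1], got {value}")

    high_scale = scale >= threshold
    autonomic = autonomy >= threshold

    if high_scale and autonomic:
        return Quadrant.CLOUD       # big and self-managing
    if autonomic:
        return Quadrant.APPLIANCE   # self-managing, smaller scale
    if high_scale:
        return Quadrant.HPC         # big, but hand-tuned
    return Quadrant.ENTERPRISE      # smaller, tuned for legacy needs


if __name__ == "__main__":
    print(classify(0.9, 0.9).value)  # Cloud
    print(classify(0.2, 0.8).value)  # Appliance
```

A hard threshold is the simplest way to express "which quadrant"; it deliberately ignores how close a system sits to a boundary, which is exactly the kind of nuance several commenters raise about their own placements.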

Vendors on the quad

In the second chart I’ve made a first-order approximation of where various systems sit on the cloud quadrant. These positions are not cast in concrete or even, at this point, Jell-O. Nor should readers assume that any quadrant is superior to any other in a marketing or technical sense.

I ask readers and vendors for their help in creating a more comprehensive cloud quadrant. There are vendors I know I left out, and other vendors may feel that their scalability or management capabilities are wrongly estimated.

I expect to do more work on this in the weeks ahead. It may prove helpful in sorting out the currently poorly differentiated scalable storage and compute markets.

The StorageMojo take

There is no doubt that Internet-based storage and computing represents a major paradigm shift, not unlike the shifts to PC networks in the 80s and to client-server computing. In all of these shifts, the imagined long-term benefits have far outweighed the immediate and very real costs.

But cloud computing is different: it is the first pay-as-you-go infrastructure. Every user can look at the price tag and make an economic decision based on real costs. In the 80s it took years for good data on the costs and benefits of PC networks to emerge. Good thing too, since CFOs would have screamed.

Tens of thousands of companies and individuals are adopting cloud today based on benefits they see and costs they pay. Yes, there is much hype around cloud computing. But customers are voting with their dollars, and that tells us there is a real economic advantage in cloud computing as well.

Courteous comments welcome, of course.

Hi,

You’re missing DataCore on the quadrant. Storage virtualization fits well with the cloud and also provides high scalability (from 0.5 TB to unlimited without any change to the infrastructure; from a single node to synchronous HA and/or asynchronous DR over IP; from JBOD to high-end hardware SAN, mixing brands and models) and manageability (again, a single console from one node to HA/DR, from 0.5 TB to unlimited, from JBOD to SAN, for every vendor and model).

I don’t quite agree with your definition of what differentiates cloud computing (I think pay-as-you-go and multi-tenancy are more essential than ease of management) but, for the sake of non-argument, let’s say that it’s a useful quadrant model anyway. Here are some suggestions.

PVFS2 and GlusterFS are probably well known and widely used enough to deserve places in the top left quadrant. HDFS has significant traction in the top right. MPFS, GFS, and OCFS all belong on the lower left, and “classic” NetApp or EMC Celerra as well. Permabit and Cleversafe belong somewhere, probably lower right. Cassandra deserves a place on the chart more than Dynamo by virtue of being functionally equivalent and also available outside of Amazon’s private infrastructure. OTOH, I think those kinds of storage are so different that they deserve to be on a separate table alongside things like BigTable, Hypertable, CouchDB, etc. I think you under-represent the scale of Isilon and Atmos, and rather predictably over-represent the scale of Ibrix. If you’re going to include Parascale and MaxiScale, might as well include Mezeo and Zetta as well.

That’s all I can think of for now.

Robin,

There is probably room for two different quadrants about cloud storage: one for filesystems/technologies and one for products/companies.

It would be clearer for readers!

Enrico

Oh yeah, Exanet is in there too. Probably LSI/ONStor as well.

Don’t forget BlueArc as well. We’re near the top of the chart on Scale, and are firmly entrenched in the HPC space. However, I think that our management rides the line between HPC and cloud in your model above.

Jeff,

Aren’t multi-tenancy and PAYG binary? They may be necessary but are they competitive differentiators?

Robin

I think they’re competitive differentiators for cloud vs. non-cloud, but not within the cloud because without them what you have is something other than a cloud – perhaps a grid, perhaps a virtual data center, whatever. The business case for cloud computing is based on two things:

* You pay for exactly what you use, and not a penny more. This reduces OPEX to a minimum, and all but eliminates CAPEX.

* Even though you might not own or house the equipment yourself, you don’t give up a guarantee of getting what you paid for. You still have that through an SLA, which in turn requires a technical infrastructure capable of supporting/enforcing full multi-tenant isolation.

The only thing besides those that makes a cloud is location transparency. If you have those features, you have a cloud. You could score low on both scale and autonomy and still have a cloud. You could forgo virtualization and web standards and a bunch of other features and IMO still have a cloud, though it might not be an economically viable cloud (see http://pl.atyp.us/wordpress/?p=2316). I’m sure many cloud advocates would insist that I’m wrong about that last part, but none of the other definitions I’ve seen treats either scale or autonomy as *essential* characteristics.

Cloud storage certainly tends to have certain features, including high scale and high levels of autonomy, but those are not *defining* characteristics. There are multiple technology shifts occurring all at once – from physical to virtual, from proprietary to open, from tight coupling to loose, from one tier of storage to several, etc. Companies that are ahead of the curve in one area are often ahead of the curve in several. Often the result is a convergence of two or more characteristics which are related only in terms of when they became feasible or popular to provide. To see this, one need only look at your chart in terms of autonomy vs. project age. The projects on the non-autonomous left go back a decade or more, while those on the right are quite new. Autonomy is to the right because time is to the right, and “cloud” is on the right for the same reason, but that’s common cause rather than common definition.

This is a thought-provoking map. Most of the solutions fit in a line from lower left to upper right. It would be great to hear more from you on the solutions that are exceptions to this – why, what it means, etc.

Robin,

Truly useful exercise and representation!

Can you please do me a favor? Draw a vertical line on the far right side of the “Autonomic” axis and label it “Caringo CAStor”.

Thanks 😉

Paul,

My understanding is that Caringo hasn’t tested and/or installed over 32 nodes in a single FS. Update: Paul tells me that they’ve tested over twice as many and that customers have gone well beyond that – but because they license by the TB and not the node they don’t have good visibility into how many nodes their bigger sites have. End update.

Robin

Robin,

Wrong understanding! Even our own in-house testing uses more than double that number of nodes, with customers going well beyond that. We have no way of verifying the exact number of nodes, as we sell by the terabyte – and some sites have over 500 TB now.

However, that is nothing compared to a few projects in preparation. One of them calls for 1,500 servers – 15,000 disks in total – 15 petabytes – all of that in a single flat namespace (we don’t do FS, as you may recall). We’ll keep you posted.

–Paul

BTW, it would be nice to apply equal rigor to all names on the chart – including some of the stuff in the upper right corner. I understand clouds consist mostly of vapor, but still… 😉

You’re missing Sun SAMFS/QFS. It is used by several large-scale clusters in the archive space, for images and videos. Guess where…

Sun QFS and SAMFS are open source, like ZFS. There’s an interesting roadmap for all of them.

Other interesting open-source projects here:

MogileFS, Hadoop, Coda, Sun Celeste

For Object Matrix MatrixStore, you are looking at an appliance-type solution: highly automated (plug and play) but only tested to 25 nodes.

I have trouble with the Cloud nomenclature: I simply don’t think of Isilon, MatrixStore, etc as Cloud storage, nor do I think of Cloud storage as being “location transparency”.

For me, the term cloud storage strongly implies that there is a managed (or self-managed) soft service available to clients. Sure, the underlying components that make up that cloud-based service may include some of the hardware solutions listed, but the premise that the cloud quadrant contains storage solutions as opposed to storage services (among which I would list storage services such as S3, HPC, applications such as Google Docs, etc.) doesn’t sit well with me… I’d rather call the chart a “Scalable Storage Solutions” chart, or something similar. Obviously that would defeat the purpose of “bringing clarity to cloud terminology”, but is this really a cloud quadrant?

That said, I find the axes thought-provoking and a very useful, visual way to compare competing products in a marketplace that has so many shades of gray.

A good read, thanks Robin et al.

The other thing to consider in this argument, as it pertains to PAYG and multi-tenancy, is how well the X-axis “management” capabilities are set up to support both of those objectives.

I’d agree with the statement that they are binary elements which you either have or don’t have. However, some of those cloud storage technologies are specifically set up to support keeping track of and metering thousands of unique customers. Others are less service-provider friendly.

It’s great to have an “autonomic” system that saves you opex on storage admin salary – but if you’re spending all that opex on software programmers, billing analysts, and account managers, the opex goes out the door all the same.

I think what muddies the water here, as happens with many cloud discussions these days, is the merging of “Internal” cloud with “Service Provider” cloud. It’s understandable why they merge – after all who wants to exclude one customer base in favor of the other? – but evaluation against the X-axis is different depending on your scenario.

Another vendor worth evaluating in this space is DDN – their Web Object Scaler (WOS) technology is upper-right material.

Hi Robin – This is a nice exercise. Barracuda offers the Barracuda Backup Service, which is a hybrid local and offsite backup using both a local storage appliance and cloud storage. Our position would be highly automated management with the convenience of a complete backup hardware/software solution bundled with cloud storage for disaster recovery. Keep up the good analysis!

I disagree with the poster who said that autonomics was less important than pay-as-you-go. In my mind, “fully automated chargeback” is merely a subarea of autonomics. Autonomicity defines the cloud.

Robin, I’d be interested in your scoring methodology for this chart. For example, why did you score Parascale as more cloud-like than ByCast? Why is Atmos worse than all the others? Why is MaxiScale so far into the upper right corner? My interest is not academic: we’re currently intensely interested in cloud storage technologies, with a petascale-level problem lurking imminently nearby…

Joe Kraska

BAE Systems

San Diego CA

USA

Fantastic, Robin – I’m glad to see someone finally trying to provide a qualitative assessment of the scalable storage landscape. I agree with Isilon’s placement on the X axis – it is close enough for horseshoes. 😉

Our placement on the Y-axis, however, does not give enough credit to the scalability of Isilon and OneFS. You define scale as “single namespace; single management domain; reasonable upward performance increment per incremental dollar investment.”

Single namespace and single management domain – from as little as 6 TB to over 5 PB. Check.

Single-system performance that scales up to 45 Gbps and 144 nodes – with the customer able to choose, on a per-node basis, whether to stay on the linearity curve by adding like nodes or to deviate from it. Our customers can add denser nodes over time, which shifts the price/performance curve toward lower cost, or they can add higher-performing nodes (and accelerator nodes) to increase performance over time. I would certainly argue that the cost per node is fixed/predictable and competitive with any other commercial vendor above us on the scale axis. Check.

Not only can customers dynamically adjust their system over time to maximize cost or performance, they can do so in a fully backwards-compatible, investment maximizing way. An Isilon customer can happily run multiple generations and types of hardware in the same cluster and choose how and when to adjust – without any disruption, of course.

All of this with a highly advanced protection model incorporating Reed-Solomon encodings, allowing for up to four simultaneous failures with 20% overhead or less and *per-directory flexibility*; core enterprise features, including per-directory snapshots, quotas, and replication; and of course, a proven track record: over 1000 customers, over 100 PBs installed, and some of the world’s leading social networks, next-generation cloud bioinformatics, photo-sharing sites, etc.

There is no doubt that Isilon, based on a proven track record of highly scalable technology and demanding customer environments, should be firmly in the upper-right quadrant.

Nick

http://twitter.com/isilon_nick

Another one I forgot: Scalable Informatics in the appliance quadrant. Sorry, JoeL.

@Jeff

Just read this post now, thanks for the shout out 🙂 I’d humbly like to offer up (our partners) Newservers.com as a provider, but I am not quite sure where to put them.

We have a nice ~0.2PB HPC storage cluster going into a supercomputer site soon. Benchmarks I ran yesterday on one node show ~3GB/s sustained (no SSDs in this apart from the boot drives … all spinning rust) for streaming 192GB from disk, and 2.5GB/s streaming it to disk. We’re running GlusterFS atop InfiniBand QDR atop our boxen. Incremental scalability is pretty seamless … drop another node in, boot it up, and about 10 minutes later it’s available. GlusterFS doesn’t (yet) have the ability to hot-add/remove storage, but it is on the roadmap for the next release. Until then, we are automatically generating the requisite server config files, and can automate a push, or cause a pull and restart, on the client side to approximate this capability.

My point being that GlusterFS should definitely be up there, certainly in HPC, but they are also doing some interesting things in the cloud space. Might be worth a talk with Hitesh Chelani about their offerings.

As for Scalable, we are small, but I can say that interest in our offerings is growing fast. The last several months have been some of the best in our company’s history. We now have value-added resellers integrating/using these systems in their own offerings, as well as customers/partners building larger (cloud-like) storage.

Robin,

I don’t want to spoil a pretty good party, but I’m missing an argument that to me seems to define the cloud thing. A while back (the ’60s and ’70s) we had time-sharing services, e.g. from IBM and GE. These were vertically integrated services, where the vendors had to provide all the components from the computer rooms to the application software. This time, enabled by the Internet and a bunch of open protocols, we can have an ecosystem where a software vendor can choose his platform, build his applications and open shop without having to invest in OS, servers, storage and networks. He can even leverage software services from other vendors to build or augment his products.

I believe this is the defining aspect of Cloud Computing. Out of necessity the first players have built their own vertical clouds, but the future of Cloud Computing is not a forest of chimneys. Clients will use a variety of services, some specialized for their line of business, some fairly general, from a dozen or more suppliers. Just as our physical logistics ecosystem allows us to purchase goods, from specials to commodities, from a set of stores in our neighborhood, the cloud ecosystem will have to support the frictionless exchange of digital services and their data to serve the whole breadth of the market. Scalability and management are necessary, but not sufficient, for a storage system to flourish in the cloud.

Ernst

Ernst,

Certainly, scale and management are necessary but not sufficient for cloud infrastructure. For any infrastructure, reliability, availability and serviceability are also vital, among many other attributes. But when you look at cloud infrastructures – or those that would be cloud – high scale and a degree of autonomic management seem to be key enablers. To your point about logistics ecosystems, this is all plumbing, and whether we go to a utility model or a retail model or something else for delivery is still up in the air.

Robin

I am grateful for the insight you bring to working the differentiators down to a familiar business quadrant model. Especially useful is the ability to map other potential differentiators onto these two.

I am in an “Economics of Strategy” class as part of a part-time MBA. We expect to put forth a thesis that Microsoft should invest in a vertical integration into green data centers as a means of preserving, then replacing, software revenues currently threatened by the cloud.

Imagine our glee with the Wednesday announcement of the large-capacity MS/Chicago Data Center. Probably not so green yet until they get to rural and renewables.

Can I assume your permission to reference your methodology as a means of explaining the market to our non-technical audience?