Talked to a company last week whose cloud app handles several billion transactions per month on a cluster. Sounds like SSDs could help them but how?

In a paper from the latest 5th Biennial Conference on Innovative Data Systems Research (CIDR ’11) researchers Philip A. Bernstein and Colin W. Reid of Microsoft and Sudipto Das of UC Santa Barbara have a suggestion: Hyder – A Transactional Record Manager for Shared Flash (pdf).

As underlying hardware changes – faster networks, large memories, multi-core CPUs and SSDs – database software architectures may change too. Hyder architecture supports

. . . reads and writes on indexed records within classical multi-step transactions. It is designed to run on a cluster of servers that have shared access to a large pool of network-addressable raw flash chips. . . . Hyder uses a data-sharing architecture that scales out without partitioning the database or application.

No partition scale-out

Today, most popular database clusters partition the database across multiple servers. Done well this works, but at some cost. The database design is non-trivial – cross-partition transactions, cache coherence, load balancing, scaling and multi-server debugging – are knotty issues which translate into higher design and operation costs.

Hyder eliminates partitioning, distributed programming, layers of cache, remote procedure calls and load balancing. All servers can read and write the entire database – so any server can execute any transaction. Load-balancing is simple: direct new transactions to lightly-loaded servers.

Each update transaction runs on one machine and writes to a shared log – so there’s no 2-phase commit. And no 2-phase commit locking, which can force performance off a cliff when workloads spike.

The 3 main components of Hyder are the log, the index and the roll-forward algorithm.

Log

The log runs on multiple flash devices – chips, DIMMs or ??? – and writes multi-page log records across multiple devices with parity to enable log recovery after device failures.

Hyder uses a multi-versioned database – old record versions aren’t updated-in-place, only the most recent version of a record is used – which has a couple of useful properties:

- Server caches are inherently coherent since only the most recent versions of records are used.

- Data can be read while writes are in progress.

- Queries that can be decomposed can be run across multiple servers concurrently for a faster response time.

[This may seem like voodoo to ACIDheads. A good technical intro to multi-versioning concurrency control (MVCC) is Multi-core software: to gain speed, eliminate resource contention.]

Servers run a cache update process that keeps them current with updated records. Server caches don’t have to be identical and the cache invalidate messages that most clusters use for cache coherency aren’t needed.

All log writes are idempotent appends, so if a write fails the server can simply reissue the write. The authors describe several error modes and how Hyder handles them.

Index

The index stores the database as a search tree with each node a [key, payload] pair. The tree can store, for example, a relational database. The index tree is also represented in the log.

Tree nodes are not updated in place. When node n is updated, a new copy – n’is created. Then, of course, the parent node must be updated and so on up the tree.

A binary tree minimizes the number of node updates, but can be processor intensive. The optimal tree structure for Hyder is not yet resolved.

Garbage collection is an issue. Each node pointer includes the ID of the oldest reachable data element. An element older than any that is pointed to by a node is garbage.

Roll-forward algorithm

This is the key process of Hyder.

When a record update begins, one server executes the transaction. The server is given a copy of the latest database root, a static snapshot of the entire database.

The updates are stored in a local cache and after execution the after-images are gathered into an intention record, which is broadcast to all servers and appended to the log. The update’s readset is included in the intention record, to insure all intentions are properly ordered, none are lost, and the offset is made known to all servers.

Each server can assemble a local copy of the tail of the log, which is used to determine if there are conflicting updates. The meld procedure manages conflicting updates.

Appending the intention to the database log doesn’t commit the transaction. The intention references the static snapshot of the latest database root. The meld procedure determines if any committed transactions since the snapshot conflict with the intention.

If they don’t, all is good. If they do, the transaction is aborted.

All servers roll forward using meld and don’t message each other about committed and failed transactions. Therefore there is no lock manager and no 2-phase commit.

Contention

Losing the lock manager and 2-phase commit should help performance unless other points of contention throttle the system. Hyder’s points of contention include appending intentions to the log, melding the log at each server, and aborting transactions.

Intention appends are serial. The lower the write latency the more appends can be written. A 10us write latency means a 100k TPS.

Network latency adds to write latency. Faster switches improve append performance.

The abort rate depends on the number of concurrent transactions that conflict. Fast transactions reduce the probability of aborts by reducing the number of concurrent transactions.

The worst case is a record subject to multiple updates from different servers. Detecting high-conflict transactions and serializing them by forcing them onto 1 server would reduce the hot data performance hit.

Performance

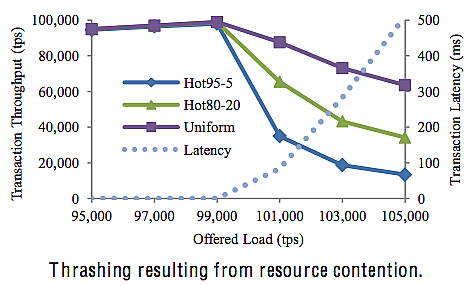

The authors model Hyder’s performance with a focus on the high-contention corner cases. In general, the tests show linear scaling as servers are added.

The problems come when the underlying hardware limits are exceeded. Increasing execution times mean more aborts and performance falls off a cliff. From the paper:

The StorageMojo take

We’ve been building disk workarounds for for decades. We now tend to assume those workarounds are fundamental architectural requirements rather than hacks.

The Hyder paper asks us to imagine a world where non-volatile mass storage is fast and cheap – and how we could re-architect basic systems to be faster and cheaper too.

The authors conclusion is a fair assessment:

Many variations of the Hyder architecture and algorithms would be worth exploring. There may also be opportunities to use Hyder’s logging and meld algorithms with some modification in other contexts, such as file systems and middleware. We suggested a number of directions for future work throughout the paper. No doubt there are many other directions as well.

Courteous comments welcome, of course. I hope to get to some of the other CIDR papers before FAST ’11 snows me under. Update: Phil Bernstein was kind enough to scan the post and I’ve updated 1 minor error. He also mentioned that it won the Best Paper award at the conference. Those CIDR folks have great taste in papers, don’t they?

Why Flash ? Why not run DB run “in memory”. Add some level of redundancy to handle hardware failures.

Naga,

2 reasons: main memory doesn’t scale across servers; and DRAM costs quite a bit more than flash.

Robin

Hi,

Oracle is build on solid MVCC , it does not depend on shared locks ! as MS-SQL server (prior to 2005/2008 with snapshot tx commit they can leverage a bit now …) or DB2 UDB ..and rest of the know shared lock based systems.

Interesting, this sounds just like Clustrix, which is currently shipped mostly as an appliance, but is also ACID compliant, fault tolerant, uses SSDs for fast access, and leverages NVRAM to guarantee durability.

http://www.clustrix.com/product/