It was almost 4 years ago that IBM bought XIV (See 2008: cluster storage goes mainstream). StorageMojo couldn’t understand IBM’s product positioning – yeah, the world was clamoring for a block device for multi-media – but liked the architecture.

Now XIV appears to be making good on its early promise. Here are XIV bullet points to consider.

- XIV interface the “most elegantly simple” in the industry?

- Design goal: deliver the needed app performance using large drives.

- 3rd gen version – now with Infiniband! – announced.

- Supports 3TB SAS drives.

- Data divided into 1MB chunks and auto distributed across all disks pseudo-randomly.

- Infiniband boosts performance ≈4x, making it competitive with Big Iron arrays at lower cost.

- Full system is 180 drives.

- Gen3 3TB drive data rebuild takes 54 minutes.

- Failing drives are rebuilt from data copies on other drives.

- Minimum LUN size is 17GB.

- Thin provisioning is standard.

- Using Infiniband, XIV’s latency and bandwidth are on par with IBM’s Big Iron DS8000 series.

- Fidelity Investments replaced a 240 drive – all 15k – Big Iron array with a 180 drive XIV and found it was 60% faster.

- Fidelity now has 86 XIV frames installed.
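To make the chunk distribution and rebuild bullets concrete, here is a minimal sketch. It assumes each 1MB chunk is mirrored on two different drives chosen pseudo-randomly; the function names and the placement policy are hypothetical illustrations, not IBM's actual algorithm.

```python
import random
from collections import Counter

CHUNK_MB = 1   # chunk size from the bullet list
DRIVES = 180   # full-system drive count from the bullet list


def place_chunks(volume_mb, drives=DRIVES, seed=0):
    """Give every 1MB chunk a (primary, mirror) pair of distinct drives.

    Hypothetical policy: both drives are picked uniformly at random.
    """
    rng = random.Random(seed)
    placement = []
    for _ in range(volume_mb // CHUNK_MB):
        primary, mirror = rng.sample(range(drives), 2)  # always two different drives
        placement.append((primary, mirror))
    return placement


def rebuild_sources(placement, failed):
    """Count the chunks each surviving drive must supply to rebuild `failed`."""
    sources = Counter()
    for primary, mirror in placement:
        if primary == failed:
            sources[mirror] += 1
        elif mirror == failed:
            sources[primary] += 1
    return sources


placement = place_chunks(volume_mb=100_000)
sources = rebuild_sources(placement, failed=0)
print(len(sources), "surviving drives contribute to the rebuild")
```

Because the surviving copies are scattered, the rebuild reads a little from almost every drive rather than hammering a single mirror partner, which is the usual explanation for short rebuild times on large drives.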

The StorageMojo take

The XIV architecture was its first strong point. And now it looks like commodity hardware has caught up with the architecture.

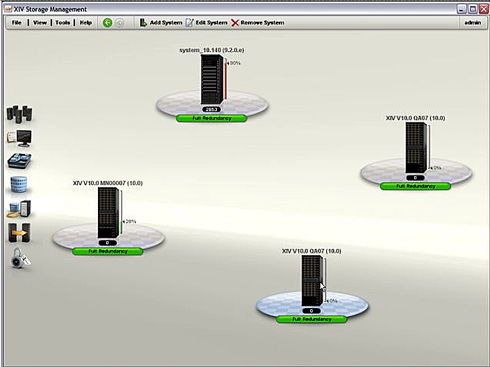

IBM likes the XIV management interface so much that they are standardizing on it. It is the best looking storage GUI I’ve seen – much better than the 1-step-up-from-Excel look of most – and I’d like to hear how it works for large shops.

I expect that a lot of shops that are using EMC and HDS arrays would be surprised at how far XIV has come. I was.

Courteous comments welcome, of course. IBM brought me out to a well done customer event in California and put me up for a couple of nights while schmoozing me. Other than that no lucre changed hands.

Love to see some comments from XIV users. How’s it working for you?

Nice write up. Will spread the word…

86 frames is huge. Now if only they could connect all of them together as a grid to leverage the power of all the units. A grid of grids.

Hi, is the XIV GUI compatible with Windows 2000 terminal server environments?

86 frames. Ouch. Talk about horrifyingly inefficient and foolishly wasteful.

Each frame requires a minimum 7.5kW of power with a peak of 8.5kW and 28.5kBTU/hr. It provides usable capacity (excluding overprovisioning via thin LUNs) of ~160TB using 2TB disks. Each frame is one floor tile, and a standalone unit. Presume they are doing replication so their actual usable frame count is 43.

That’s 731kW required to provide an estimated 6.8PB of disk needing over 200 TONS of chiller capacity by itself and taking up hundreds of tiles of space – 4 deep, 1 wide for clearance requirements. And that’s presuming no Gen1 racks where they chew up 9kW and spew over 37kBTU/hr each.
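The arithmetic behind those totals can be reproduced in a few lines. This is a sketch using only the per-frame figures quoted in this comment; the one outside constant is the standard 12,000 BTU/hr per ton of refrigeration, and the variable names are illustrative.

```python
BTU_PER_TON = 12_000          # BTU/hr per ton of refrigeration (standard conversion)

frames = 86                   # Fidelity's installed frame count
peak_kw_per_frame = 8.5       # peak power per frame, as quoted
btu_per_frame = 28_500        # heat output per frame in BTU/hr, as quoted
usable_tb_per_frame = 160     # usable TB per frame with 2TB disks, as quoted
tiles_per_frame = 4           # 4 deep, 1 wide including clearances

total_kw = frames * peak_kw_per_frame
chiller_tons = frames * btu_per_frame / BTU_PER_TON
# Assumes half the frames are replication targets, as the comment presumes.
usable_pb = (frames // 2) * usable_tb_per_frame / 1000
total_tiles = frames * tiles_per_frame

print(f"power: {total_kw:.0f} kW")
print(f"cooling: {chiller_tons:.2f} tons")
print(f"usable capacity: {usable_pb:.2f} PB")
print(f"floor tiles with clearances: {total_tiles}")
```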

So, uh, where exactly is this mythical efficiency again?

For reference: DS8700 maxes at 2PB, DS8800 at 2.3PB. At 2.3PB per DS8800, that’s just 4 systems to capacity match 6.8PB – 8 with replication – each system a total of five cabinets. That’s 40 base tiles for 8 complete systems that can be managed through 4 interfaces, versus 86 base tiles (344 with clearances) for less IOPS, less interfaces, less cache, less capacity.

When Fidelity standardized on XIV, their VMware IO latency was reduced by 100x, Exchange by 50%, and Tax Lot Accounting (mission critical app inline with trading workflow) performance improved by 60%, all while slashing their storage TCO. Don’t believe me? Watch Mark Tierney of Fidelity Investments explain the firm’s experience with IBM XIV here: http://www.ibmreferencehub.com/STG/IBM_storage_summit2011.

The presentation is called “Redefining Storage Efficiency for Application Performance”. Mark speaks at 19:35.

@Phil Jaenke: While your unix beard is impressive, I find Mr. Tierney more credible since he is a VP of storage at a tech-savvy global financial services firm.

@Brian Carmody – that’s all well and good, except that the numbers shown above prove that it is not efficient. According to IBM’s claims, DS8k to XIV is a ‘fair’ comparison in terms of performance and capacity. But when you run the power, capacity, and floor space numbers, which are objective statements of fact, you do not see an ‘efficient’ array at all.

The magical performance numbers are as always, another Unicorn. Latency down by 100x, Exchange faster, etcetera – as compared to what? As usual, we don’t know. This is why I ignore relative performance statements. The fact is that any given storage system can be misconfigured to the point where peak performance is 10% of capability or worse. People overload them, mismanage them, lose track of what’s on it every day of the week.

Mr. Tierney can have all the ‘credentials’ he wants; he’s a VP. That means in most cases, that he lacks the hands-on day to day technical experience required to ascertain whether or not a given system is configured to best practices, or whether or not it’s performing within expectations. He relies on staff he manages to determine these things and manage these systems. I have no specific reason to believe Mr. Tierney isn’t capable, but every reason to believe that he does not personally touch those systems and investigate performance problems.

And again; it’s relative to an unknown system with an unknown configuration other than “multi vendor” and a “240 15K disk” system. For all we know, they were using maxed out IBM DS4700’s and EMC CX3’s. He focused on the company and their data center situation, without discussing the actual storage they migrated from. If I go from 2x internal 7200RPM SATA to XIV, of course I’m going to have “BIG NUMBER!” Same applies here.

So, the facts remain the facts. In power, cooling, and physical footprint, XIV is incredibly inefficient. I grabbed the Gen3 numbers to make it a bit more apparent, too.

While the base DS8800 cabinet is larger, the fact remains that the XIV’s low point of 28K BTU/hr is nearly a full ton of chiller capacity above each DS8700 cabinet – 22,200BTU/hr peak for each 94E. Power consumption on XIV Gen 3 is minimum 6.3KW and peak 7.0KW versus DS8700’s peak of 7.8KW for the base rack and 6.5KW for each expansion.

That means that a fully configured, maximum capacity IBM DS8700 running at 100% load at all times provides 2PB of storage at 33.8KW and 115,400BTU/hr, managed through one interface.

To match storage capacity requires 9 XIV systems, managed through 9 interfaces. These systems will demand 63KW of power and produce 228,000BTU/hr.

In terms of floor space, the DS8700 will require 6 tiles excluding clearances. XIV will require 9, or 50% more physical space. When clearances are taken into account and adjusted for reality, it stays the same.

You can argue performance all you like, but these are the factual numbers from IBM themselves. The operational costs of XIV at large scale are nearly double those of DS8700.
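For anyone who wants to check the "nearly double" figure, here is a short sketch built from the numbers quoted above. It assumes the 33.8KW DS8700 total is one base rack plus four expansion racks, which is the combination that reproduces that number; the variable names are illustrative.

```python
# DS8700 at maximum capacity: one base rack plus four expansion racks (assumed split).
ds8700_kw = 7.8 + 4 * 6.5
ds8700_btu = 115_400          # BTU/hr, as quoted

# Nine XIV Gen3 frames at peak power to match 2PB.
xiv_frames = 9
xiv_kw = xiv_frames * 7.0
xiv_btu = 228_000             # BTU/hr, as quoted

power_ratio = xiv_kw / ds8700_kw
heat_ratio = xiv_btu / ds8700_btu

print(f"DS8700: {ds8700_kw:.1f} kW, XIV: {xiv_kw:.1f} kW")
print(f"power ratio: {power_ratio:.2f}x, heat ratio: {heat_ratio:.2f}x")
```

Both ratios land between 1.8x and 2x, which is where the "nearly double" operational cost claim comes from. It says nothing about performance per watt, which is the point the replies argue.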

rootwyrm, you spammed Wikibon with XIV FUD as well, and most of it didn’t hold there either.

I think your points are disingenuous at best. I’m sure Fidelity didn’t just throw roughly 40+ million dollars’ worth of storage onto their floor on a lark. Obviously they saw value in the XIV platform and probably did their own internal ROI.

XIV has a fully capable CLI that in larger deployments is going to be used over a per-frame GUI anyway (you can manage multiple frames from a single GUI though).

BTU/KW do factor into storage decisions, but let’s face it, performance for production workloads is what we are really looking for. Show me a single company that decided on a storage deployment based on its power consumption over its performance and capabilities.

Let me first disclose I work for IBM. . .and it’s nice to see someone so passionate about the DS8000. . .another fantastic solution. . .but. . .

It’s easy to fall into a trap of comparing NL-SAS with NL-SAS but, without taking into account the overall architecture, you’re missing the point (a point a savvy architect with ample hands-on experience should surely appreciate).

The power and cooling math above is correct (enough), but assuming 2.3PB in each DS8800 makes this a misleading comparison. Granted, you can physically populate it with enough NL-SAS drives and create a raw capacity pool of this size, but a) you don’t end up with that in usable capacity (XIV numbers are usable) and b) you sure would not get the performance profile of an XIV if you put NL-SAS in a DS8800 (even with some SSD and Easy Tier).

Comparisons of power and cooling cannot be done on usable capacity alone. To create a solution (DS8800 or other) with a similar performance profile to XIV you would be using smaller/faster drives. . .meaning you would need more of them, filling more frames/floor space and ultimately drawing more power and requiring more cooling than the simple math above would indicate (and you’d better be an expert at data layout and reorg).

If you truly don’t need the performance WITH your capacity then maybe XIV isn’t the solution for you. . .but I doubt the DS8800 would be either. . .there are cheaper solutions out there (power, cooling, floor space and acquisition) for a simple capacity requirement (tried tape?).

Having said all that, there are times when smaller/faster drives (despite power and cooling disadvantages) are better – we’re in IT. . .”it depends”. Both solutions have their place. . .no one is saying otherwise. Understanding requirements (functional and non-functional) will help you determine which is ultimately best for a given client.

As for Fidelity. . .I think they’ve spoken. . .86 times. If the first few frames didn’t meet expectations/requirements would they have thrown good money after bad?

More things to know about XIV

– RAID 1 only

– Nearline only

The two items above, plus the fact that the system taps out at 180 disks no matter what, are reasons why I won’t even consider XIV for my past or present projects. It’s maybe a little bit worse than NetApp, which forces RAID 6 on you. I hope IBM fixes these things, but they haven’t done so to date, so there must be something in the architecture that is preventing it from scaling better.

I was totally expecting that once they got away from the 1GbE back end they could scale better, but it seems that didn’t happen. I suspect that even with the large number of CPU cores on the box, they simply can’t handle anything other than RAID 1 with their sub-disk distributed RAID. Need to do that stuff in silicon.

IBM released SPC-2 results for the latest gen XIV not long ago and I dug into it quite a bit. Very impressive throughput performance, though the cost of the system was much higher than I was expecting, and loads of cache will only go so far with random I/O workloads on 180 SATA disks. Now if you have a throughput-bound workload then the XIV looks to be a very fine system. I guesstimated/extrapolated (based on SPC-2 pricing) that for random I/O workloads the XIV is one of, if not the most expensive systems on the market (at least when it comes to published results), which was surprising to me given that it’s SATA disks.

IBM is adding SSD read cache soon on their latest stuff which looks interesting, wonder what the cost of it will be though.

It’s a nice write-up but awfully misleading. XIV’s architecture has some inherent flaws in it, which make it tough to be a serious contender in the “storage wars”. What they do, they do well, granted. BUT a few things to consider:

* The ‘60% faster’ claim has more to do with cache than actual drives on the backend. XIV has great cache methodologies. However, if your workload gets mixed up, or lands in a spot with tons of random reads, then you’re going to see really high latencies.

* They still haven’t improved their replication methodologies.

* Thin Provisioning is built in BUT it’s a separate pool of disk that can’t be intermingled. Converting to thin is an offline operation.

* I’m fairly sure they’re using the lower end 7.2K RPM SAS drives which, essentially, makes them just 3TB SATA.

* Since you only get just under 45% capacity utilization with them, you see more waste with these drives and because of their density you will see higher rotational latencies. Especially when you factor in a 1MB partition size on the backend.

Certainly this is not an enterprise system. It’s a good “starter san” or lower end type VTL strategy. Anything above that makes it risky, IMO.

Below you can see some answers and my comments at the end.

*The ’60% faster’ claim has more to do with cache than actual drives on the backend. XIV has great cache methodologies. However, if your workload gets mixed up, or lands in a spot with tons of random reads, then you’re going to see really high latencies.

Response: Was any application testing performed, or is this just an assumption? We collected workload data from an existing customer site (Oracle AWR/Statspack and iostat) and agreed with the customer on the I/O profile. We then simulated similar database transactions with Swingbench; during the simulation the system reached 199,000 IOPS at 1546 MB/s (without SSD). If you like, I can send you the benchmark results.

Another proof point is the Microsoft Exchange 2010 ESRP (Exchange Solution Reviewed Program) submission results: XIV Gen 3 scales to 120,000 mailboxes at 88% mailbox capacity utilization, whereas the nearest competitor, EMC V-Max, reaches 100,000 mailboxes at 45% mailbox capacity utilization with 880 drives in the V-Max system. You can review all the systems tested at http://technet.microsoft.com/en-us/exchange/ff182054

* They still haven’t improved their replication methodologies.

Response: XIV is capable of performing sync and async replication, and 3-site coordinated replication is on the roadmap. I advise watching the video of XIV async replication. A junior storage admin can establish remote replication between two systems in minutes. With other high-end storage vendors, this requires purchasing very expensive professional services.

* Thin Provisioning is built in BUT it’s a separate pool of disk that can’t be intermingled. Converting to thin is an offline operation.

Response: This is wrong. A couple of minutes ago I moved several LUNs, attached to a host and with transactions running on them, from a thick to a thin pool. I also tried the opposite; it is non-disruptive and online as well. If you like, I can send the screenshots if you give me your e-mail address.

* I’m fairly sure they’re using the lower end 7.2K RPM SAS drives which, essentially, makes them just 3TB SATA.

Response: I reviewed the drive manufacturer’s web page. The 3 TB drives have an MTBF of 2.0 million hours, 15k rpm Fibre Channel drives have 1.6 million hours, and SATA drives 1.2 million hours, which means the 3 TB SAS drives are more reliable. The slower rotational speed is easily compensated for by the parallelism of the grid (no hot spots are possible because of the equal distribution of workload and the tierless architecture) and by the larger amount of cache per usable TB (I advise you to compare max GB of cache / total usable TB with other systems; you will see that XIV Gen 3 has at least 3-4 times more cache per usable TB). You can see the 3 TB SAS drive details at the link below.

http://www.hitachigst.com/tech/techlib.nsf/techdocs/EC6D440C3F64DBCC8825782300026498/$file/US7K3000_ds.pdf

* Since you only get just under 45% capacity utilization with them, you see more waste with these drives and because of their density you will see higher rotational latencies. Especially when you factor in a 1MB partition size on the backend.

Response: On XIV we always talk about usable capacity honestly. We never hide anything or spring surprises after purchase. But let’s look at the EMC V-Max product guide: page 3 notes that the maximum usable capacity with 2 TB drives in RAID 6 and RAID 5 configurations is 2055.93 TB. But V-Max supports 2400 drives, for a gross capacity of 4800 TB. So my question is: why is 50% of the capacity lost even with a RAID 5 or 6 configuration?

http://www.emc.com/collateral/hardware/specification-sheet/h6176-symmetrix-vmax-storage-system.pdf

A similar issue also exists in HP 3PAR. Maximum raw capacities are much lower than expected based on the number of disks supported. For example, the V800 supports up to 1920 disks and supported drives include 2TB nearline disks. 1920 disks x 2TB/disk = 3,840TB, more than twice the 1600TB maximum raw capacity specification. For the V400, 960 disks x 2TB/disk = 1920TB, more than twice the 800TB maximum raw capacity specification.

http://h10010.www1.hp.com/wwpc/us/en/sm/WF06a/12169-304616-5044010-5044010-5044010-5157544.html?dnr=1
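The capacity gaps described above are easy to tabulate. This is a small sketch using only the drive counts and spec-sheet capacities quoted in this response; the dictionary layout and the helper name are illustrative, and note that the V-Max figure is a usable capacity while the 3PAR figures are raw maximums.

```python
TB_PER_DISK = 2  # all three examples assume 2TB drives

# name -> (maximum supported disks, published maximum capacity in TB)
systems = {
    "EMC V-Max (usable)": (2400, 2055.93),
    "HP 3PAR V800 (raw)": (1920, 1600),
    "HP 3PAR V400 (raw)": (960, 800),
}


def published_fraction(disks, published_tb, tb_per_disk=TB_PER_DISK):
    """Return published capacity as a fraction of disks x drive size."""
    return published_tb / (disks * tb_per_disk)


fractions = {name: published_fraction(*spec) for name, spec in systems.items()}

for name, frac in fractions.items():
    disks, published_tb = systems[name]
    print(f"{name}: {disks * TB_PER_DISK} TB by drive count, "
          f"{published_tb} TB published ({frac:.1%})")
```

In each case the published figure comes to less than half of what the drive count alone would suggest.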

Finally, my response to the “not an enterprise system” comment: 1500 customers (most of them migrated from traditional high-end vendors) shouldn’t be wrong. What defines an enterprise system? Performance? Reliability? … What do you think is missing in the XIV architecture? A major source of SPoF is drive rebuild in traditional RAID; if you shorten that time, you decrease your vulnerability to multiple components failing. XIV rebuild time for a 3TB drive is less than 1 hour. What is it in traditional high-end systems under workload? It is days. A single-rack XIV box can achieve over 500,000 IOPS; how many racks are needed in traditional systems? We can tolerate the failure of a single module with 12 drives in it. Can any well-known high-end system commit every time that there is no data availability issue if it loses a single drive shelf?

Hi Mert, you really know what you are talking about. I have 5 years managing storage, and like “JM” I was reluctant when my bosses decided to buy our first Gen2 XIV. I looked at the inside components and thought “what a piece of c&$#”. But then I realized that the performance was stunning (early 2009) and that it was very easy to manage. You can hire a junior storage admin and leave them alone with it in a couple of days.

Now we have XIV Gen3 with SSD caching and I don’t have words to describe this “marvelous” storage.

BTW, I’m not an IBMer

*The ’60% faster’ claim has more to do with cache than actual drives on the backend. XIV has great cache methodologies. However, if your workload gets mixed up, or lands in a spot with tons of random reads, then you’re going to see really high latencies.

Response: Was any application testing performed, or is this just an assumption? We collected workload data from an existing customer site (Oracle AWR/Statspack and iostat) and agreed with the customer on the I/O profile. We then simulated similar database transactions with Swingbench; during the simulation the system reached 199,000 IOPS at 1546 MB/s (without SSD). If you like, I can send you the benchmark results.

Another proof point is the Microsoft Exchange 2010 ESRP (Exchange Solution Reviewed Program) submission results: XIV Gen 3 scales to 120,000 mailboxes at 88% mailbox capacity utilization, whereas the nearest competitor, EMC V-Max, reaches 100,000 mailboxes at 45% mailbox capacity utilization with 880 drives in the V-Max system. You can review all the systems tested at http://technet.microsoft.com/en-us/exchange/ff182054

– SSC used this ESRP. What IBM fails to tell you is that this (1st storage configuration qualified by MS) used TWO storage (clustered) arrays…. that’s right, TWO arrays to qualify this for e2k10.

@Jon – all vendors submit an ESRP design that provides site resiliency – TWO arrays, as you put it. The submission has to show site resiliency – therefore has to show the full scale (all mailboxes with provided IOps per mailbox) will work on one site only, which is ONE array.

If you can take the time to check out the other submissions – EMC, HP, Hitachi etc – you will see they did the same.

As the VP of a Fortune 500 company I bought into the XIV story. IBM did everything possible to get XIV on our data center floor, including giving us the first unit for free and bringing Moshe to meet our management team. At first we were thrilled with the performance. What is lost in all of these performance comparisons is that most of the fantastic performance variances come from comparing a brand new XIV against an old storage array. I’d sure hope it is much faster.

About a year and 5 arrays into the XIV experience it all started to fall apart. We experienced countless module failures, several of which required the entire array to come down for repair. Numerous software bugs caused IO to stop. And worst of all, we experienced the double disk failure that took out the entire array. Despite IBM saying over and over again that this has never happened in the field, it is all a lie. It most certainly has happened in the field and not just to 1 or 2 customers. It is far more prevalent than they would lead you to believe. What made my double disk failure catastrophic was the compounding effect of having the XIV virtualized behind an IBM SAN Volume Controller. When even a couple gigs of data is lost in a virtualized pool, the entire pool is corrupted. This took nearly 24 hrs to recover.

Be very leery of XIV and SVC. And what’s more, they aren’t cheap. Sure, when compared to a VMAX or VSP they are less expensive, but that isn’t a fair comparison. When compared to a VNX, NetApp or HDS HUS they are much more expensive.

I’m an ex-developer for XIV and while XIV had its fair share of disk handling problems it had improved. I had a hand in that improvement and cared about these places. I have since left for new challenges but know that things have definitely improved in the system failures front after the release of the appropriate fixes.

I have no knowledge on SVC to comment on it.

This is a nice writeup.

I have a query: is there a way to identify whether an XIV volume is thin or thick? Are volumes thin by default when created?

Vk, strikes me as odd that an EMC’er would ask StorageMojo about XIV. Really?

Hi,

I inherited an IBM XIV. It was working, and then 2 disks failed. The disks have been replaced, but I cannot activate them. I need the technician password.

Please tell me the technician password!