As the economics of data storage push more and more data onto disks, the energy efficiency of data storage is ever more critical. Storage is anti-entropic, so keeping bits organized requires energy. How can we minimize that energy input?

Data cooling is the major reason disk drives have remained a viable storage strategy for 50 years. The IOPS/MB has dropped steadily for decades, yet disks remain the preferred tool outside of very low latency or high-bandwidth applications.

Looking forward to massive scale-out storage infrastructures, the data will get even cooler. Copan’s MAID architecture, which turns disks off when not in use, is a rational extension of the cool data concept.

As data continues to cool we will eventually see millions of disk drives – along with tapes – sitting idle. But even if we have cold archive disks, one of disk’s big advantages over tape is the ease with which data can be spread over multiple drives for data protection.

Not RAID 5

You can’t count on any one hard drive actually restarting after a few months or years of idle time. Nor can you expect that any specific sector will be readable. Cold data requires even more advanced – energy efficient, disaster-tolerant – storage techniques than RAID arrays offer today.

Oh, and they need to be cheap too. Which means RAID arrays won’t get this business. What about open source software?

Erasure coding

In A Performance Evaluation and Examination of Open-Source Erasure Coding Libraries For Storage (pdf) James S. Plank, Jianqiang Luo, Catherine D. Schuman, Lihao Xu, and Zooko Wilcox-O’Hearn examine 5 open source implementations of 5 different erasure codes: Reed-Solomon, Cauchy Reed-Solomon, Even-Odd, Row Diagonal Parity and Minimal Density RAID 6 codes.

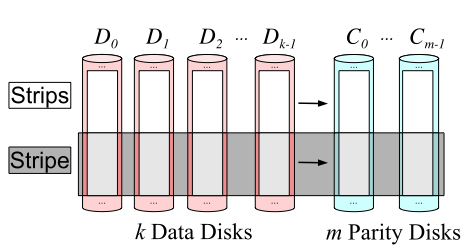

Typical storage system with erasure coding – figure from the paper

Several companies – including Cleversafe, NetApp and Panasas – use erasure codes today to ensure higher data availability. What Plank et al. wanted to know is how well these codes work and what system designers need to know to use them effectively.
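To make the idea concrete, here is a minimal sketch of the simplest possible erasure code: one XOR parity block over k data blocks, which survives the loss of any single block. It is not one of the five codes in the paper and does not use any of the libraries tested; the function names (`xor_blocks`, `encode`, `recover`) are made up for illustration.

```python
from functools import reduce

def xor_blocks(blocks):
    """XOR equal-length byte blocks together, column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))

def encode(data_blocks):
    """Return the k data blocks followed by one XOR parity block."""
    return list(data_blocks) + [xor_blocks(data_blocks)]

def recover(stripe, lost_index):
    """Rebuild the single block at lost_index by XORing all survivors."""
    survivors = [block for i, block in enumerate(stripe) if i != lost_index]
    return xor_blocks(survivors)

# Three data "disks" plus one parity "disk" (hypothetical contents).
data = [b"cold", b"data", b"disk"]
stripe = encode(data)

# Pretend disk 1 failed to spin up, then rebuild it from the other three.
rebuilt = recover(stripe, 1)
```

The codes in the paper generalize this: instead of one parity block they compute m coding blocks, so that any m lost blocks out of k + m can be rebuilt.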

The OSS implementations tested are:

- Luby, a C version of CRS.

- Zfec, a highly tuned Reed-Solomon library.

- Jerasure, a GNU LGPL C library that includes RS, CRS and 3 MDR6 among others.

- Cleversafe released an open source version of their dispersed storage system, from which the authors used just the erasure coding parts.

- EVENODD/RDP, patented codes not available to the public and included for performance comparison.

Most important result

The study found that while tuning boosts performance and some architectures are much faster than others:

“Given the speeds of current disks, the libraries explored here perform at rates that are easily fast enough to build high performance, reliable storage systems.”

Translation: this isn’t string theory.

Other findings include:

- The RAID 6 codes out-perform the general purpose codes.

- For non-RAID 6 codes, the Cauchy Reed-Solomon performs much better than straight RS

- CPU architectural features, such as cache size and memory behavior, make it hard to predict an optimal data structure for a given code configuration.

- The code’s memory and cache footprint can have a large impact on performance.

- Specialized RAID 6 codes hold promise for creating efficient storage that can withstand numerous concurrent disk failures.

- Multicore performance issues are largely unexplored.

The StorageMojo take

The architect for a planned commercial 200 PB cold-storage infrastructure confessed that he can see how to get to 25 PB today, but not beyond. Yet they have no choice but to start building now.

This market’s eventual structure may parallel that of today’s tape silo market: several hundred large customers who are continuously churning through rolling upgrades of media and servers.

Right now, tape silos still enjoy an economic advantage over disks. But it looks like disks have more degrees of freedom to improve their cold storage economics than tape.

In just 5 years the first exabyte cold storage systems will be on the drawing boards. It is time for disk companies to get serious about a tape-replacing archival disk. And for clever startups to focus on this emerging market.

Courteous comments welcome, of course.

Bit rot (which implies disk scrubbing) rules out leaving any stored data on a completely cold disk for ages.

Hi Robin,

I can only speak for Nexsan (though I think other vendors would agree) in saying that deep disk archives should be periodically powered up and surface scanned every 2 to 6 weeks. During this process, the spinning disk activates the drive filtration systems to help remove any outgassed material that’s condensed on the media, we re-map and repair and report any grown defects, and of course dead drives will cause a rebuild on a spare. With RAID-6 you have a very high confidence that defects can be repaired with no loss of data. Our AutoMAID technology does all of the above automatically (though I don’t want to exaggerate the brilliance of this as RAID controllers have been routinely surface scanning disk packs for at least 10 years that I know about — the only new twist is that we might have to wake up the array to do it). We wake each array up one at a time, do the scan, and let it go back to sleep. The added power consumption is very minimal in a deep archive scenario as typically an array only needs to spin for an hour or two per month.
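As a rough check on the "hour or two per month" figure above, the sketch below works out the fraction of time an array would spend spinning. The 730-hour average month and the function name `duty_cycle` are assumptions for illustration; actual power savings also depend on idle draw and spin-up energy, which are not modeled here.

```python
# Average month length in hours: 365 days * 24 hours / 12 months = 730.
HOURS_PER_MONTH = 365 * 24 / 12

def duty_cycle(spin_hours_per_month):
    """Fraction of the month the array spends spinning for its scrub."""
    return spin_hours_per_month / HOURS_PER_MONTH

low = duty_cycle(1)   # one hour of scanning per month
high = duty_cycle(2)  # two hours of scanning per month

print(f"{low:.2%} to {high:.2%}")  # prints "0.14% to 0.27%"
```

Under these assumptions the array is spinning well under one percent of the time.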

Anyway, this is the advantage that cold disk has over cold tape — it is very labor intensive (and risky to the media) to do a similar kind of scan of offline tapes, especially if not in a library slot, and in most cases there is no concept of RAID-6 on tape which would allow the system to periodically repair minor defects that appear over time. The best you can do is wholesale replacement of tapes or failing that, receive an inventory report that tells you how many tapes you have lost this month.

Five years from now, will SSDs be the answer here? They solve the power problem. They get cheaper over time according to Moore’s law, quicker than spinning disks under Kryder’s law for the past few years. For reliability solid state has to win over electro-mechanical eventually, if it doesn’t already. And if the data is really cold, you don’t have to worry about maximum write cycles for a very long time.

I realize people think of them as being at the other end of the storage spectrum, but they have several attributes that make them fit here. What do you think?

Natmaka – bit rot has multiple causes, some of which relate to use, not time, such as when a track of static data is next to a track of often re-written data and write slop weakens the field on the static data.

In the medium term, if Heat Assisted Magnetic Recording (HAMR) gets productized, that will reduce bit rot.

Gary, I agree that disks have many advantages over tape. Today’s disks have to do all that stuff because they weren’t designed for archiving – but they could be. There is a huge home archive market and IT would eventually warm to the idea – especially if erasure codes made the loss of multiple drives a non-issue.

Charlie, most flash drives go into applications where disks don’t compete, such as the below $50 consumer space or the high-performance IT space. It will be many years before flash competes on a $/TB basis – even with the power advantage.

Robin

@Charlie Dellacona

I’m not sure that SSDs do solve the problem of holding what is largely archive and nearline store for long periods. It’s not just semiconductors that benefit from a form of Moore’s law; so does magnetic storage. With both magnetic and semi-conductor storage, capacity goes up with the square of improvements in linear density. The huge cost advantage that magnetic media has over semiconductor is that it is vastly cheaper to put a uniform coating on a substrate than to run the complex, multi-stage lithographic and doping processes which are used on semi-conductors. The basic materials are cheaper on magnetic media too – which might be plastic film, aluminium or ceramic versus very expensively grown pure silicon.

To overcome this disadvantage, the semi-conductor route has to come up with storage densities much higher than can be achieved with magnetic media. Fujitsu have announced magnetic recording technology with a 25nm pitch. Samsung have announced NAND flash using 30nm fabrication. To get the same cost per GB with SSDs, the semiconductor side would have to move on far faster than disks, even when things like MLC are used.
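The square-law point can be sketched numerically. The snippet below assumes areal density scales with the inverse square of feature pitch, and it treats the 25nm magnetic pitch and the 30nm flash process figure quoted above as directly comparable, which is only an illustration; `areal_density_gain` is a made-up helper name.

```python
def areal_density_gain(old_pitch_nm, new_pitch_nm):
    """Areal density ratio when feature pitch shrinks from old to new.

    Assumes density is proportional to 1 / pitch**2, i.e. the square of
    the improvement in linear density.
    """
    linear_gain = old_pitch_nm / new_pitch_nm
    return linear_gain ** 2

# A 30nm feature size versus a 25nm pitch: 1.2x linear, about 1.44x areal.
gain = areal_density_gain(30, 25)
print(f"{gain:.2f}x")  # prints "1.44x"
```

On that simplified basis the two technologies sit at similar feature densities, so flash has no density lead with which to offset its higher manufacturing cost.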

This feature density issue also points out the fundamental problem with optical media. The feature density cannot be greater than that dictated by the wavelength of light used. There are some major technical problems in getting suitable cheap optics to work down in the far ultraviolet region needed to get near what is possible with magnetic media, not to mention issues regarding the optical media itself. Only by exploiting 3D recording would it appear that optical media can compete.

Of course it might be that SSDs get “big enough” to solve the nearline/archive problem, but I think that is only true if volumes of data stop following the exponential path of the storage medium itself. With consumer level devices capable of HD video (and no doubt that will be supplanted by yet higher formats), the trend will continue for some time yet.

I do fully expect SSDs to effectively kill HDDs for transactional I/O. The fundamental problem with HDDs is that the IOPS per GB figure is dropping like a stone as capacities increase and that latencies have barely improved at all over the past 5 years. It is already the case that on a cost per IOP basis, enterprise SSDs are way ahead of rotating magnetic disk (and possibly even on GB/s), as they are on power used per IOP. Those distances will get even bigger in favour of SSD.

But for nearline/archive storage – I think that SSDs are way off the mark.

Of course something might come along (like dataslide) or a completely new storage system which changes all the rules. But for now we are seeing a shuffling of the boundaries, with semiconductor storage gradually displacing HDDs at the high I/O density level and HDDs supplanting tape at the archive/nearline level. For other than removable storage, optical is going nowhere and it is conceivable that flash will take that market.

Charlie,

I agree with Robin, with a twist. “It will be many years before flash competes on a $/TB basis”. Here’s the twist. I predict at least 5 years, and that’s an eternity in tech development time. During the next five years, the magnetic media vendors will be working density improvements like crazy. There are improvements in the lab that would permit increases as much as 1000X for magnetic media. While these lab improvements are too much like science to save the high speed (10K, 15K) segment in the near term, within 5 years they could change everything. Hence, do not count, at all or ever, on FlashSSD’s taking the bulk storage crown.

Joe.

I found the Pergamum paper much more interesting (although more radical) for archival storage.

I like the Pergamum approach too. And I also like the Cleversafe approach. I suspect they’ll each find a place.

RAID solved lots of disk storage problems, but it remains hard to configure, maintain and scale. Internet file systems like Google’s prove that RAID is no longer necessary and that the same reliability can be achieved using cheap disks. The future is Internet-like clustered file systems such as Sun Celeste http://www.opensolaris.org/os/project/celeste/

MogileFS http://www.danga.com/mogilefs/

and more to come if not already.

Magnetic disk R&D is going down from what I can see. Hitachi and others never fully recovered from several disk technologies (1 and 2 in) and capacity increase failure rates. Millions of dollars were lost here. I am starting to see slower capacity increases on magnetic disks over the years. We used to see a 2x increase per year. Now it is starting to be every 18 months or so.

SSDs should definitely kill magnetic disks. The market will drive this, not technology. With millions of small devices, the R&D will go into those products and not into the hundreds of thousands of enterprise-grade disks shipped per year. More competition will also drive this market. There are more SSD vendors than magnetic disk vendors. Simple maths should work in favor of SSDs.

Tape capacity should double or more next year (from 800GB to a few TB). HP, IBM and Sun should come out with their new devices by mid next year. As storage capacity increases, media cost will be the key here, not the devices themselves. Do not forget media or disk migration costs.

Just to entertain the community,

1) following our paper, we published another DSN 2009 paper on how to practically increase encoding performance of popular RAID-6 codes:

http://nisl.wayne.edu/Papers/Tech/dsn-2009.pdf

2) a while ago ( 2005 ), we published a RAID-7 code, which we call STAR code at FAST 2005:

http://nisl.wayne.edu/Papers/Tech/star.pdf

so more choices are available. And coming soon, we will publish another paper on how to use the STAR code to tolerate both a single (whole) disk failure and silent disk errors at the same time.

Enjoy.