The 4 TB Sun F5100 Flash Array product launch is imminent. Much talked up by Andy Bechtolsheim, the 1U array promises 1 million IOPS, 10 GB/sec throughput, 64 SAS channels, redundant power & cooling, and SES management.

Beyond the raw performance, the F5100 is notable for 2 technical advances.

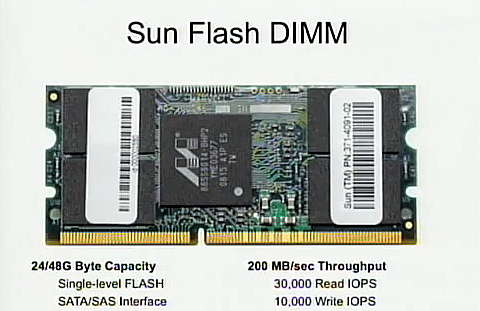

- The Sun flash DIMM modules (FMods), which appear to support 64 GB (or is it only 48 GB?) of SLC flash in a notebook SO-DIMM card form factor.

- A set of 4 super-capacitors that maintain power for the FMod’s on-board DRAM write buffers and I/O interfaces if mains power is lost – an innovative enterprise feature. The cool feature: a 10 minute charge time.

FMod

Here’s a picture of an FMod prototype from a presentation Andy gave several months ago.

The F5100 is spec’d with 80 FMods, which means 50 GB per. Are they really 64 GB and over-configured or is the spec very optimistic?
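The arithmetic behind that question can be sketched in a few lines. This is only a back-of-the-envelope check, assuming decimal units (4 TB = 4000 GB) and treating the 48 GB and 64 GB module sizes as the two guesses above; the function names are made up for illustration.

```python
# Back-of-the-envelope check of the FMod capacity question.
# Assumptions: decimal units (4 TB = 4000 GB); 48 GB and 64 GB are
# the two guessed module sizes, not confirmed specs.
ADVERTISED_GB = 4000
FMOD_COUNT = 80


def per_module_gb(total_gb, modules):
    """Advertised capacity divided evenly across the modules."""
    return total_gb / modules


def raw_and_spare(module_gb, modules=FMOD_COUNT, advertised_gb=ADVERTISED_GB):
    """Return (raw GB, GB beyond the advertised capacity, spare fraction of raw)."""
    raw = module_gb * modules
    spare = raw - advertised_gb
    return raw, spare, spare / raw


if __name__ == "__main__":
    print("GB per FMod implied by the spec:", per_module_gb(ADVERTISED_GB, FMOD_COUNT))
    for size in (48, 64):
        raw, spare, frac = raw_and_spare(size)
        print(f"{size} GB modules: raw {raw} GB, spare {spare} GB ({frac:.1%})")
```

If the modules are 64 GB, 80 of them hold more raw flash than the advertised 4 TB, leaving roughly a fifth in reserve. If they are 48 GB, 80 of them fall short of 4 TB, which would make the spec optimistic.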

Also, what looks like an SO-DIMM is accessed as a disk. Would you give up one of your notebook DIMM slots to get 48 or 64 GB of very fast disk?

Issues

No v.1 product is perfect and the F5100 is no exception. There is no multipath access. Each FMod group attaches to one host through a single SAS link.

There is no cascading of F5100s. Each has to connect directly to a host SAS HBA.

Each FMod is a specific physical address. If one is replaced “Applications and utilities that depend on the old device path will need to be reconfigured to work with the new one.” Curious.

To maximize performance do not configure more than 20 FMods per HBA.
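As a rough sketch of what that guideline implies for a fully populated array: the 80 FMods, 1 million IOPS and 10 GB/sec figures come from the spec above, while the even split across HBAs is my own simplifying assumption and the function names are hypothetical.

```python
import math

# Figures quoted in the post; the even split across HBAs is an assumption.
FMOD_COUNT = 80
MAX_FMODS_PER_HBA = 20
ARRAY_IOPS = 1_000_000
ARRAY_GB_PER_SEC = 10


def hbas_needed(fmods, max_per_hba):
    """Smallest number of HBAs that keeps every HBA at or under the limit."""
    return math.ceil(fmods / max_per_hba)


def per_hba_share(total, hbas):
    """Load each HBA carries if the array's total is spread evenly."""
    return total / hbas


if __name__ == "__main__":
    n = hbas_needed(FMOD_COUNT, MAX_FMODS_PER_HBA)
    print("HBAs for a full array:", n)
    print("IOPS per HBA:", per_hba_share(ARRAY_IOPS, n))
    print("GB/sec per HBA:", per_hba_share(ARRAY_GB_PER_SEC, n))
```

Under those assumptions a full array needs four HBAs, each asked to carry about a quarter of the headline numbers.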

Software support

The usual suspects: Solaris 10 5/08 (x64/SPARC) or later, plus patches

Microsoft Windows 2003 32-bit (R2 SP2) & 64-bit (SP2), Windows 2008 64-bit (SP1), Windows 2008 64-bit (SP2)

RHEL 4 U5 32/64-bit, RHEL 5 64-bit, RHEL 4 U6 32/64-bit, RHEL 5 U2 32/64-bit, RHEL 5 U1 32/64-bit

SUSE 10 32/64-bit SP1, SUSE 10 SP2 32/64-bit, SUSE 9 SP3 32/64-bit, SUSE 9 SP4 32/64-bit, SUSE 10 SP1 64-bit

There’s a required patch for Windows 2003.

Only 2 SAS HBAs, both from Sun, are supported on the F5100.

The StorageMojo take

No pricing or delivery as yet, so it isn’t officially announced AFAIK. Given the SLC capacity and current prices I’d guess that it will retail in the $80-$90k range.

The more interesting question is how Oracle will integrate this into their database server strategy. If we’re lucky Kevin Closson or someone just as knowledgeable will weigh in with a survey of the possibilities.

Kudos to the Sun engineers who have been driving flash forward faster than almost anyone else in the industry.

Courteous comments welcome, of course. How would you use this box?

Here’s a link to 17 MB of F5100 documentation. Update 9-04: Looks like Sun has pulled all the F5100 docs down. Maybe the announcement isn’t as imminent as I’d thought. End update.

If you’re late to the flash party, here’s an accessible YouTube video of Andy talking about flash and Sun’s strategy several months ago. There’s a lot more about flash here on StorageMojo.

Just think about 4 TB ultrafast swap at a small system 😉

Hi Robin,

Sorry, I can’t comment about futures… there will be trench coat clad thugs showing up to whack me if I do.

One point I’d like to make: this is UNDOUBTEDLY aimed at a back-end for the 7000 series platform. That will completely negate any issues with swapping out an FMOD.

We have a latency averse application for which this would be the perfect fit.

A single transaction can generate 10,000 IO on a 2 TB working set.

This would bring response time from 25s down to 2s. Nice.
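To put those numbers in per-IO terms, here is a quick sketch. It assumes the 10,000 IOs are issued one after another and that IO wait accounts for the whole response time, which the comment does not actually say; the function name is made up for illustration.

```python
# Per-IO latency implied by the response times above.
# Assumption: the IOs are fully serialized and IO wait dominates.
IO_COUNT = 10_000


def per_io_latency_ms(response_seconds, io_count=IO_COUNT):
    """Average time per IO in milliseconds for a serialized transaction."""
    return response_seconds * 1000 / io_count


if __name__ == "__main__":
    print("Today:", per_io_latency_ms(25), "ms per IO")
    print("With flash:", per_io_latency_ms(2), "ms per IO")
```

Under that assumption the transaction goes from about 2.5 ms per IO to about 0.2 ms per IO.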

Kevin, it’s worse than that. Those guys in the trench coats will actually be lawyers.

Outsider,

Tell me about it. I am an Oracle employee with a blog.

The link to the documentation is broken (http://dlc.sun.com/pdf/820-5874-10/820-5874-10.pdf). Has it been pulled by Sun or something?

Robin:

I am not a Sun engineer, but judging from what I know, it is probably not going to be released till Feb 2010. (I could be wrong on this.) And here is the reason why:

1. It is obvious this is the perfect L2ARC cache device for the 7410 server. Anyone who uses this as a RAID10 storage instead of L2ARC is wasting his money. But currently L2ARC isn’t persistent, and takes about a day to warm up just the 6x100GB stec SSD in there, so 4TB L2ARC warm up will be horrible. I don’t know why, but I suspect that L2ARC persistence patch would have to go into OpenSolaris before they will release it for the 7410.

2. Finding a good set of 4x HBAs to drive this thing would be a challenge. I think they merged the LSI SAS 6Gbps drivers into the NV_118 build, and the LSI 2108 ROC chip is specified to deliver 200K IOPS, which is far more appropriate than LSI 1068E/1078 based adapters. I think the 7410 right now is IO bound by the 1068Es for the Stec 100GB.

3. Taking 1+2 into consideration, they will probably release OpenSolaris 2010.02 into production before F5100.

Of course, the “regular” folks like myself will have to find alternatives with Dell/HP gear. I think from HP Side, you can use 8x MSA50s filled with X25-Es driven by 8x P411 adapters to get the same performance as the F5100. Or from Dell side 4x MD1120s with X25-Es driven by 8x SAS6E HBAs.

@TS: You are wrong about this … the software of the S7000 has a different release schedule than OpenSolaris 20xx.yy ….

“Sun Storage 7000 2009.Q2.5.0 Update”. I would assume that the Q stands for Quarter 😉 … and a feature doesn’t have to be in OpenSolaris at first. Thus your assumption about the release date is wrong. Just as a thought: there wouldn’t be the docs at docs.sun.com for a system to be released somewhere next year…

@Joerg:

I already said I could be wrong about this. Everything is speculation at this time.

Although Storage 7000’s updates are named with “Q2” in the name, it doesn’t mean it will be updated every quarter. And we all know that the 7000 series uses OpenSolaris as the base OS, so I doubt it will release something out of ON Gate without officially releasing an OpenSolaris version first. In fact, the Q2 update was released at the end of April, so we already missed the quarterly update time frame for a 2009Q3 update.

This is the way I look at it from an outsider’s speculative position. If Sun wants to rush the F5100 out, that is fine. I was merely speculating that if Sun wants the most performance out of the F5100, they would bundle the LSI MPT2.0 driver that was merged into the SNV_118 build. Therefore, from a risk mitigation perspective, they should wait for an official OpenSolaris 2010.02 release before releasing 7000 series updates, which are based on OpenSolaris. That way, you get SAS 2.0 bandwidth. (The F5100 should have an LSI SAS 2.0 expander engineered into it, because anything less would be a less optimal solution from an aggregate bandwidth perspective.) And if they did wait till Feb of 2010 to release a major update, it would give them ample time to do L2ARC persistence, and possibly ZFS dedup in there as well.

Feb 2010 is only, what, 5 months away. This is all speculation and my personal opinion. Don’t take it too seriously. Of course, if you have inside scoop, keep us updated.

@TS: Yes … I have inside scoop, yes … you would know why if you follow the link to my blog, no … I won’t write about the inside scoop … neither here nor in my blog.

If you consider the throttle points of the FMODs and the SAS HBAs, then it rapidly becomes apparent that the “limitation” of not daisy-chaining F5100 trays is actually there to help you and make sure that you can actually achieve the claimed throughput.

I do work for Sun. So no comment on unannounced product from me. What I can share is that the industry’s biggest problem with the concept of FLASH based SSD aggregation is:

a) Queue depths not being large enough, and

b) The HBAs available were never designed to drive high enough IOPS

If one were to take, say, 20 Intel SSDs and gang them together in some sort of array configuration, we would need an aggregate queue depth of roughly 200 IOs to drive the stripe. The aggregated pool can do 35K X 20, so we would need a 700K IOPS capable HBA (all this is at the 4K IO size). But there is NO HBA that can do this today! This is a REAL problem. Our multi-core/multi-socket CPU server designs are more than capable of digesting that much IO. Database servers would LOVE to be able to do this. The problem is that with today’s technology I am forced to use 4-7 HBAs to pull this off. Not good slot economy.

Whether one builds an array of off the shelf Intel SSDs or Sun FMods, the HBAs are the impediment to realizing the performance an aggregated SSD storage array is capable of.

So if any of the SAS HBA vendors are reading this: PLEASE develop an HBA that is capable of driving lots of SSDs without losing device count. That would help the industry move forward on SSD/FLASH adoption in enterprise IT shops.
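The 20-SSD example above reduces to a few lines of arithmetic. This is a minimal sketch using the figures from the comment (20 devices, 35K IOPS each at 4K, aggregate queue depth of about 200). The per-HBA IOPS ceilings of 100K and 175K are hypothetical values picked only because they reproduce the quoted 4-7 HBA range, and the function names are made up for illustration.

```python
import math

# Figures from the comment above.
SSD_COUNT = 20
IOPS_PER_SSD = 35_000
AGGREGATE_QUEUE_DEPTH = 200

# Hypothetical per-HBA IOPS ceilings, chosen only to match "4-7 HBAs".
ASSUMED_HBA_IOPS = (175_000, 100_000)


def aggregate_iops(devices, iops_per_device):
    """IOPS the striped pool could deliver if nothing upstream throttles it."""
    return devices * iops_per_device


def queue_depth_per_device(total_depth, devices):
    """Outstanding IOs each device sees when the queue is spread evenly."""
    return total_depth / devices


def hbas_required(target_iops, hba_iops):
    """Smallest number of HBAs whose combined ceiling covers the target."""
    return math.ceil(target_iops / hba_iops)


if __name__ == "__main__":
    pool = aggregate_iops(SSD_COUNT, IOPS_PER_SSD)
    print("Pool IOPS:", pool)
    print("Queue depth per SSD:", queue_depth_per_device(AGGREGATE_QUEUE_DEPTH, SSD_COUNT))
    for ceiling in ASSUMED_HBA_IOPS:
        print(f"HBAs needed at {ceiling} IOPS each:", hbas_required(pool, ceiling))
```

The slot economy complaint falls straight out of the last function: until a single HBA's ceiling approaches the pool's 700K IOPS, the only way to cover the gap is more cards.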