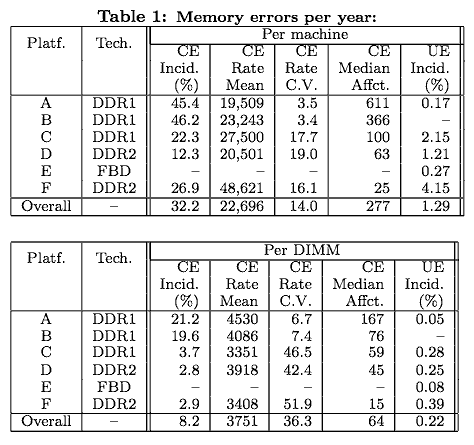

A 2½-year study of DRAM on tens of thousands of Google servers found that DIMM error rates are hundreds to thousands of times higher than thought: a mean of 3,751 correctable errors per DIMM per year. Another piece of hallowed Conventional Wisdom bites the dust.

Google and Prof. Bianca Schroeder teamed up on the world’s first large-scale study of RAM errors in the field. They looked at multiple vendors, DRAM densities and DRAM types including DDR1, DDR2 and FB-DIMM.

Every system architect and motherboard designer should read it. And I agree with James Hamilton’s suspicion that even clients need ECC – at least heavily used clients.

If you can’t trust DRAM . . .

Here are some hard numbers from DRAM Errors in the Wild: A Large-Scale Field Study by Bianca Schroeder, U of Toronto, and Eduardo Pinheiro and Wolf-Dietrich Weber, Google.

What you don’t know can hurt you

Most DIMMs don’t include ECC because it costs more. Without ECC the system doesn’t know a memory error has occurred.

Which is part of the reason people aren’t more concerned. Ignorance is bliss.

Everything is fine until a memory error means a missed memory reference or a flipped bit in file metadata being written to disk. What you see is a “file not found” or a “file not readable” message, silent data corruption – or even a system crash. And nothing that says “memory error.”

Conventional Wisdom

The industry take on DRAM is summed up in a quote from an old AnandTech FAQ that took the industry at its word:

Everyone can agree that hard errors are fairly rare. . . . For the frequency of soft errors. . . . IBM stated . . . that at sea level, a soft error event occurs once per month of constant use in a 128MB PC100 SDRAM module. Micron has stated that it is closer to once per six months . . . .

An even bigger surprise: it appears that hard errors, not soft errors, are the dominant error mode – the reverse of the conventional wisdom. This conclusion isn’t solid – the study’s data set didn’t distinguish between hard and soft errors – but the circumstantial evidence is suggestive. There may be another study coming that uses error address data to distinguish hard and soft errors.

Issues

The paper has a few issues that make it difficult to understand. One issue is the use of the chip industry’s Failure In Time (FIT) metric.

One FIT = one failure per billion hours per Mbit.

Confused? Me too. Taken at face value, FIT suggests that a 2 GB DIMM – 16,000 Mbit – has 16x the errors of a 128 MB DIMM.

But that isn’t what the study found: higher density DRAM doesn’t have more errors per DIMM. The FIT metric is most useful for comparing with earlier studies.
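To make the FIT arithmetic concrete, here is a minimal sketch that converts the vendor quotes above into FIT per Mbit and then scales them by capacity. The function names are made up for illustration, and the 1 GB DIMM size used for the study's mean is an assumption, since the article does not give a per-DIMM capacity.

```python
HOURS_PER_YEAR = 24 * 365  # 8,760 hours

def fit_per_mbit(errors, hours, capacity_mbit):
    """Errors per billion device-hours per Mbit (the FIT definition used above)."""
    return errors / hours * 1e9 / capacity_mbit

def expected_errors_per_year(fit, capacity_mbit):
    """What a per-Mbit FIT rate predicts for one module over a year."""
    return fit * capacity_mbit * HOURS_PER_YEAR / 1e9

MBIT_128MB = 128 * 8        # 1,024 Mbit
MBIT_2GB = 2 * 1024 * 8     # 16,384 Mbit

# Vendor quotes from the AnandTech FAQ: one soft error per month (IBM)
# or per six months (Micron) on a 128 MB module.
ibm = fit_per_mbit(1, HOURS_PER_YEAR / 12, MBIT_128MB)
micron = fit_per_mbit(1, HOURS_PER_YEAR / 2, MBIT_128MB)

# Taken at face value, a per-Mbit rate scales linearly with capacity.
ratio = (expected_errors_per_year(ibm, MBIT_2GB)
         / expected_errors_per_year(ibm, MBIT_128MB))

# The study's mean of 3,751 correctable errors per DIMM per year,
# on an ASSUMED 1 GB (8,192 Mbit) DIMM.
study = fit_per_mbit(3751, HOURS_PER_YEAR, 8 * 1024)

print(f"IBM quote:    {ibm:,.0f} FIT/Mbit")
print(f"Micron quote: {micron:,.0f} FIT/Mbit")
print(f"2 GB vs 128 MB at a constant FIT: {ratio:.0f}x the errors")
print(f"Study mean on an assumed 1 GB DIMM: {study:,.0f} FIT/Mbit")
```

The 16x ratio is purely a consequence of the per-Mbit normalization, which is why the metric misleads when errors do not actually grow with density.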

Good news

The study had some good news:

- Temperature plays little role in errors – just as Google found with disk drives – so heroic cooling isn’t necessary. Good news for data center air economizer architectures.

- Density isn’t a problem. The latest, most dense generations of DRAM perform as well, error wise, as previous generations.

- Heavily used systems have more errors.

- No significant differences between vendors or DIMM types (DDR1, DDR2 or FB-DIMM). You can buy on price – at least for ECC DIMMs.

- Only 8% of DIMMs had errors per year on average. Fewer DIMMs = fewer error problems – good news for users of smaller systems. Bad news for large-memory servers running in-memory databases.

Bad news

Besides error rates much higher than expected – which is plenty bad – the study found that error rates depended on the motherboard, not on DIMM type or vendor. Some popular mobos must have poor EMI hygiene.

Route memory traces too close to noisy components or skimp on grounding layers and you get instant error problems. Design or manufacturing problems in motherboards? The study did not do a root cause analysis.

Hardware failures are much more common as well and may be the most common type of memory failure. Google replaces all DIMMs with hard errors – as do most data centers – as a matter of policy.

The server error reporting could not always differentiate between hard and soft errors. Hard errors are discovered through memory tests run on off-line servers.

Other interesting findings

For all platforms they found that 20% of the machines with errors make up more than 90% of all observed errors on that platform. There be lemons out there!

In more than 93% of the cases a machine that sees a correctable error experiences at least one more in the same year. They don’t get better by themselves.

High quality error correction codes are effective in reducing uncorrectable errors. There are “chip-kill” DIMM/mobo combinations that can detect and correct 4 bit errors, but few vendors offer those. Kingston and Corsair don’t.

Besides costing more, ECC DIMMs are about 3-5% slower than unprotected DIMMs. Few of us would ever notice that small a performance hit, but gamers might care.

HPC users might care too, for a different reason. James Hamilton noted a talk by Kathy Yelick – she doesn’t keep her web site updated – where she found that ECC recovery times are substantial and the correction latency slows the computation.

The StorageMojo take

You’d think that after several decades of semiconductor DRAM usage this study would be old news. I did.

Like most folks I accepted industry assurances that DRAM is reliable. My main machine – a Mac Pro with an Intel server-class mobo – has FB-DIMMs whose 5-watt-per-DIMM overhead has irritated me. But when I found one DIMM reporting errors recently I felt better about it.

I suspect this is another example of the industry’s code of omerta. System vendors have scads of data on disk drives, DRAM, network adapters, OS and filesystem based on mortality and tech support calls, but do they share this with the consuming public? Nothing to see here folks, just move along.

Kudos to Google for doing the long-term research required for substantive results and then sharing those results with the rest of us. I expect ECC systems will become a lot more popular in the years ahead.

Courteous comments welcome, of course. Note: Much of this was published on ZDNet Sunday night. This version is updated after speaking to Prof. Schroeder Wednesday. This version also dispenses with some consumer-oriented content.

Hanlon’s razor – do not ascribe to malice what can be better explained by incompetence (or in this case ignorance). I doubt DRAM and DIMM manufacturers have hundreds of thousands of DIMMs running for years the way Google does. They probably just run hundreds of early samples in the lab, then use statistical process control, but certainly not with the sample sizes Google can attain.

What about the mostly non-ECC RAM in locations besides the motherboard? What about onboard disk caches, disk controller caches, RAID caches, SAN subsystems, Ethernet boards, FC boards, NAS boxes, network switches, …?

Maybe some of the high error rates reported in earlier studies of disk bit rot were due to faulty RAM in the path from main memory to disk rust and back.

Even if you put ECC RAM in those boxes, how do you report an error? If you did report an error, is anything listening? Most operating systems and file systems almost silently ignore disk errors anyway (except ZFS).

The implications of this study go way beyond motherboard RAM, and affect just about every device with more than $10 of parts inside.

Lemons indeed! You really have to go out of your way to notice correctable ECC errors, so you could have defective DIMMs and not know it. What a windfall for the manufacturers – increased yield thanks to error correction.

I’d have to partially agree with Fazal: there is no explicit omerta, just human nature. From personal experience at a semi, troubleshooting a customer EMI mobo problem, once the panic is over and a 15-cent high-quality cap is grudgingly added to the BOM, nobody has time to collect statistics. The entire engineering team is now several weeks behind (they were understaffed to begin with), middle management doesn’t want to look bad, and sales doesn’t want to bad-mouth their customer, all of which would happen if a systematic collection of failures were undertaken. I will note that certain customers who had control of most of the stack (software and hardware), and thus couldn’t pass the buck, did engage in Google-style data collection. They were a pain in the heiny. They were rolling in dough from their patents and cornering a broad chunk of the market, but they still engaged in cut-throat negotiations with their obsessively collected data. They also had a habit of demanding changes in our operations to bring the numbers up even a tick, even if it didn’t really make sense. Given those two extremes, I would say that most of the time the data doesn’t exist at the level of detail needed to make changes, and if anything, most of the industry is incentivized to muddy the water (note that spinning the data isn’t the same as omerta).

Google has taken the high road here by releasing full data and not naming names. And in the long term improved quality will float all boats, including Google’s. Anyway, it’s not clear that names would help: compare the HP DL385 G1 vs. the HP DL380 G6 (first AMD vs. first Intel mobo with an on-die memory controller) in terms of memory errors.

I don’t know about others, but my experience has shown me far fewer failures from memory errors on HP systems than on other boxes. I have attributed it mostly to their Advanced ECC technology, which has been out for more than 10 years now. It really seems pretty neat:

http://www.krogmartensen.dk/filer/memory.pdf

Some of their newer systems seem to include multiple types of DRAM scrubbing as well:

http://h20000.www2.hp.com/bc/docs/support/SupportManual/c00218059/c00218059.pdf

Dell recently announced something called “Advanced ECC” in their 11th gen systems, though in my brief searches, and even after asking Dell directly, I haven’t found much info on what it does. I have found that the technology does disable certain memory slots for some reason, and if you load up on DIMMs you have to turn it off to be able to access all of your memory. It doesn’t mention sparing or mirroring so I don’t think that is what is happening.

Google has a reputation for being cheap & scalable, so I believe they probably don’t use the highest grade gear and thus are more impacted by memory errors. They can recover better since there is more redundancy, but it just depends on the scale you’re operating at.

I have a few HP DL585G1s (really old) running VMware, and they fairly routinely report memory errors. For a while we tried tracing it down, replacing DIMMs and even boards, but nothing would make the errors stop. So we gave up, and are just letting the boxes run until we retire them next year. There have been no crashes or failures as a result, just periodic entries in the system log saying the error threshold was exceeded. There was a BIOS update released a couple of years ago that fixed an issue which caused the system to hang/crash/reboot when that threshold was reached. I suspect the errors would go away if we had HP “certified” memory; they opted for cheaper Kingston stuff at the time (I wasn’t at the company at the time, and prefer Crucial myself).

For white box systems at least, I have found memory to be the #1 cause of system problems, even ECC RAM. I find it funny that people often knock hardware RAID cards, saying that if one fails you need another identical one. I can count the number of hardware RAID HBA failures over roughly 700-800 systems on one hand. I gave up trying to keep track of memory errors/failures resulting in system crashes/failures over the years. As recently as June we got 3 new HP DL165G5p systems, and all three were plagued with memory issues. They don’t have the fancy memory technology that the DL3xx and DL5xx have, but they wouldn’t run for more than a few minutes without rebooting, and they could never complete the HP diagnostics. Fortunately the BIOS logged all of the events and after much pain HP just came out and replaced them. No issues since.

The need for ECC memory is one of those dirty little secrets. It’s becoming well known that bad/dying memory is responsible for vicious little errors that are hard to track down. I thought I had seen some pie chart showing that they were a significant factor in Windows XP crashes but a quick search has yielded nothing. The best I come up with is that MS would have liked more machines to be shipped with ECC memory for Vista – http://www.tgdaily.com/content/view/24190/135/ .

Back in 1996 our company developed error correcting memory modules (SIMMs were used at that time). Unlike regular ECC which corrects single bit errors and detects double errors our memory corrected up to 12 bits. Also, all the magic was done by the memory module itself without any support from the motherboard. There is more info at http://www.divo.com/page4.html.
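For readers who have not seen how "corrects single bit errors and detects double errors" works, here is a toy sketch using an extended Hamming code on 4 data bits. It only illustrates the principle: real ECC DIMMs protect 64 data bits with 8 check bits, and nothing here reflects the 12-bit-correcting design described in this comment. All names are illustrative.

```python
DATA_POSITIONS = (3, 5, 6, 7)   # Hamming positions that carry data bits
PARITY_POSITIONS = (1, 2, 4)    # power-of-two positions carry check bits

def _syndrome(bits):
    """XOR of the positions (1..7) whose bit is set; 0 for a valid codeword."""
    s = 0
    for pos in range(1, 8):
        if bits[pos]:
            s ^= pos
    return s

def encode(nibble):
    """Encode 4 data bits into 8 bits; index 0 holds the overall parity bit."""
    bits = [0] * 8
    for i, pos in enumerate(DATA_POSITIONS):
        bits[pos] = (nibble >> i) & 1
    s = _syndrome(bits)
    for p in PARITY_POSITIONS:
        if s & p:
            bits[p] = 1          # set check bits so the syndrome becomes 0
    bits[0] = sum(bits[1:]) % 2  # make the total number of 1s even
    return bits

def decode(bits):
    """Return (nibble, status); nibble is None when the word is uncorrectable."""
    bits = list(bits)
    s = _syndrome(bits)
    odd_parity = sum(bits) % 2 == 1
    if s == 0 and not odd_parity:
        status = "ok"
    elif odd_parity:
        bits[s] ^= 1             # single error; s == 0 means the parity bit itself
        status = "corrected"
    else:
        return None, "uncorrectable"  # even parity, nonzero syndrome: two flips
    nibble = sum(bits[pos] << i for i, pos in enumerate(DATA_POSITIONS))
    return nibble, status

word = encode(0b1011)
word[6] ^= 1                     # one flipped bit is repaired
print(decode(word))
word[2] ^= 1                     # a second flip is detected, not repaired
print(decode(word))
```

Without the check bits, neither flip would be noticed at all, which is the situation on a non-ECC DIMM.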

We built prototypes and tried to get memory manufacturers interested in our beautiful product 🙂 Kingston got very interested for a while, but eventually decided that it would be too hard to convince consumers to buy 2-3 times more expensive memory to get this very hypothetical benefit of a more dependable computer. We think that reliable memory is a must for all mission critical applications, but who would listen to an engineer …

Symmetrix has used ECC-protected DRAM for years, and it actively tracks and reports back to EMC even the corrected errors. But ECC alone is not trusted as sufficient protection: within Symmetrix DRAM, data blocks are striped across multiple chips with additional check bytes (Hamming codes, iirc, not simple CRC) capable of detecting and correcting multi-bit errors. Together, these form a belt-and-suspenders approach to protecting data integrity in memory.

Additionally, Symmetrix protects data with verified guard bytes all along the I/O path, from the front-end ports all the way through to drives, and back again – integrity is verified and the protection bytes regenerated every time data moves across different “domains” in the I/O path.

Beginning with V-Max, we take this one step further: an (8-byte) T10-DIF guard is generated for each 512-bytes of data upon receipt from the server, and this is carried through all the way to be stored on the disk along with the data; these check bytes can be used not only to validate the integrity of the block(s) read from disk, but also to ensure that the drives in fact returned the actual block(s) requested (another infrequent, but real, error that can occur within a disk or flash drive is for it silently to return the wrong block – simple CRC will not detect this).
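As a rough illustration of why an 8-byte guard catches both corrupted blocks and wrong blocks, here is a minimal sketch. It assumes the commonly described T10 DIF layout (a 2-byte CRC guard, a 2-byte application tag and a 4-byte reference tag derived from the block address) and the CRC-16 polynomial 0x8BB7. The function names are hypothetical, and this says nothing about how Symmetrix actually implements it.

```python
import struct

def crc16_t10dif(data: bytes) -> int:
    """Bitwise CRC-16 with polynomial 0x8BB7, initial value 0, no reflection."""
    crc = 0
    for byte in data:
        crc ^= byte << 8
        for _ in range(8):
            if crc & 0x8000:
                crc = ((crc << 1) ^ 0x8BB7) & 0xFFFF
            else:
                crc = (crc << 1) & 0xFFFF
    return crc

def make_dif(block: bytes, lba: int, app_tag: int = 0) -> bytes:
    """Build the 8-byte tuple stored alongside a 512-byte block (assumed layout)."""
    if len(block) != 512:
        raise ValueError("expected a 512-byte block")
    return struct.pack(">HHI", crc16_t10dif(block), app_tag, lba & 0xFFFFFFFF)

def verify(block: bytes, dif: bytes, expected_lba: int) -> str:
    """Check the data against its guard, then check it is the block we asked for."""
    guard, _app_tag, ref_tag = struct.unpack(">HHI", dif)
    if guard != crc16_t10dif(block):
        return "guard mismatch"      # the data changed somewhere along the path
    if ref_tag != (expected_lba & 0xFFFFFFFF):
        return "wrong block"         # intact data, but from a different address
    return "ok"

block = bytes(range(256)) * 2
dif = make_dif(block, lba=1000)
print(verify(block, dif, expected_lba=1000))
print(verify(block, dif, expected_lba=1001))
```

A checksum over the data alone would pass the second check, because a wrong block returned intact still matches its own CRC. The reference tag is what ties the data to its address.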

As I said, Symmetrix frequently identifies (and corrects) bit errors in memory, on disk, and during transfers – we sometimes joke that your data integrity is assured once it gets into the array, but only so long as it stays there. If the same precautions are not taken along the entire I/O path, there is a higher risk of silent corruption outside the array than there is within.

Robin, you reported a few years back on CERN’s experiences with silent data corruption, and on the realization that not all arrays actively validate that the data they read from disk is what was actually written. Likewise, Google’s revelation that unprotected DRAM can also lead to silent data corruption should not come as a surprise – at least, not to those of us who are in the business of protecting customer data.

FPGAs, ASICS and even CPU engines are other places where data integrity can be exposed to silent corruption if the proper precautions are not taken, but I’ll leave that discussion for another day.

Barry,

How about the DRAM in network adapters and such? Embedded (sub)system DRAM is vulnerable too. Thoughts?

Robin

Robin,

You are absolutely correct – there are many places that are susceptible (sic?) to data corruption. Most communications and I/O protocols generally provide adequate protection, however – there are CRCs and verification in almost every layer of FCAL, SCSI, IP, TCP, UDP, iSCSI, FCOE, DCE, WiFi, (ad infinitum).

But if shortcuts are taken in the implementation, particularly where data must cross from one domain (or layer) to another, SEUs and indeed cosmic particles can (and often do) corrupt data.

Most of these get detected and the protocol forces a retry/retransmit, so nothing bad happens. Many of today’s SSDs are in fact not really considered enterprise-class by the array vendors because of unprotected data paths and incomplete integrity checks as data moves from the I/O controller through FPGA/ASIC into DRAM and eventually down to the NAND cells.

But indeed, the risks are just about everywhere, and it is often difficult to identify them without analysing logic diagrams and the actual FPGA/ASIC programs. In some places, the issue isn’t worth worrying about (you probably will never notice a bit flip in an MP3 song or a JPG image). But were the bit flip to result in a 32,768 increase in someone’s weekly pay, somebody probably isn’t going to be very happy.

And where accuracy matters, the memory and compute overhead required for error detection and correction is worth every penny.
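The 32,768 figure is simply what one flipped bit does at bit position 15 (2^15). A minimal sketch, with a made-up pay amount, shows the effect and how even a single parity bit would flag it:

```python
def flip_bit(value: int, bit: int) -> int:
    """Simulate a single-bit memory error by inverting one bit."""
    return value ^ (1 << bit)

def parity(value: int) -> int:
    """Even/odd parity of the set bits: the simplest form of error detection."""
    return bin(value).count("1") % 2

weekly_pay = 1200                  # hypothetical value; bit 15 is 0
stored_parity = parity(weekly_pay)

corrupted = flip_bit(weekly_pay, 15)
print(corrupted - weekly_pay)      # the size of the jump caused by one bit

# A parity check detects the flip but cannot say which bit changed.
print(parity(corrupted) != stored_parity)
```

Parity only detects an odd number of flipped bits and cannot repair anything, which is why ECC memory uses stronger codes.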

Robin,

We couldn’t agree more with your blog on this subject! There are quite a few studies out there highlighting these types of issues. CERN did a nice study a few years back that you blogged about (CERN’s data corruption research, http://storagemojo.com/2007/09/19/cerns-data-corruption-research/), as well as some interesting work from Carnegie Mellon on disk reliability (http://www.cs.cmu.edu/~bianca/fast07.pdf), and building highly reliable systems (e.g. Understanding Failures in Petascale Computers, http://www.cs.cmu.edu/~garth/papers/jpconf7_78_012022.pdf).

Emulex has been focused on this problem for a while now, and has worked closely with Oracle to enhance the reliability of data in enterprise data centers. This has been through our work with the T10 Protection Information (AKA DIF) standard, building reliability into the storage systems, and also driving the same capability into the server systems, through the ongoing work being done in the SNIA Data Integrity Technical Work Group (DITWG). We’ve also published a white paper (http://www.emulex.com/solutions/oracle/reliability-and-new-protection-against-silent-corruption.html), along with Oracle, on this subject. Emulex has built a corruption calculator (http://www.emulex.com/files/solutions/oracle/calculator.html) that helps to put into perspective the cost of Silent Data Corruption (SDC). In fact, Emulex just conducted a non-scientific survey of attendees at Oracle OpenWorld in San Francisco about SDC. Of the technical respondents (DBAs, Systems Analysts, IT Managers), over 70% responded that they had experienced unexplained database outages due to corruption. These outages lasted anywhere from a few minutes to a few days, with an average outage of more than eleven hours. These same respondents rated the importance of a solution that addresses this issue at 8 out of 10, which means that even the 30% who hadn’t experienced an outage felt the availability of a solution was important.

One thing the article talks about is soft error rate (SER). This has been around and studied for quite a while, but always in the realm of memory. What most people don’t realize is that SER is now starting to affect flip flops and combinational logic in the smaller geometry ASICs. We now have to be aware that SDC can hit us in places that we typically wouldn’t think to protect and weren’t aware of as being an issue, including the control path! The work we’ve been doing in this area attempts to address the integrity of data from the time it is created via an application to the time it is put to rest on a storage platform. It seems from our industry discussions that everyone believes there are real issues here, but no one really wants to air their dirty laundry, which at the end of the day equates to some serious money being left on the table. The goal of the work is to flag and address the corruption when it occurs, not to find out about it months later when a backup is restored with the corrupted data, and life then really begins to suck.

David Crespi

Allow me to ask a silly question…

A lot of ZFS evangelists preach ZFS superiority and ECC RAM adoption. I will not challenge any of those comments, but:

Suppose a user creates an über ZFS-based NAS using ECC RAM, decent hard drives, etc., intending to offer the storage to a typical desktop using iSCSI. (The workstation does not use ECC.)

My understanding is that, since the storage would be provisioned as a LUN to the desktop, the lack of ECC on the desktop and the possibility of file (system) corruption due to the memory errors would continue to exist despite the fact that the übber ZFS NAS uses ECC. (Is the chain broken?)

Seems to me that several evangelists have been preaching the ECC “must haviness” without realising that ECC should not make much difference when the NAS disks are accessed using iSCSI to a non ECC initiator.

Do you agree?

This is why I wish there were more desktop motherboards with an ECC option. My current motherboard is an ASUS P5W-DH which has ECC, but I have yet to find a Core i7 mainboard with support even though the newest Intel chip sets provide it.

I also had a manufacturer tell me once that their “supports ECC” meant that you could put ECC DIMMs in their board and they would use them just like non-ECC DIMMs. As if it was a parts sourcing issue and not a data reliability issue. Sigh.

–Chuck

Chuck, that is a scary and very broad definition of “supports.” A word to the wise. . . .

Robin

@Chuck,

Core i7 has the memory controller integrated on the processor and the Core i7 controller does not provide ECC. For ECC you need to use Xeon W3500. You need to be looking for workstation motherboards, not PC motherboards. Look at the Asus P6 WS models and Supermicro C7X58 which accept both Core i7 and Xeon W3500 processors and support ECC with a Xeon.

Hi,

Beware of Intel motherboards. Their website claims ECC support on the DX58SO2 motherboard (which supports up to 48GB RAM), but in fact the ECC function is NOT implemented in the BIOS: dmidecode shows that the ECC memory has a 64-bit instead of a 72-bit width and shows Error Correction Type: None. We contacted them and they said: “You have a Xeon CPU with ECC RAM and you can boot your system, so ECC is working, if ECC was not working the system wouldn’t boot and you couldn’t even access the BIOS”. All we asked for was a BIOS update, but they thought they would fool us by saying it’s working because we tell you it’s there and working… the cowards!
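The dmidecode check described above can be sketched as a small parser. This is only a rough heuristic: it assumes the usual field names in `dmidecode -t memory` output ("Error Correction Type", "Total Width", "Data Width") and works on text you have already captured, since running dmidecode needs root. The function name and the sample text are illustrative, not taken from the board in question.

```python
import re

def ecc_looks_active(dmidecode_text: str) -> bool:
    """Heuristic: the BIOS reports an ECC type and DIMMs are wider than their data."""
    ec_types = [t.strip() for t in
                re.findall(r"Error Correction Type:\s*(.+)", dmidecode_text)]
    reports_ecc = any(t not in ("None", "Unknown") for t in ec_types)

    # ECC DIMMs normally show a 72-bit total width against a 64-bit data width.
    widths = re.findall(
        r"Total Width:\s*(\d+) bits\s+Data Width:\s*(\d+) bits", dmidecode_text)
    has_check_bits = any(int(total) > int(data) for total, data in widths)

    return reports_ecc and has_check_bits

# Illustrative excerpt resembling what the comment describes.
sample = """
Physical Memory Array
\tError Correction Type: None
Memory Device
\tTotal Width: 64 bits
\tData Width: 64 bits
"""
print(ecc_looks_active(sample))
```

A board that merely boots with ECC DIMMs installed would fail this check, which is exactly the distinction the vendor's answer glossed over.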

Look at this thread to see how Intel customers are being SCAMMED:

http://communities.intel.com/thread/21216?start=0&tstart=0

My advice is: Never ever buy an Intel motherboard!

Regards,

WantsECConDX58SO2.

Funny that anyone has the time to spend on correctable errors. Maybe they are the people that bought cheap servers and run LINUX on them. You know who you are Google…

If you choose a decent server, the DIMMs are separated from noisy components and have ECC error checking. One issue that was not brought up here is refresh rate. With the speed at which new processors move data, the memory is hard pressed to keep the data refreshed and also provide read/write access at nearly the same time.

Cheap mobos tend to have issues clocking read, write and refresh without a few ECC errors, if they have that capability. Finally you get the stardust excuse, which I in fact have seen. Alpha particles, which can penetrate nearly anything, can bombard a system (or systems) and cause tens of thousands of errors in seconds.

One systems maker took a large enterprise class machine into a lab and filled it full of DIMMs with a hard failed bit on every DRAM. The machine ran for over a year and collected billions of single-bit correctable memory errors, but never went down and never corrupted any data.

Finally, while temperature doesn’t affect DRAMs much, it can affect the memory buffer chip that addresses the DIMM. Many of the cheapest of these chips use cut rate phosphor as an insulator, which will definitely break down in a warm environment.

The bottom line here is just like anything else you get what you pay for. Buy Enterprise class equipment you get long running stability. Buy cheap LINUX servers you get the instability that goes along with the LOW price. You choose!