Virtual machines (VMs) solve the problem of running many tiny servers on one big server. VMs are a logical outgrowth of Moore’s Law: server CPUs grew bigger and faster than the apps required. And Windows Server didn’t handle multiple apps well.

But the growth of 100-megawatt Internet-scale data centers has architects rethinking efficiency-at-scale. As James Hamilton put it in his presentation Internet-Scale Service Infrastructure Efficiency (pdf):

Single dimensional performance measurements are not interesting at scale unless balanced against cost

Therefore: work done per $; per joule; and per rack.

Microslice server

Because CPU performance has grown so much faster than storage performance – disk and DRAM – over the last 30 years, powerful multicore CPUs spend much of their time idling. The microslice server idea: build servers from slower, cheaper and much more power-efficient CPUs.

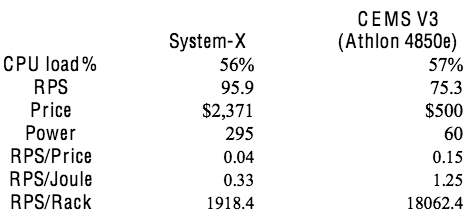

Amazon has done just that. On a per-rack basis, a microslice prototype jointly developed with SGI – formerly Rackable – using a lower-power Athlon 4850e CPU handled over 9x the requests per second (RPS) of conventional servers.

And each server cost just $500, used 1/5th the power and provided about 70% of the performance (RPS) of a much costlier conventional server. Higher density – something like 6 servers per rack unit – provided the rack-level performance.
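To see how those per-server figures turn into Hamilton's work-per-joule and work-per-dollar metrics, here is a rough sketch in Python. The 70% performance, 1/5th power and $500 figures come from the text above; the function names are made up for illustration, and the conventional server's price is left as a parameter because the post doesn't give it.

```python
def relative_gain(perf_ratio: float, resource_ratio: float) -> float:
    """Work per unit of resource, relative to the baseline server.

    perf_ratio:     candidate performance / baseline performance
    resource_ratio: candidate resource use / baseline resource use
    """
    if resource_ratio <= 0:
        raise ValueError("resource_ratio must be positive")
    return perf_ratio / resource_ratio


# Figures quoted in the post for the microslice server.
PERF_RATIO = 0.70        # about 70% of the conventional server's RPS
POWER_RATIO = 1 / 5      # 1/5th the power
MICROSLICE_PRICE = 500.0 # USD

# RPS per watt is requests per joule, so this is the work-per-joule gain.
rps_per_joule_gain = relative_gain(PERF_RATIO, POWER_RATIO)


def rps_per_dollar_gain(baseline_price_usd: float) -> float:
    """Work-per-dollar gain for an ASSUMED conventional server price."""
    return relative_gain(PERF_RATIO, MICROSLICE_PRICE / baseline_price_usd)
```

On those numbers the microslice server does roughly 3.5x the work per joule. The per-dollar gain depends entirely on what you plug in for the conventional server's price, so treat any result there as a what-if rather than a measurement.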

Disk Workload from Hell

At October’s 22nd ACM Symposium on Operating Systems Principles (SOSP), David G. Andersen, Jason Franklin, Amar Phanishayee, Lawrence Tan and Vijay Vasudevan (all from Carnegie Mellon University) and Michael Kaminsky (Intel Research Pittsburgh) presented FAWN: A Fast Array of Wimpy Nodes, a Best Paper award winner.

FAWN’s goal: maximizing queries per Joule in a high performance key-value storage system. Key-value stores are seeing increasing use in Internet-scale systems – the key is a unique identifier for the associated value.

The paper explains:

The workloads these systems support share several characteristics: they are I/O, not computation, intensive, requiring random access over large datasets; they are massively parallel, with thousands of concurrent, mostly-independent operations; their high load requires large clusters to support them; and the size of objects stored is typically small, e.g., 1 KB values for thumbnail images, 100s of bytes for wall posts, twitter messages, etc.

The paper describes both the hardware – which uses 500 MHz embedded processors, 256 MB DRAM and 4 GB CF flash – and the software – a log-structured per-node datastore that optimizes flash performance. The net/net: FAWN is over 6x more efficient – on queries per second – than conventional systems.

At 1/5th the cost. And 1/8th the power.
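For readers who haven't met a log-structured key-value store, here is a minimal sketch of the general idea in Python. This is not FAWN's code: the class name `LogStore`, the record layout and the in-memory dict index are assumptions for illustration only. Every write is appended to the end of a file, and a small in-memory index remembers where the latest copy of each key lives.

```python
import os
import struct


class LogStore:
    """Toy append-only key-value store (illustrative, not FAWN's design)."""

    # Assumed record layout: 4-byte key length, 4-byte value length,
    # then the key bytes, then the value bytes.
    HEADER = struct.Struct("<II")

    def __init__(self, path: str):
        self.path = path
        self.index = {}  # key -> byte offset of its most recent record
        open(path, "ab").close()  # create the log file if it is missing
        self._rebuild_index()

    def _rebuild_index(self) -> None:
        # Scan the log once; later records for a key replace earlier ones.
        with open(self.path, "rb") as f:
            while True:
                offset = f.tell()
                header = f.read(self.HEADER.size)
                if len(header) < self.HEADER.size:
                    break
                klen, vlen = self.HEADER.unpack(header)
                key = f.read(klen)
                f.seek(vlen, os.SEEK_CUR)  # skip the value bytes
                self.index[key] = offset

    def put(self, key: bytes, value: bytes) -> None:
        # Writes only ever go to the end of the log.
        with open(self.path, "ab") as f:
            f.seek(0, os.SEEK_END)
            offset = f.tell()
            f.write(self.HEADER.pack(len(key), len(value)) + key + value)
        self.index[key] = offset

    def get(self, key: bytes):
        offset = self.index.get(key)
        if offset is None:
            return None
        with open(self.path, "rb") as f:
            f.seek(offset)
            klen, vlen = self.HEADER.unpack(f.read(self.HEADER.size))
            f.seek(klen, os.SEEK_CUR)  # skip the key bytes
            return f.read(vlen)
```

The point of the design is that updates become sequential appends, which flash handles much better than small random in-place writes, while a read costs one index lookup plus one seek. A real system also has to reclaim the space held by stale records, which this sketch leaves out.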

The StorageMojo take

This is more important than it looks. The Internet guys are optimizing for power, something most businesses ignore. But the low cost of these nodes, and their performance, are attractive to everyone else.

Back in the day, DEC sold a lot of 3-node DSSI VAXclusters. Why? They were cheap(er) and if you lost a node you still had 2/3rds of your system.

In 2010 I expect to see low-end, cluster-based storage systems that offer multi-node resilience at low cost. Not just purchase price either, but service costs as well. A node went down? We’ll overnight you a new one.

The low-end is about to get a lot more interesting.

Courteous comments welcome, of course. The other SOSP best paper is fascinating too: RouteBricks: Exploiting Parallelism to Scale Software Routers. I hope I have time to post on it.

And BTW, Intel is also showing a microslice proto.

This reminds me of the Sun Fire B1600 strategy, circa 2004. And to some degree, this is the idea behind Sun’s Niagara processor line. Lots of wimpy processors in a small space using little power. Sun offered two blades which used x86 processors (AMD Mobile Athlon or Intel low power Xeon) and supported Linux. Even if such systems were dirt cheap, it is not clear that they could garner any market share — people like Ferraris better than they like bicycles. What would change this perception in the market?

Storage clustering is a really attractive idea in theory, but somehow it doesn’t work out on the low end. Expensive clustering software always cancels out the savings from scale-out hardware. Of course, software pricing is somewhat arbitrary so it could change overnight.

Richard, to change perceptions of such systems they need to present obvious benefits that standard systems don’t. And they probably can’t be sold through a direct sales force either.

Wes, who says the software has to be expensive? Think of a micro-cluster as an appliance with bundled software in an entry-level 3 node cluster. Make more money selling add-on nodes.

Robin