Megastore handles over 3 billion writes and 20 billion reads daily on almost 8 PB of primary data across many global data centers.

In a paper by Jason Baker, Chris Bond, James C. Corbett, JJ Furman, Andrey Khorlin, James Larson, Jean-Michel Léon, Yawei Li, Alexander Lloyd, Vadim Yushprakh titled Megastore: Providing Scalable, Highly Available Storage for Interactive Services Google engineers describe how it works. From the abstract:

Megastore is a storage system developed to meet the requirements of today’s interactive online services. Megastore blends the scalability of a NoSQL data store with the convenience of a traditional RDBMS in a novel way, and provides both strong consistency guarantees and high-availability. We provide fully serializable ACID semantics within fine-grained partitions of data. This partitioning allows us to synchronously replicate each write across a wide area network with reasonable latency and support seamless failover between data centers.

The mission

Support Internet apps such as Google’s AppEngine.

- Scale to millions of users

- Responsive despite Internet latencies to impatient users

- Easy for developers

- Fault resilience from drive failures to data center loss and everything in between

- Low-latency synchronous replication to distant sites

The how

Scale by partitioning the data store and replicating each partition separately, providing full ACID semantics within partitions but limited consistency guarantees across them. Offer some traditional database features if they scale with tolerable latency.

The key assumptions are that data for many apps can be partitioned, for example by user, and that a selected set of DB features can make developers productive.

Availability and scale

To achieve availability and global scale the designers implemented two key architectural features:

- For availability, an asynchronous log replicator optimized for long-distance

- For scale, data partitioned into small databases each with its own replicated log

Rather than implement a master/slave or optimistic replication strategy, the team decided to use Paxos, a consensus algorithm that does not require a master, with a novel extension. A single Paxos log would soon become a bottleneck with millions of users so each partition gets its own replicated Paxos log.

Data is partitioned into entity groups which are synchronously replicated over a wide area while the data itself is stored in NoSQL storage. ACID transaction records within the entities are replicated using Paxos.

For transactions across entities, the synchronous replication requirement is relaxed and an asynchronous message queue is used. Thus it’s key that entity group boundaries reflect application usage and user expectations.

Entities

An e-mail account is a natural entity. But defining other entities is more complex.

Geographic data lacks natural granularity. For example, the globe is divided into non-overlapping entities. Changes across these geographic entities use (expensive) two-phase commits.

The design problem: entities large enough to make two-phase commits uncommon but small enough to keep transaction rates low.

Each entity has a root table and may have child tables. Each child table has a single root table. Example: a user’s root table may have each of the user’s photo collections as a child. Most applications find natural entity group boundaries.

API

The insight driving the API is that the big win is scalable performance rather than a rich query language. Thus a focus on controlling physical locality and hierarchical layouts.

For example, joins are implemented in application code. Queries specify scans or lookups against particular tables and indexes. Therefore, the application needs to understand the data schema to perform well.

Replication

Megastore uses Paxos to manage synchronous replication. But in order to make Paxos practical despite high latencies the team developed some optimizations:

- Fast reads. Current reads are usually from local replicas since most writes succeed on all replicas.

- Fast writes. Since most apps repeatedly write from the same region, the initial writer is granted priority for further replica writes. Using local replicas and reducing write contention for distant replicas minimizes latency.

- Replica types. In addition to full replicas Megastore has 2 other replica types:

-

witness replicas. Witnesses vote in Paxos rounds and store the write-ahead log but do not store entity data or indexes to keep storage costs low. They are also tiebreakers when isn’t a quorum.

Read-only replicas are the inverse: nonvoting replicas that contain full snapshots of the data. Their data may be slightly stale but they help disseminate the data over a wide area without slowing writes.

Architecture

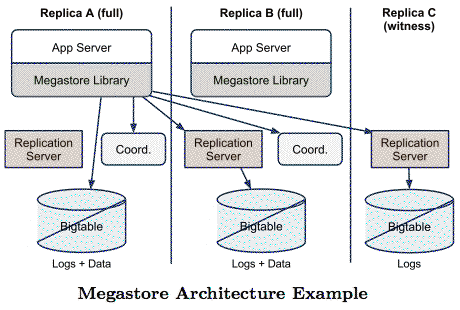

What does Megastore look like in practice? Here’s an example.

A Megastore client library is installed on the app server. It implements Paxos and other algorithms such as read replica selection. The app server has a local replica written to a local BigTable instance.

A coordinator server tracks a set of entity groups and observes all Paxos writes. The coordinator is simpler than BigTable and serves local reads.

Concurrent with writing local data to BigTable and the coordinator the Megastore library is also writing to a second full replica: a replication server and a second coordinator. The stateless replication servers handle the writes to the remote big table while the lower latency coordinator handles any reads from the remote replica.

Failures may leave writes abandoned or in an uncertain state. The replication servers scan for incomplete writes and offer no op values via Paxos to complete the.

Availability

As coordinator servers do most local reads their availability is critical to maintaining Megastore’s performance. The coordinators use an out-of-band protocol to track other coordinators and use Google’s Chubby distributed lock service to obtain remote locks. If the coordinator loses a majority of its locks it will consider all entities in its purview to be out of date until the locks are regained and the coordinator is current.

There are a variety of network and race conditions that can affect coordinator availability. The team believes the simplicity of the coordinator architecture and their light network traffic makes the availability risks acceptable.

Performance

Because Megastore is geographically distributed, application servers in different locations may initiate writes to the same end entity group simultaneously. Only one of them will succeed and the other writers will have to retry.

Limiting writes to a few per second per entity group makes contention insignificant, e-mail for example.

For multiuser applications with higher write requirements developers can shard entity groups more finely or batch user operations into fewer transactions. Fine-grained advisory locks and sequencing transactions are other techniques to handle higher write loads.

The real world

Megastores been deployed for several years and more than 100 production applications using today. The paper provides these figures on availability and average latencies.

The high availability of the system architecture creates a nice-to-have problem: small transient errors on top of persistent uncorrected problems can cause much larger problems.

Fault tolerance makes finding underlying faults more difficult. The price of fault tolerance is eternal vigilance.

As the architecture diagram suggests Megastore doesn’t manage BigTable. Developers must optimize the storage for their app.

The StorageMojo take

As Brewer’s CAP theorem showed, a distributed system can’t provide consistency, availability and partition tolerance to all nodes at the same time. But this paper shows that by making smart choices we can get darn close as far as human users are concerned.

If Microsoft Office – or an open-source analog – could plug into a productized version of Megastore this could become popular for private cloud implementations: LAN performance in the office and global availability on the road. What’s not to like?

But whether that happens or not, the paper demonstrates again the value of Internet scale infrastructure thinking. Enterprise vendors would never have developed Megastore, but now that we’ve seen it work we can begin applying its principles to smaller scale problems.

Courteous comments welcome, of course. If this overview intrigues I urge you to read the entire paper as there are some interesting pieces I’ve left out.

Trackbacks/Pingbacks