I was surprised by the number of questions at last week’s webinar – many more than we could get to – so I’m answering a few here.

Performance

Q: Can Robin talk about performance, and how does flash help solve the I/O bottleneck?

NAND flash is very good at random reads: a good SSD can handle thousands per second, compared with 150-500 for a high-end disk drive. That’s one reason arrays are popular: many heads seeking in parallel deliver higher random I/O performance than any single drive can. But it’s also why capacity utilization is so low: to get enough I/Os per second you buy more spindles, and therefore more capacity, than most applications use.

So not only are you buying multiples of the most expensive disks, but then you only use a fraction of their capacity. This is why flash SSDs are predicted to kill the high-end drives even though SSDs cost much more per GB: a single SSD can eliminate 6-10 hard drives. That is a major cost saving.
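To make the arithmetic concrete, here is a small Python sketch that sizes a configuration by both IOPS and capacity, then reports cost and capacity utilization. The drive figures and prices are made up for illustration (the 300 IOPS disk sits inside the 150-500 range above), and `DriveModel` and `size_config` are hypothetical helper names; swap in your own numbers.

```python
from dataclasses import dataclass


@dataclass
class DriveModel:
    name: str
    iops: int         # random I/Os per second one drive can sustain
    capacity_gb: int
    price_usd: int    # hypothetical price, for illustration only


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division."""
    return -(-a // b)


def size_config(drive: DriveModel, target_iops: int, data_gb: int) -> dict:
    """Drives needed to meet BOTH the IOPS target and the capacity target."""
    count = max(ceil_div(target_iops, drive.iops), ceil_div(data_gb, drive.capacity_gb))
    raw_gb = count * drive.capacity_gb
    return {
        "drives": count,
        "cost_usd": count * drive.price_usd,
        "utilization": data_gb / raw_gb,  # fraction of purchased capacity actually used
    }


# Hypothetical drives and workload: 3,000 random IOPS over 200 GB of data.
hdd = DriveModel("15K RPM disk", iops=300, capacity_gb=300, price_usd=300)
ssd = DriveModel("MLC SSD", iops=5_000, capacity_gb=256, price_usd=700)

for d in (hdd, ssd):
    cfg = size_config(d, target_iops=3_000, data_gb=200)
    print(f"{d.name}: {cfg['drives']} drives, ${cfg['cost_usd']}, "
          f"{cfg['utilization']:.0%} of capacity used")
```

With these made-up numbers the SSD costs about 2.7 times as much per GB as the disk, yet one SSD meets an IOPS target that takes ten disks, and it uses most of its capacity instead of under 10%.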

Q: So the low cost assumes that the cache is read-only, otherwise it needs to be RAID? That comes off as misleading.

While flash SSDs are much better at random reads than at random writes, they still beat several high-end disks at writes.

Since most workloads are 80%-95% reads, an SSD that can handle 1,000 random writes per second can support a lot of work. Disks are still the most cost-effective choice for large sequential workloads, because their sequential performance is close to that of SSDs and they are much cheaper per GB.
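Here is one way to see why a modest write rate goes a long way. This sketch assumes a simple model in which reads and writes share the device's time, so the sustainable mixed rate is the harmonic combination of the read and write rates. Only the 1,000 writes/s comes from the text; the 10,000 reads/s figure is hypothetical.

```python
def max_mixed_iops(read_iops: float, write_iops: float, read_pct: float) -> float:
    """Total I/Os per second a device can sustain at a given read percentage.

    Assumed model: each read costs 1/read_iops seconds of device time and each
    write costs 1/write_iops, so the mix is limited by their harmonic combination.
    Real SSDs have more complex read/write interference.
    """
    write_pct = 100 - read_pct
    seconds_per_100_ios = read_pct / read_iops + write_pct / write_iops
    return 100 / seconds_per_100_ios


# Hypothetical SSD: 10,000 random reads/s; 1,000 random writes/s (from the text).
for read_pct in (80, 90, 95):
    print(f"{read_pct}% reads: {max_mixed_iops(10_000, 1_000, read_pct):,.0f} IOPS")
```

Under this model the hypothetical SSD sustains roughly 3,600 mixed IOPS at 80% reads and about 6,900 at 95% reads, against a few hundred for a single high-end disk.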

Reliability

Q: What are Robin’s thoughts on the reliability of SSDs? We have seen failure rates of over 10% on drives less than two months old.

Flash SSD reliability today is all over the map. As flash SSD technology matures, I’d expect drive reliability to converge. Five years ago, disk reliability was fairly similar across vendors, with the glaring exception of Maxtor.

That said, it’s useful to recognize that there’s a lot more design and sourcing variability in SSDs than in disks. A vendor that uses the cheapest parts (and there are plenty available) can offer good specs but highly variable reliability.

If they leave out too much redundancy, they’ll have a cost advantage but will be more vulnerable to chip and plane failures. The market will eventually settle on similar specs for each application, but we’re years away from that.
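A rough way to see what leaving out redundancy costs: assume each flash die fails independently with some small probability over a given period, and compare a layout with no parity to one that adds a single parity die and can survive one failure. The failure probability and die counts below are hypothetical, and real chip and plane failures are not fully independent, so treat this as an illustration of the trade-off rather than a reliability estimate.

```python
from math import comb


def p_data_loss(n_dies: int, p_fail: float, tolerated: int) -> float:
    """Probability that more than `tolerated` of `n_dies` fail.

    Assumes each die fails independently with probability p_fail over the
    period of interest (a simplifying, hypothetical assumption).
    """
    p_survive = sum(
        comb(n_dies, k) * p_fail**k * (1 - p_fail) ** (n_dies - k)
        for k in range(tolerated + 1)
    )
    return 1 - p_survive


p = 0.01  # hypothetical per-die failure probability
no_redundancy = p_data_loss(8, p, tolerated=0)  # 8 data dies, no parity
one_parity = p_data_loss(9, p, tolerated=1)     # 8 data dies + 1 parity die
print(f"no parity: {no_redundancy:.4f}, one parity die: {one_parity:.4f}")
```

With these assumptions the unprotected layout loses data about 7.7% of the time versus about 0.34% with one parity die, at the cost of one extra die.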

Q: You mentioned that failures can begin after 10,000 writes. What is that in years?

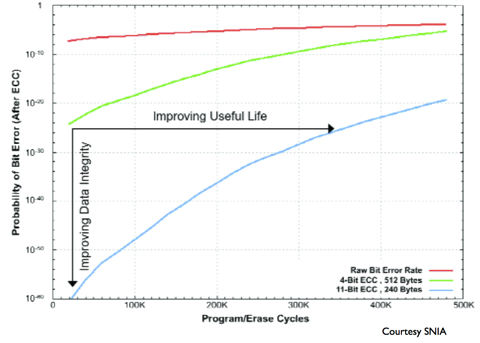

Like so many storage specs, that 10k write spec for MLC flash is a statistical one that can be improved upon by more robust ECC, as this chart from SNIA shows:

But the most important way to improve upon it is to increase the capacity of the SSD. Because wear leveling spreads writes across all of the flash, doubling the size of the SSD doubles the total amount of data you can write to it.
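The capacity point reduces to a one-line formula. This sketch assumes ideal wear leveling and treats write amplification (extra flash writes the controller performs beyond what the host sends) as an input; the drive sizes and the 2.0 write amplification are hypothetical, and 10,000 cycles is the MLC figure from the text.

```python
def lifetime_writes_tb(capacity_gb: float, pe_cycles: int,
                       write_amplification: float = 1.0) -> float:
    """Host data (TB) writable before the average cell reaches its rated P/E cycles.

    Assumes ideal wear leveling (every block worn evenly). write_amplification
    is the ratio of flash writes to host writes; 1.0 is an idealized lower bound.
    """
    return capacity_gb * pe_cycles / write_amplification / 1000


for cap in (80, 160):  # hypothetical drive sizes in GB
    tb = lifetime_writes_tb(cap, pe_cycles=10_000, write_amplification=2.0)
    print(f"{cap} GB drive: ~{tb:,.0f} TB of host writes")
```

Because capacity appears linearly in the formula, doubling it doubles the write budget whatever values you assume for the other two inputs.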

As to what that is in years, the industry is still figuring out how to spec that. The best vendor spec I’ve seen so far has been from Intel – 5 years at 20 GB of writes per day.
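Converting a "GB per day for N years" rating into a total write budget, and back into years for your own workload, is simple arithmetic. This sketch assumes the rated total is what bounds drive life and that life scales linearly with daily writes; only the 20 GB/day and 5 years come from Intel's spec as quoted above, and the function names are hypothetical.

```python
DAYS_PER_YEAR = 365


def rated_total_writes_gb(gb_per_day: float, years: float) -> float:
    """Total host writes implied by an 'X GB/day for Y years' endurance rating."""
    return gb_per_day * DAYS_PER_YEAR * years


def years_of_life(total_writes_gb: float, your_gb_per_day: float) -> float:
    """How long that write budget lasts at your own daily write volume."""
    return total_writes_gb / (your_gb_per_day * DAYS_PER_YEAR)


budget = rated_total_writes_gb(20, 5)  # Intel's rating as quoted: 20 GB/day for 5 years
print(f"total budget: {budget:,} GB")
for daily in (10, 20, 40):             # hypothetical workloads, GB written per day
    print(f"{daily} GB/day -> {years_of_life(budget, daily)} years")
```

At 10 GB/day the same budget would last 10 years; at 40 GB/day, 2.5 years.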

Courteous comments welcome, of course. I enjoyed the webinar – a new experience for me – and not just because I got paid. The crew at Nimble was a pleasure to work with. Here’s a link to the webinar.

Hi Robin,

I believe the new Intel MLC consumer SSDs can withstand 20GB/day for 5 years, not 2.

In disk arrays, systems that use dedicated parity drives (RAID-4 or RAID-DP) and avoid the extra writes of RAID-5 and RAID-6, like those from my employer, NetApp, can also help prolong SSD life.

Otherwise, go with RAID-10.

Thx

D

Right you are! Thanks!

I’ll correct in the post.

Robin

Enterprise-class SSD suppliers are required to support 10 full-drive writes per day for 5 years: a 100GB (usable) SSD must be able to handle 1TB of writes per day. I have recently begun to see NAND devices (both drives and PCIe cards) specified this way in marketing collateral.
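The drive-writes-per-day arithmetic above can be checked in a few lines. The function name is hypothetical; the 100 GB, 10 writes per day and 5 years come from this comment, while the 80 GB drive used for contrast is an assumed size.

```python
from __future__ import annotations


def dwpd_to_writes(capacity_gb: float, dwpd: float, years: float) -> tuple[float, float]:
    """Convert a drive-writes-per-day rating into (GB per day, total TB over the period)."""
    per_day_gb = capacity_gb * dwpd
    total_tb = per_day_gb * 365 * years / 1000
    return per_day_gb, total_tb


# Enterprise requirement: 10 full-drive writes/day for 5 years on 100 GB usable.
per_day, total = dwpd_to_writes(100, 10, 5)
print(f"{per_day:,} GB/day, {total:,} TB over 5 years")

# For contrast: a 20 GB/day rating on a hypothetical 80 GB drive, expressed as DWPD.
print(f"{20 / 80} drive writes per day")
```

That works out to 1,825 TB written over five years, while 20 GB/day on an 80 GB drive is only 0.25 drive writes per day.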

Note that this does not necessarily mean that every usable block in the SSD is overwritten 10 times daily. In fact, it is more likely that the 1TB of writes is directed at a fraction of the total capacity. To avoid early death of chips and planes, the SSD logic must remap those writes, and even move unmodified blocks around, to keep wear even across the components.
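The remapping described above is usually called wear leveling: dynamic wear leveling steers new writes to lightly worn blocks, and static wear leveling moves unmodified (cold) data off lightly worn blocks so those blocks can share the load. The toy model below illustrates both. The class name, its threshold rule and the block counts are hypothetical and far simpler than any real SSD controller; wear is modeled as the number of times a physical block is programmed.

```python
class ToyWearLeveler:
    """Toy flash translation layer with dynamic and static wear leveling (hypothetical)."""

    def __init__(self, num_physical: int, num_logical: int, threshold: int):
        assert num_physical > num_logical, "need spare blocks to remap into"
        self.wear = [0] * num_physical
        self.l2p = {lba: lba for lba in range(num_logical)}  # logical -> physical
        self.free = set(range(num_logical, num_physical))     # spare physical blocks
        self.threshold = threshold

    def write(self, lba: int) -> None:
        # Dynamic wear leveling: put the new data in the least-worn free block
        # instead of reprogramming the same physical block every time.
        new = min(self.free, key=lambda p: (self.wear[p], p))
        self.free.remove(new)
        self.free.add(self.l2p[lba])  # old copy's block becomes free for reuse
        self.l2p[lba] = new
        self.wear[new] += 1
        self._static_level(just_written=new)

    def _static_level(self, just_written: int) -> None:
        # Static wear leveling: cold (unmodified) data pins lightly worn blocks.
        # If the gap grows past the threshold, move that data onto the most-worn
        # free block so the lightly worn block can absorb future writes.
        in_use = [p for p in self.l2p.values() if p != just_written]
        if not in_use or not self.free:
            return
        coldest = min(in_use, key=lambda p: (self.wear[p], p))
        most_worn_free = max(self.free, key=lambda p: (self.wear[p], p))
        if self.wear[most_worn_free] - self.wear[coldest] <= self.threshold:
            return
        lba = next(l for l, p in self.l2p.items() if p == coldest)
        self.free.remove(most_worn_free)
        self.free.add(coldest)
        self.l2p[lba] = most_worn_free
        self.wear[most_worn_free] += 1


# Hammer a single logical block, the "fraction of the capacity" case above.
ftl = ToyWearLeveler(num_physical=8, num_logical=6, threshold=4)
for _ in range(1_000):
    ftl.write(0)
print(ftl.wear)  # without remapping, one physical block would take all 1,000 writes
```

Even though every host write targets one logical block, the wear ends up spread across all eight physical blocks rather than piling 1,000 programs onto one.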

Maintaining high performance under a heavy erase/write/remap workload is challenging, and few drives handle it well today.