The crack StorageMojo analyst team has finally named a StorageMojo FAST 15 Best Paper. It was tough to get agreement this year because of the many excellent contenders. Here’s a rundown of the most interesting before a more detailed explication of the winner.

CalvinFS: Consistent WAN Replication and Scalable Metadata Management for Distributed File Systems impressed with its ambition.

Analysis of the ECMWF Storage Landscape, an analysis of a 100PB active archive, impressed with its scale.

FlashGraph: Processing Billion-Node Graphs on an Array of Commodity SSDs answered important questions about big data and flash.

Reliable, Consistent, and Efficient Data Sync for Mobile Apps holds out hope of a fix for a major failure of most sync services.

The mostly EMC paper RAIDShield: Characterizing, Monitoring, and Proactively Protecting Against Disk Failures offered useful insight on drive failure modes based on EMC’s internal support records – something StorageMojo has been agitating for these many years past.

Towards SLO Complying SSDs Through OPS Isolation offered the long needed observation that

. . . performance SLOs [Service Level Objectives] cannot be satisfied with current commercial SSDs. We show that garbage collection is the source of this problem and that this cannot be easily controlled because of the interaction between VMs.

And Skylight — A Window on Shingled Disk Operation – the FAST Best Paper winner – definitely deserves a post. But there can only be one StorageMojo Best Paper.

Best Paper

The winner is Having Your Cake and Eating It Too: Jointly Optimal Erasure Codes for I/O, Storage and Network-bandwidth, by K. V. Rashmi, Preetum Nakkiran, Jingyan Wang, Nihar B. Shah, and Kannan Ramchandran, all of the University of California, Berkeley.

The paper explores a holistic view of high-scale storage, simultaneously optimizing I/O, capacity and network bandwidth.

Our design builds on top of a class of powerful practical codes, called the product-matrix-MSR codes. Evaluations show that our proposed design results in a significant reduction the number of I/Os consumed during reconstructions (a 5× reduction for typical parameters), while retaining optimality with respect to storage, reliability, and network bandwidth.

In a Reed-Solomon 7+1 RAID 5 (7 data blocks and 1 parity block) the loss of a single data block causes 7 data reads and 7 times the size of single block in bandwidth consumption. When the loss is a terabyte+ disk, the glacial pace of reconstruction is mute testament to this feature of Reed-Solomon codes.

Dimakis et al. introduced minimum-storage-regeneration (MSR) codes, that can reduce the data transfer for reconstruction by 2/3rds or more.

However, the I/O overhead of MSR codes can be much higher than the Reed-Solomon codes used in current RAID arrays and some scale-out storage. For disk-based systems, that’s a problem.

The paper proposes product-matrix reconstruct-by-transfer (RBT)codes that achieve optimal system resource utilization. They also offer an algorithm that converts any product-matrix vanilla code into an RBT code.

Performance

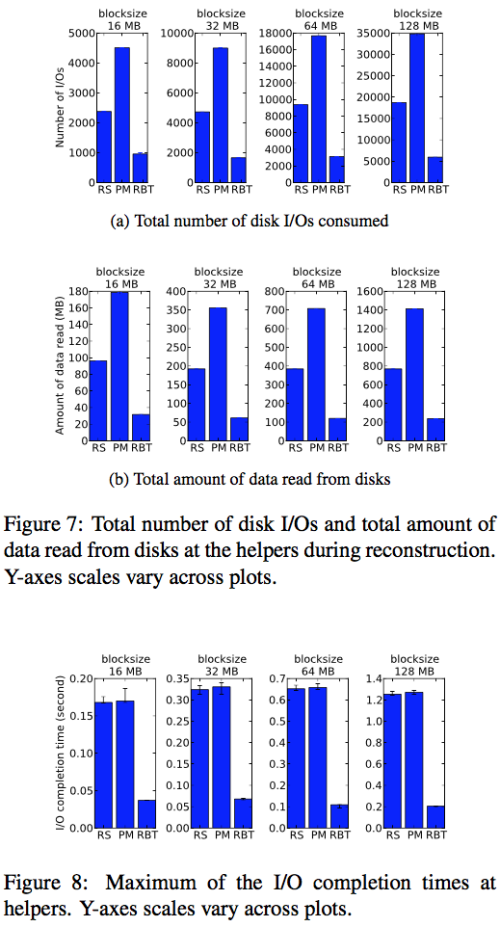

The paper offers some graphs showing the results of experiments with Reed-Solomon (RS), product-matrix (PM) and RBT codes carried out on Amazon EC2 instances:

The StorageMojo take

Disks are going to be with us for decades to come for cost and streaming performance. Networks – typically ethernet – are a limited and costly resource as well. Learning how to optimize both in scale-out systems is necessary.

The shift to high-IOPS media, like flash drives, means cheap I/Os on expensive media. But that doesn’t change anything for disk-based scale-out storage where massive capacity guarantees that data reconstruction will be common.

For future research I’d like to see more on the latency impact of advanced erasure codes. As object storage continues to displace file servers latency will become a critical issue. Update: K.V. Rashmi was nice enough to let me know that they are indeed working on the latency issue. Good to know! End update.

Courteous comments welcome, of course.

I agree with your assessments in general, but wanted to add a little to your comments about your favored Best paper, about MSR. With the high-capacity of disks, the odds of having a second failure during a recovery operation are nearly 100%, so any technique that results in having to read less data, is a real win.

I also liked the SHARDS paper (Waldspurger et al), where the authors have used hashes of blocks to create a sampling algorithm that they then feed to the classic Mattson Missed Cache Rate algorithm. Using their sampling, they can calculate MCR in near real time, instead of days without caching.

Hi Robin.

Thanks for the great FAST roundup.

I liked the VM/SSD GC paper. I almost feel guilty piling on VMs or SSD (since as a DBA I can find so many reasons to disparage them), but now I have another arrow in my quiver.

On another topic, maybe you can do a post on whitebox switches in the data center, specifically responding to a quote in this article at http://www.theregister.co.uk/2015/05/14/cisco_q3_2014_results/ ,”In response to the threat posted by the emerging white-box market, Chambers told the earnings call that Cisco has “moved from selling boxes … to partnering with customers on their outcomesâ€.

Sounds to me like Cisco is a hostage to its historical margins and has decided it’s not financially worthwhile to compete at all.

I’ve heard of AmaGoogFace developing their own 10G swiches, but that was to sever their relationship with Cisco, not to “partner on outcomes.” 🙂

Rik writes: “With the high-capacity of disks, the odds of having a

second failure during a recovery operation are nearly 100%,”

Really? Citation please, hee.

Robin:

“When the loss is a terabyte+ disk, the glacial pace of reconstruction is mute testament to this feature of Reed-Solomon codes.”

The answer is sub-LUN RAID. XIV in some senses, IBM’s DDP?,

InfiniRAID. Other frame implementations?

InfiniRAID rebuild time of 15 minutes with 6 TB drives.

http://www.zdnet.com/article/infinidat-shakes-up-enterprise-storage-market/

Future-proofed as larger drives will have the same rebuild time with

raid group portions of the underlying physical. Maybe they could get it

down to less than 5 minutes with RBT. But it wouldn’t appear to

matter other than marketing.

Sofware: IBM’s GNR. 100000 disk systems have several rebuilds

going on all the time and one of the few places triple parity makes

sense. MTDL of 10000 years IIRC.

Rob,

Indeed, modern erasure code implementations have fixed the rebuild issues for large drives and that is all to the good. We are rapidly approaching a digital divide in storage, where new technical requirements will leave older implementations behind, trapped by architectural limitations. XIV and Infinidat won’t have that problem.

Robin

If I may .. a double-dip.

EMC XtremIO 4.0 blurb says:

“Sustains two simultaneous SSD failures per X-Brick”

Trusting Trevor’s/EarthwormJim’s research:

http://www.theregister.co.uk/2015/05/07/flash_banishes_the_spectre_of_the_unrecoverable_data_error/

“Enterprise SSD error rates are 10^17 bits or an error every 12.5PB.”

Their RAID implementation in XTremIO (XDP) gives them

flexibility in rebuilding and they speak to multiple drive failure

scenarios. You wonder what they were thinking or anticipating, a

second SSD read-error on rebuild would be extremely rare.

SSD’s don’t have near the vulnerability and won’t require the storage

architectural re-work that very large spinning disks do.

PMSR codes (Product-matrix-based Minimum Storage Regenerating codes) is a big achievement in erasure-coding theory. However, there is a feature of PMSR codes that is not mentioned in the paper and may make them unsuitable for general storage systems. That is low code rate which is no more than 1/2, which means to store data of size 1GB requires to store the same size of parity data (1GB redundancy information) as well.

The constrained of low rate with PMSR is indeed mentioned by the authors. The logic is when you’re ready to take storage overhead nearly twice e.g 1:1.9, then you’ll get most stable erasure codes. However, this overhead may not be acceptable to the storage systems for purely commercial point of view.