What is Software Defined Memory?

A converged memory and storage architecture that lets applications access storage as if it were memory, and memory as if it were storage.

Unlike most of the software-defined-x menagerie, SDM isn't simply another layer that virtualizes existing infrastructure for some magical benefit. It addresses the oncoming reality of large non-volatile memories (e.g., 3D XPoint) that can be used as either memory or storage.

OK, 3D XPoint. What else?

NVDIMMs have been around for a few years, but have been highly proprietary. Not a recipe for widespread adoption.

But JEDEC standardized NVM support last year and the BIOS changes needed to support NVM are now reaching the market from Supermicro and HPE. Microsoft is on the case as well.

Hybrid NVDIMMs are the most popular. They pair DRAM and NAND flash with some kind of power backup, a battery or supercapacitor, that keeps the NVDIMM powered long enough (10-100 ms) for the DRAM's data to be moved to the flash. There are five NVDIMM standards, though, including all-flash, DRAM with system-accessible flash, MRAM NVDIMM and a generic RRAM standard for new technologies.

What Plexistor brings to the NV table

Plexistor offers a new file system that works directly with devices and replaces all I/O layers.

That’s a big conceptual leap, so let’s break it down.

- It is a POSIX file system: no application changes needed.

- Supports DRAM, NV memory, flash and disk.

- Single name space atop those devices.

- Tiering performed at file, not block, level.

- No page cache; no block abstraction.

- Treats memory+storage as a single layer.
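Two items on that list, a single name space and file-level tiering, fit together naturally: applications address files only by path, and the file system decides which device each whole file lives on. The toy Python model below sketches that idea under loudly stated assumptions: four named tiers, an access count as the only "heat" signal, and a greedy hottest-first placement. The class and method names (`TieredNamespace`, `create`, `access`, `rebalance`) are hypothetical, and this is not how Plexistor actually implements tiering.

```python
from dataclasses import dataclass, field

# Device tiers, fastest first. Names are illustrative assumptions.
TIERS = ("dram", "nvm", "flash", "disk")


@dataclass
class FileEntry:
    size: int      # bytes; the whole file lives on exactly one tier
    tier: str
    hits: int = 0  # access count, used as a crude "heat" signal


@dataclass
class TieredNamespace:
    """Toy model: one namespace of paths spread across several device tiers."""

    capacity: dict  # tier name -> capacity in bytes
    files: dict = field(default_factory=dict)  # path -> FileEntry

    def _used(self, tier: str) -> int:
        return sum(f.size for f in self.files.values() if f.tier == tier)

    def create(self, path: str, size: int) -> str:
        """Place a new file on the fastest tier that can hold all of it."""
        if path in self.files:
            raise FileExistsError(path)
        for tier in TIERS:
            if self._used(tier) + size <= self.capacity[tier]:
                self.files[path] = FileEntry(size, tier)
                return tier
        raise OSError(f"no tier has room for {path}")

    def access(self, path: str) -> str:
        """Look a file up by path alone; callers never name a device."""
        entry = self.files[path]
        entry.hits += 1
        return entry.tier

    def rebalance(self) -> None:
        """Re-place whole files, hottest first, on the fastest tier with room."""
        used = {tier: 0 for tier in TIERS}
        hottest_first = sorted(self.files.values(), key=lambda f: f.hits, reverse=True)
        for entry in hottest_first:
            for tier in TIERS:
                if used[tier] + entry.size <= self.capacity[tier]:
                    entry.tier = tier
                    used[tier] += entry.size
                    break
            else:
                raise OSError("namespace no longer fits on the available tiers")
```

Because the unit of movement is the file rather than the block, a hot file is never split across devices, and the path an application uses stays the same no matter which tier currently holds the data.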

If you take Storage Class Memory seriously, there is no reason to treat SCM and storage differently – it’s all persistent. And if your name space covers both memory and storage, then your I/O problems are much simplified.
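"Access storage as if it were memory" already has a standard POSIX form: memory-map a file and use ordinary loads and stores instead of `read()`/`write()` calls. The Python sketch below shows that pattern with the standard `mmap` and `os` modules; it runs on any POSIX file system. On file systems that support direct access (DAX) to persistent memory, the same code may map the media itself rather than page-cache copies, but whether that happens depends on the file system and how it is mounted, so treat that as an assumption. The helper names `write_via_mmap` and `read_via_syscall` are hypothetical.

```python
import mmap
import os


def write_via_mmap(path: str, data: bytes) -> None:
    """Store `data` in a file by writing through a shared memory mapping."""
    fd = os.open(path, os.O_RDWR | os.O_CREAT, 0o644)
    try:
        os.ftruncate(fd, len(data))  # the file must be as large as the mapping
        if data:                     # a zero-length mapping is not allowed
            with mmap.mmap(fd, len(data)) as mm:
                mm[:] = data  # plain memory stores, no write() system call
                mm.flush()    # msync(): ask the OS to make the stores durable
    finally:
        os.close(fd)


def read_via_syscall(path: str) -> bytes:
    """Read the same file back through the conventional read() path."""
    with open(path, "rb") as f:
        return f.read()
```

The point of the round trip is that the bytes written as memory come back unchanged as file contents: one object, two access styles.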

So you arrive at Plexistor SDM. You can go to their web site and download the Community Edition for free today to run on Linux kernel 4.x and newer. If you have a supported NVDIMM you can run SDM CE in persistent mode; if not, in ephemeral mode, like an AWS instance.

The payoff

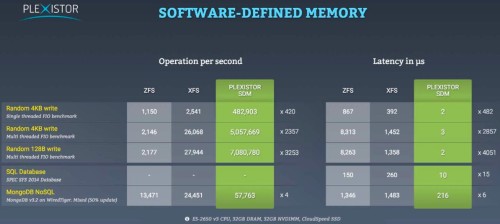

Compacting the current labyrinthine I/O paths into Plexistor SDM pays enormous dividends, such as an average latency of 1-3 μs and a maximum latency of 6-7 μs.

Here are their stats:

Wow.

The StorageMojo take

The IOPS illusion has replaced the capacity illusion as the major impediment to understanding today’s I/O requirements. Latency, not IOPS, is the gating factor in storage performance today.

In other words, customers buy IOPS, but they need much lower – and more predictable – latency. Plexistor is leading the way to the next generation of I/O optimization.
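Little's law makes the IOPS illusion concrete: IOPS equals the number of I/Os in flight divided by the average latency. A slow device with a deep queue can therefore post the same IOPS number as a fast device at queue depth 1, while an application that waits on each I/O in turn sees only the latency. The sketch below is plain arithmetic; the function names and example figures are illustrative assumptions, not measurements of any product.

```python
def iops_from_latency(in_flight: float, latency_s: float) -> float:
    """Little's law for storage: throughput = concurrency / latency.

    in_flight: average number of I/Os outstanding (queue depth)
    latency_s: average time each I/O spends in the system, in seconds
    """
    if latency_s <= 0:
        raise ValueError("latency must be positive")
    return in_flight / latency_s


def serial_chain_time(n_ios: int, latency_s: float) -> float:
    """Wall-clock time for n dependent I/Os, each waiting on the previous one.

    Queue depth cannot help here, so only latency matters.
    """
    return n_ios * latency_s
```

With these functions, 100 I/Os in flight at 1 ms each and 1 I/O in flight at 10 μs each both work out to about 100,000 IOPS, yet a chain of 1,000 dependent I/Os takes about 1 s on the first device and about 10 ms on the second.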

Courteous comments welcome, of course.
