Big data will overwhelm artisanal science. That’s what I conclude from a recent paper that lays out the stark statistics:

Science is a growing system, exhibiting 4% annual growth in publications and 1.8% annual growth in the number of references per publication. Together these growth factors correspond to a 12-year doubling period in the total supply of references, thereby challenging traditional methods of evaluating scientific production, from researchers to institutions.

Given 4% annual growth, the number of publications doubles in ≈18 years. But references per publication grow at only 1.8% a year, so the length of a typical reference list doubles only every ≈39 years. Each paper therefore cites an ever-smaller share of the literature. At some point, reference lists will fall so far behind research production that papers will become hit-or-miss affairs, unable to accurately portray the current state of knowledge.
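The doubling times follow from the standard constant-growth formula, doubling time = ln 2 / ln(1 + r). Here is a minimal sketch that reproduces them from the two rates in the quoted abstract. The function name is my own, and the "relative share cited" loop is a rough proxy I'm assuming for illustration (reference-list length divided by yearly publication count), not a metric from the paper. It prints roughly 17.7, 38.9 and 12.1 years; the last figure is the paper's 12-year doubling of total references.

```python
import math

def doubling_time(annual_rate: float) -> float:
    """Years for a quantity growing at a constant annual rate to double."""
    return math.log(2) / math.log(1 + annual_rate)

PUB_GROWTH = 0.04    # annual growth in publications (from the quoted abstract)
REF_GROWTH = 0.018   # annual growth in references per publication

# Total references = publications x references per publication,
# so the two growth factors multiply.
total_ref_growth = (1 + PUB_GROWTH) * (1 + REF_GROWTH) - 1

print(f"publications:           {doubling_time(PUB_GROWTH):.1f} years")
print(f"references/publication: {doubling_time(REF_GROWTH):.1f} years")
print(f"total references:       {doubling_time(total_ref_growth):.1f} years")

# Hypothetical coverage proxy: reference-list length relative to the
# yearly number of publications, normalised to 1.0 at year 0.
for years in (0, 12, 25, 50):
    share = ((1 + REF_GROWTH) / (1 + PUB_GROWTH)) ** years
    print(f"after {years:2d} years, relative share cited: {share:.2f}")
```

Because references per paper grow more slowly than the literature, the proxy shrinks every year, which is the arithmetic behind "hit-or-miss" reference lists.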

The paper The Memory of Science: Inflation, Myopia, and the Knowledge Network, by Raj K. Pan, Alexander M. Petersen, Fabio Pammolli, and Santo Fortunato (researchers from Aalto University, Finland; the School for Advanced Studies, Lucca, Italy; the University of California, Merced; and Indiana University), takes a long and large view. The team

. . . analyzed a citation network comprised of 837 million references produced by 32.6 million publications over the period 1965-2012, . . .

in order to analyze trends in how research is cited and used. The trends they found will, I believe, accelerate with the growth of automated data collection and analysis.

Over this half-century period we observe a narrowing range of attention – both classic and recent literature are being cited increasingly less, pointing to the important role of socio-technical processes. . . . In particular, we show how perturbations to the growth rate of scientific output – i.e. following from the new layer of rapid online publications – affects the reference age distribution and the functionality of the vast science citation network as an aid for the search & retrieval of knowledge.
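Why would a change in the growth rate shift the reference age distribution at all? A toy model makes the mechanical part visible. This is a hypothetical sketch, not the authors' model: it assumes publications grow as (1 + g)^t and that a new paper picks references uniformly at random from everything published in the previous `horizon` years (both the function and its parameters are my own). Under those assumptions the mean reference age approaches (1 + g)/g for a long horizon, so faster growth, such as a new layer of rapid online publications, skews references toward recent work. It does not capture the paper's finding that classic and recent literature are both cited increasingly less.

```python
def mean_reference_age(growth: float, horizon: int = 200) -> float:
    """Mean age of references under a hypothetical uniform-citation model.

    Assumes publications grow as (1 + growth)**t and that a new paper
    draws references uniformly from everything published 1..horizon
    years earlier.
    """
    ages = range(1, horizon + 1)
    # Relative size of the cohort published `age` years ago.
    weights = [(1 + growth) ** -age for age in ages]
    return sum(age * w for age, w in zip(ages, weights)) / sum(weights)

for g in (0.02, 0.04, 0.08):
    print(f"growth {g:.0%}: mean reference age ≈ {mean_reference_age(g):.1f} years")
```

Doubling the growth rate from 4% to 8% roughly halves the mean reference age in this model, purely because recent cohorts make up a larger share of the pool.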

Going deep, not wide

The authors are looking at historical trends – and not looking forward to a future with Big Data and AI-driven analysis. But the historical trends are difficult enough.

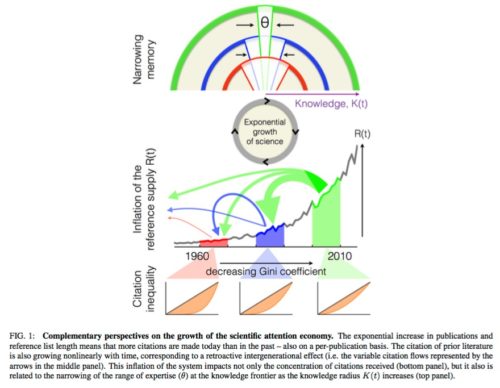

They posit that science has an attention economy, and as research output has grown – especially due to China’s large investment in science and research, but also due to GDP growth everywhere – the growth of knowledge has forced researchers to narrow their focus. That is a trend since the beginning of the Enlightenment.

Here’s their illustrative chart of the process, showing how reduction in income inequality globally has led to an explosion in research and the narrowing of scientific attention:

The StorageMojo take

Our current system for the diffusion of knowledge is breaking down. How are we going to fix it?

Google’s mission, “to organize the world’s information and make it universally accessible and useful,” may no longer be enough. The ultimate problem is that human bandwidth doesn’t scale.

Sure, Really Smart People (RSPs) can ingest and make sense of much more information than most people, and they may come up with new paradigms that let us organize knowledge in more digestible ways. But the advent of automated data generation, collection, analysis and, finally, AI-driven knowledge will overwhelm human knowledge processing.

One solution is to add another layer that “virtualizes” knowledge domains for interdisciplinary scrambling, creating an interface between the deep and the broad. That is, in fact, what we’ve been doing for the last 75 years or so. But it isn’t enough.

So Google – or someone else with the next money-spinning machine and an appetite for moon-shot projects – is going to have to help a large group of RSPs figure out how to artificially augment human cognition. That’s a challenge for the 21st century.

Courteous comments welcome, of course.

Exactly – augmenting human cognition will be key. Think about your everyday work as a programmer (or writer, or devops engineer, etc.): you need to access some system, link to some article, or look up a note you made at a conference. You have to go out to your storage (email, Google search, etc.) to get the information.

Imagine the first people who can recall that directly, as if it’s in their memory, and just get to work. Their productivity will be astounding, and everyone who doesn’t know the tech or doesn’t get the connection will be left behind.