Last year StorageMojo interviewed Violin Memory CEO Don Basile. He noted that as flash feature sizes shrank, NAND would get slower as well as lose endurance.

Intellectually that is correct, but it isn’t an easy concept. Flash stores a physical thing – electrons – and smaller features mean fewer electrons, lower endurance and more difficult reads. But for those of us accustomed to Moore’s Law, it was hard to imagine how it would play out.

Fast forward 18 months. Violin has migrated from 32nm flash to 19nm. They tell me their new array is denser, faster and more power efficient despite slower flash – with the same number of chips.

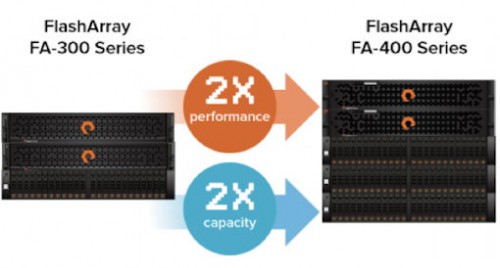

Meanwhile, Pure Storage has this graphic on their website:

Twice the performance with 3x the drives? Maybe there is a problem as flash gets slower.
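A quick back-of-the-envelope check of that reading, taking the graphic at face value. The 2x and 3x factors are simply the ones mentioned above, and the function name is made up for illustration; nothing here comes from Pure's published specs.

```python
def per_drive_ratio(perf_factor: float, drive_factor: float) -> float:
    """Relative performance per drive after a generation change.

    perf_factor:  new array performance / old array performance
    drive_factor: new drive count / old drive count
    """
    return perf_factor / drive_factor


# Assumed reading of the graphic: 2x the performance, 3x the drives.
ratio = per_drive_ratio(perf_factor=2.0, drive_factor=3.0)
print(f"per-drive performance vs. previous generation: {ratio:.2f}x")
```

The ratio only means something if array performance actually scales with drive count, which the graphic alone does not establish.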

Here’s a StorageMojo Video White Paper that lays out the issues in less than 5 minutes.

The StorageMojo take

StorageMojo suggested last year that SSDs were not optimal for storage arrays. Not everyone agreed.

Proponents argued that SSDs’ massive volumes, low costs and architectural simplicity would inevitably overwhelm whatever performance advantages designers could achieve through a clean-sheet non-SSD architecture. Some went so far as to assure me privately that Violin could never migrate to denser flash while SSD-based designs would automatically upgrade as the SSDs did. Today Violin has migrated, SSDs are denser, and it appears SSD arrays may also be slower.

It will be interesting to see how other SSD-based arrays fare in the months ahead. StorageMojo will be watching.

Courteous comments welcome, of course. Happy to hear other views on the SSD array performance issue.

Disclosure: Violin paid me for this and previous video white papers, but the facts speak for themselves. I’ve been a fan of Violin’s architecture since they briefed me in 2008.

I question the credence of anyone paid to speak in a biased way about a technology who then follows it up with “but I have always liked the technology”. Lost credibility IMO.

How about a resilient box? Who cares about hero numbers of 4K reads at some ridiculous IOPS number if upgradability via NDU is risky or problematic at best? Let me pull the array out of the rack, CRACK my array case, and replace VIMMs, hopefully quickly enough to prevent overheating. Wow! But let’s keep talking about stupid metrics that don’t matter. Do you want to yell SQUIRREL or should I?

How about layered software that 85% of the enterprise would like to leverage? Is it Symantec or is it FalconStor NSS that can be bolted on for these layered features? How well does that work? Will this impact latency?

At the heart of this, Violin, Kaminario, TMS, EMC’s Thunder (now abandoned), Fusion ION, etc. were designed for Tier 0 environments, sacrificing HA and features (dedup, compression, replication, encryption, snaps, clones, etc.) for the sake of ultimate performance at a jaw-dropping price. Customers driven by ultimate performance don’t care about features and/or cost. FWIW, EMC bowing out of the ultra-high-performance Tier 0 market might suggest the enterprise wants features without compromise at 1ms or less. I think that is significant compared to traditional spinning rust today, don’t you?

Any reason why Violin doesn’t publish all sorts of best practices, solution guides, reference architectures, etc.? Given the product is so awesome in your mind, why doesn’t Violin’s website overflow with all sorts of documentation? Transparency is a great thing.

So take off your financed Violin beer goggles and realize that Violin might be good from far but far from good. Heck, I would say nice things about someone paying me. I might even overlook the ugly and obvious, but I am not you… Great invaluable post.

Just an FYI. That graphic is just demonstrating that the array can support more shelves AND is faster. There is no correlation between number of drives and speed. The published IOPS and latency numbers can be achieved with just one shelf OR three shelves.

Phil, I’d love to have a representative of Pure write in about the meaning of the graphic and their current specs. The graphic is open to multiple interpretations.

Robin

Hi Robin,

Thanks for the opportunity to comment and for leveraging the image from our site, it seems as though everyone in the industry loves to compare themselves to Pure….we’re honored!

To answer your specific question on the performance/scale differences between our FA-300 array (shipped May, 2012) and our FA-400 array (shipped May, 2013), you can see a comparison of both performance and capacity specs right here: http://www.purestorage.com/flash-array/tech-specs.html

Our performance is determined by the generation of controllers, not by how much flash is attached, so don’t read too much into the particular image you got from our site; look at the specs or data sheet instead.

Unlike vendors who are tied to exotic hardware architectures that require new ASICs or FPGAs with every generation of their product (slowing time to market), Pure takes advantage of industry-standard Intel processors and best-of-breed SSDs….both of which improve rapidly year after year. In fact, we have built our whole architecture around this flexible and modular approach, which delivers some interesting advantages compared to the “appliance” form factor flash guys:

– Incremental performance upgrades: our FA-300 customers were able to double the performance of their arrays by moving to FA-400 controllers non-disruptively. If you buy a flash appliance, you are stuck with the performance you buy on day 1. Pure arrays can be made faster and faster, year after year, with in-place upgrades to the controllers, leveraging your day 1 investments in flash.

– Incremental capacity upgrades: Pure customers can start with our smallest systems (~10 TBs usable) and grow non-disruptively to our largest systems (~100TBs usable today, and much larger tomorrow), again without downtime. If you buy a flash appliance, you are stuck with the capacity you buy on day 1, unless you take downtime and swap-out for different flash modules.

– Non-disruptive software upgrades: NDU is a must for any scaled deployment of storage; there is no outage window for an array which hosts 10s or 100s of applications. Pure customers can non-disruptively upgrade software, swap failed flash modules, and expand performance/capacity, all online, and all without performance degradation.

– Mix/match generations of flash. Pure is constantly releasing advances in flash as new SSDs come out, and our architecture was designed to be able to mix/match generations of flash. If you deploy a Pure Storage FlashArray for 5 years and expand as you go, you’ll end up with many different generations of flash inside the array over time – and that’s just fine. When cheaper/denser flash devices come out next year, will I be able to put them inside my existing (running) flash appliance to scale at better economics?

While the ultra-density of some of the flash appliances is indeed novel, as I discuss the trade-offs with enterprise customers, they tend to care a whole lot more about the resiliency and software features of a flash device vs. sacrificing these attributes for ultra-density…or said differently, they want “Flash Arrays” not “Flash Appliances”.

Hope this contributed to the conversation, and thanks for keeping the debate lively!

–Matt Kixmoeller, VP, Products @ Pure Storage

PeterJ, I can’t recall having worked with you. We pride ourselves on our technical expertise and have direct access to some of the most talented engineers I’ve met (who are devoted 24/7 to resolving any issue). Hope you’ll try us out again sometime.

Robin, I’ve been reading your blog for years. Not happy about you being on Violin’s payroll, but I’ve always enjoyed reading your blog.

Hi Peter,

Care to explain how you purchased a product from EMC that is not yet out of BETA? I’ve asked to test the EMC product – and yes it can be tested, but NO – you are NOT allowed to put a production workload on it! So did you purchase an extremely expensive piece of tin that you can do nothing with but look at?….. or do you work for EMC and are feeling a little uncomfortable about the risk Pure poses to you?

Ben, after looking into PeterJ’s comment further it appeared to me that it came from a troll. I have removed it. My apologies.

Thanks for reading StorageMojo.

Robin

Robin Harris:

You have on multiple occasions stressed that SSDs are the “wrong way” and that their speed and density are lower than systems like Violin Memory’s 6XXX series.

Yet Nimbus Data has higher IOPS, higher density and higher bandwidth, and they are using SSDs.

Violin Memory 6000:

Max size: 64 TiB (21.3 TiB per Unit)

Max IOPS: 750,000

Max bandwidth: 4 GB/s

datasheet: http://www.violin-memory.com/wp-content/uploads/Violin-Datasheet-6000.pdf?d=1

Nimbus Data Gemini:

Max size: 43.7 TiB (21.8 TiB per Unit)

Max IOPS: 2,000,000

Max bandwidth: 12 GB/s

datasheet: http://www.nimbusdata.com/resources/literature/Nimbus_Gemini_All_Flash.pdf
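For what it's worth, the headline ratios implied by the figures quoted above can be worked out in a few lines. This is only a sketch: the numbers are copied from this comment rather than re-checked against the datasheets, and the dictionary layout and key names are made up for illustration.

```python
# Figures as quoted in this comment (not independently verified).
specs = {
    "Violin Memory 6000": {
        "tib_per_unit": 21.3,
        "max_iops": 750_000,
        "max_gb_per_s": 4,
    },
    "Nimbus Data Gemini": {
        "tib_per_unit": 21.8,
        "max_iops": 2_000_000,
        "max_gb_per_s": 12,
    },
}

violin = specs["Violin Memory 6000"]
nimbus = specs["Nimbus Data Gemini"]

# Ratio of each quoted Nimbus figure to the corresponding Violin figure.
ratios = {key: nimbus[key] / violin[key] for key in violin}

for key, value in ratios.items():
    print(f"{key}: Nimbus/Violin = {value:.2f}")
```

Peak datasheet numbers are often measured under different conditions, so these ratios are rough at best.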

I am not affiliated with any storage vendor nor any company selling storage. Opinions expressed are solely my own and do not express the views or opinions of my employer.

Hi Robin.

Regarding the hecklers above … obviously you get paid by storage vendors, as you work as a consultant in that industry. I feel your disclosures are adequate for readers; just ensure they are also clearly spelled out to your vendor sponsors, along with the fact that you’re a blogger trying to balance news and fairness.

Thanks, James B.